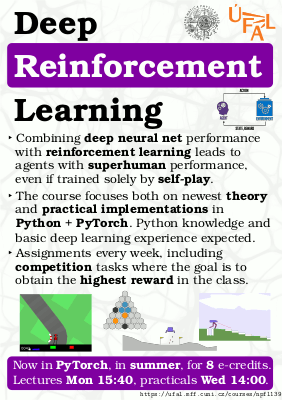

Deep Reinforcement Learning – Summer 2023/24

In recent years, reinforcement learning has been combined with deep neural networks, giving rise to game agents with super-human performance (for example for Go, chess, or StarCraft II, capable of being trained solely by self-play), datacenter cooling algorithms 50% more efficient than trained human operators, and faster code for sorting or matrix multiplication. The goal of the course is to introduce reinforcement learning employing deep neural networks, focusing both on the theory and on practical implementations.

Python programming skills and basic PyTorch/TensorFlow skills are required (the latter can be obtained in the Deep Learning course). No previous knowledge of reinforcement learning is necessary.

About

SIS code: NPFL139

Semester: summer

E-credits: 8

Examination: 3/4 C+Ex

Guarantor: Milan Straka

Timespace Coordinates

- lecture: the lecture is held on Monday 15:40 in S5; first lecture is on Feb 19

- practicals: the practicals take place on Wednesday 14:00 in S5; first practicals are on Feb 21

- consultations: entirely optional consultations take place on Tuesday 15:40 in S4; first consultations are on Feb 27

All lectures and practicals will be recorded and available on this website.

Lectures

1. Introduction to Reinforcement Learning Slides PDF Slides Lecture MonteCarlo Questions bandits monte_carlo

2. Value and Policy Iteration, Monte Carlo, Temporal Difference Slides PDF Slides Lecture Q-learning Questions policy_iteration policy_iteration_exact policy_iteration_mc_estarts policy_iteration_mc_egreedy q_learning

3. Off-Policy Methods, N-step, Function Approximation Slides PDF Slides Lecture Questions importance_sampling td_algorithms q_learning_tiles lunar_lander

4. Function Approximation, Deep Q Network, Rainbow Slides PDF Slides Lecture Questions q_network car_racing

5. Rainbow II, Distributional RL Slides PDF Slides Lecture Questions

6. Policy Gradient Methods Slides PDF Slides Lecture Questions reinforce reinforce_baseline cart_pole_pixels

8. PAAC, DDPG, TD3, SAC Slides PDF Slides Lecture Questions paac paac_continuous ddpg

9. Eligibility traces, Impala Slides PDF Slides Lecture Questions walker walker_hardcore cheetah humanoid humanoid_standup

10. PPO, R2D2, Agent57 Slides PDF Slides Lecture trace_algorithms ppo

11. UCB, Monte Carlo Tree Search, AlphaZero Slides PDF Slides Lecture Questions az_quiz

12. MuZero, AlphaZero Policy Target, Gumbel-Max, GumbelZero Slides PDF Slides Lecture GumbelZero Questions az_quiz_cpp az_quiz_randomized pisqorky

13. PlaNet, ST and Gumbel-softmax, DreamerV2, DreamerV3 Slides PDF Slides Lecture Questions

14. MARL, External Memory Slides PDF Slides Lecture mappo memory_game memory_game_rl

License

Unless otherwise stated, teaching materials for this course are available under CC BY-SA 4.0.

The lecture content, including references to study materials.

The main study material is Reinforcement Learning: An Introduction, second edition, by Richard S. Sutton and Andrew G. Barto (referred to as RLB). It is available online and also as a hardcopy.

References to study materials cover all theory required at the exam, and sometimes even more – the references in italics cover topics not required for the exam.

1. Introduction to Reinforcement Learning

Feb 19 Slides PDF Slides Lecture MonteCarlo Questions bandits monte_carlo

- History of RL [Chapter 1 of RLB]

- Multi-armed bandits [Sections 2-2.6 of RLB]

- Markov Decision Process [Sections 3-3.3 of RLB]

- Policies and Value Functions [Sections 3.5-3.6 of RLB]

2. Value and Policy Iteration, Monte Carlo, Temporal Difference

Feb 26 Slides PDF Slides Lecture Q-learning Questions policy_iteration policy_iteration_exact policy_iteration_mc_estarts policy_iteration_mc_egreedy q_learning

- Value Iteration [Sections 4 and 4.4 of RLB]

- Proof of convergence only in slides

- Policy Iteration [Sections 4.1-4.3 of RLB]

- Generalized Policy Iteration [Section 4.6 of RLB]

- Monte Carlo Methods [Sections 5-5.4 of RLB]

- Model-free and model-based methods, using state-value or action-value functions [Chapter 8 before Section 8.1, and Section 6.8 of RLB]

- Temporal-difference methods [Sections 6-6.3 of RLB]

- Sarsa [Section 6.4 of RLB]

- Q-learning [Section 6.5 of RLB]

3. Off-Policy Methods, N-step, Function Approximation

Mar 4 Slides PDF Slides Lecture Questions importance_sampling td_algorithms q_learning_tiles lunar_lander

- Off-policy Monte Carlo Methods [Sections 5.5-5.7 of RLB]

- Expected Sarsa [Section 6.6 of RLB]

- N-step TD policy evaluation [Section 7.1 of RLB]

- N-step Sarsa [Section 7.2 of RLB]

- Off-policy n-step Sarsa [Section 7.3 of RLB]

- Tree backup algorithm [Section 7.5 of RLB]

- Function approximation [Sections 9-9.3 of RLB]

- Tile coding [Section 9.5.4 of RLB]

- Linear function approximation [Section 9.4 of RLB, without the Proof of Convergence of Linear TD(0)]

4. Function Approximation, Deep Q Network, Rainbow

Mar 11 Slides PDF Slides Lecture Questions q_network car_racing

- Semi-Gradient TD methods [Sections 9.3, 10-10.2 of RLB]

- Off-policy function approximation TD divergence [Sections 11.2-11.3 of RLB]

- Deep Q Network [Volodymyr Mnih et al.: Human-level control through deep reinforcement learning]

- Double Deep Q Network (DDQN) [Hado van Hasselt et al.: Deep Reinforcement Learning with Double Q-learning]

- Prioritized Experience Replay [Tom Schaul et al.: Prioritized Experience Replay]

- Dueling Deep Q Network [Ziyu Wang et al.: Dueling Network Architectures for Deep Reinforcement Learning]

5. Rainbow II, Distributional RL

Mar 18 Slides PDF Slides Lecture Questions

- Noisy Nets [Meire Fortunato et al.: Noisy Networks for Exploration]

- Distributional Reinforcement Learning [Marc G. Bellemare et al.: A Distributional Perspective on Reinforcement Learning]

- Rainbow [Matteo Hessel et al.: Rainbow: Combining Improvements in Deep Reinforcement Learning]

- QR-DQN [Will Dabney et al.: Distributional Reinforcement Learning with Quantile Regression]

- IQN [Will Dabney et al.: Implicit Quantile Networks for Distributional Reinforcement Learning]

6. Policy Gradient Methods

Mar 25 Slides PDF Slides Lecture Questions reinforce reinforce_baseline cart_pole_pixels

- Policy Gradient Methods [Sections 13-13.1 of RLB]

- Policy Gradient Theorem [Section 13.2 of RLB]

- REINFORCE algorithm [Section 13.3 of RLB]

- REINFORCE with baseline algorithm [Section 13.4 of RLB]

- OP-REINFORCE [Dibya Ghosh, Marlos C. Machado, Nicolas Le Roux: An operator view of policy gradient methods]

- Actor-Critic methods [Section 13.5 of RLB, without the eligibility traces variant]

- A3C and asynchronous RL [Volodymyr Mnih et al.: Asynchronous Methods for Deep Reinforcement Learning]

7. Easter Monday

Apr 01

8. PAAC, DDPG, TD3, SAC

Apr 08 Slides PDF Slides Lecture Questions paac paac_continuous ddpg

- PAAC [Alfredo V. Clemente et al.: Efficient Parallel Methods for Deep Reinforcement Learning]

- Gradient methods with continuous actions [Section 13.7 of RLB]

- Deterministic policy gradient theorem (DPG) [David Silver et al.: Deterministic Policy Gradient Algorithms]

- Deep deterministic policy gradient (DDPG) [Timothy P. Lillicrap et al.: Continuous Control with Deep Reinforcement Learning]

- Twin delayed deep deterministic policy gradient (TD3) [Scott Fujimoto et al.: Addressing Function Approximation Error in Actor-Critic Methods]

- Soft actor-critic (SAC) [Tuomas Haarnoja et al.: Soft Actor-Critic: Off-Policy Maximum Entropy Deep Reinforcement Learning with a Stochastic Actor]

9. Eligibility traces, Impala

Apr 15 Slides PDF Slides Lecture Questions walker walker_hardcore cheetah humanoid humanoid_standup

- Eligibility traces [Sections 12, 12.1, 12.3, 12.8, 12.9 of RLB]

- TD(λ) [Section 12.2 of RLB]

- The V-trace algorithm, IMPALA [Lasse Espeholt et al.: IMPALA: Scalable Distributed Deep-RL with Importance Weighted Actor-Learner Architectures]

- PBT [Max Jaderberg et al.: Population Based Training of Neural Networks]

- PopArt reward normalization [Matteo Hessel et al.: Multi-task Deep Reinforcement Learning with PopArt]

10. PPO, R2D2, Agent57

Apr 22 Slides PDF Slides Lecture trace_algorithms ppo

- Natural policy gradient (NPG) [Sham Kakade: A Natural Policy Gradient]

- Truncated natural policy gradient (TNPG), Trust Region Policy Optimization (TRPO) [John Schulman et al.: Trust Region Policy Optimization]

- PPO algorithm [John Schulman et al.: Proximal Policy Optimization Algorithms]

- Transformed rewards [Tobias Pohlen et al.: Observe and Look Further: Achieving Consistent Performance on Atari]

- Recurrent Replay Distributed DQN (R2D2) [Steven Kapturowski et al.: Recurrent Experience Replay in Distributed Reinforcement Learning]

- Retrace [Rémi Munos et al.: Safe and Efficient Off-Policy Reinforcement Learning]

- Never Give Up [Adrià Puigdomènech Badia et al.: Never Give Up: Learning Directed Exploration Strategies]

- Agent57 [Adrià Puigdomènech Badia et al.: Agent57: Outperforming the Atari Human Benchmark]

11. UCB, Monte Carlo Tree Search, AlphaZero

Apr 29 Slides PDF Slides Lecture Questions az_quiz

- UCB [Section 2.7 of RLB]

- Monte-Carlo tree search [Guillaume Chaslot et al.: Monte-Carlo Tree Search: A New Framework for Game AI]

- AlphaZero [David Silver et al.: A general reinforcement learning algorithm that masters chess, shogi, and Go through self-play]

12. MuZero, AlphaZero Policy Target, Gumbel-Max, GumbelZero

May 6 Slides PDF Slides Lecture GumbelZero Questions az_quiz_cpp az_quiz_randomized pisqorky

- MuZero [Julian Schrittwieser et al.: Mastering Atari, Go, Chess and Shogi by Planning with a Learned Model]

- AlphaZero as regularized policy optimization [Jean-Bastien Grill et al.: Monte-Carlo Tree Search as Regularized Policy Optimization]

- GumbelZero [Ivo Danihelka et al.: Policy Improvement by Planning with Gumbel]

13. PlaNet, ST and Gumbel-softmax, DreamerV2, DreamerV3

May 13 Slides PDF Slides Lecture Questions

- PlaNet [D. Hafner et al.: Learning Latent Dynamics for Planning from Pixels]

- Straight-Through estimator [Yoshua Bengio, Nicholas Léonard, Aaron Courville: Estimating or Propagating Gradients Through Stochastic Neurons for Conditional Computation]

- Gumbel softmax [Eric Jang, Shixiang Gu, Ben Poole: Categorical Reparameterization with Gumbel-Softmax, Chris J. Maddison, Andriy Mnih, Yee Whye Teh: The Concrete Distribution: A Continuous Relaxation of Discrete Random Variables]

- DreamerV2 [D. Hafner et al.: Mastering Atari with Discrete World Models]

- DreamerV3 [D. Hafner et al.: Mastering Diverse Domains through World Models]

14. MARL, External Memory

May 20 Slides PDF Slides Lecture mappo memory_game memory_game_rl

- Multi-Agent Reinforcement Learning [A nice overview is given in the diploma thesis Cooperative Multi-Agent Reinforcement Learning]

- MERLIN model [Greg Wayne et al.: Unsupervised Predictive Memory in a Goal-Directed Agent]

- FTW agent for multiplayer CTF [Max Jaderberg et al.: Human-level performance in first-person multiplayer games with population-based deep reinforcement learning]

Requirements

To pass the practicals, you need to obtain at least 80 points, excluding the bonus points. Note that all surplus points (both bonus and non-bonus) will be transferred to the exam. In total, assignments for at least 120 points (not including the bonus points) will be available, and if you solve all the assignments (any non-zero amount of points counts as solved), you automatically pass the exam with grade 1.

Environment

The tasks are evaluated automatically using the ReCodEx Code Examiner.

The evaluation is performed using Python 3.11, Gymnasium 1.0.0a1 and PyTorch 2.2.0. You should install the exact versions of these packages yourself. ReCodEx additionally provides TensorFlow 2.16.1, TensorFlow Probability 0.24.0, Keras 3, and JAX 0.4.25.

Teamwork

Solving assignments in teams (of size at most 3) is encouraged, but everyone has to participate (it is forbidden not to work on an assignment and then submit a solution created by other team members). All members of the team must submit in ReCodEx individually, but can have exactly the same sources/models/results. Each such solution must explicitly list all members of the team to allow plagiarism detection using this template.

No Cheating

Cheating is strictly prohibited and any student found cheating will be punished. The punishment can involve failing the whole course, or, in grave cases, being expelled from the faculty. While discussing assignments with any classmate is fine, each team must complete the assignments themselves, without using code they did not write (unless explicitly allowed). Of course, inside a team you are allowed to share code and submit identical solutions. Note that all students involved in cheating will be punished, so if you share your source code with a friend, both you and your friend will be punished. That also means that you should never publish your solutions.

bandits

Deadline: Mar 05, 22:00 3 points

Implement the ε-greedy strategy for solving multi-armed bandits.

Start with the bandits.py template, which defines the MultiArmedBandits environment with the following three methods:

- reset(): reset the environment

- step(action) → reward: perform the chosen action in the environment, obtaining a reward

- greedy(epsilon): return True with probability 1-epsilon

Your goal is to implement the following solution variants:

- alpha = 0: perform ε-greedy search, updating the estimates using averaging.

- alpha ≠ 0: perform ε-greedy search, updating the estimates using a fixed learning rate alpha.

Note that the initial estimates should be set to a given value, and epsilon can be zero, in which case purely greedy actions are used.
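A minimal sketch of the two update rules, assuming the environment interface listed above. ToyBandit is a hypothetical stand-in written only so the snippet runs on its own (it is not the course's MultiArmedBandits), and update_estimate and run_episode are illustrative names, not part of the template.

```python
import numpy as np


class ToyBandit:
    """Hypothetical stand-in for MultiArmedBandits: Gaussian arms with unit variance."""

    def __init__(self, arms: int, seed: int = 42) -> None:
        self.arms = arms
        self._generator = np.random.RandomState(seed)
        self._means = self._generator.normal(0.0, 1.0, size=arms)

    def reset(self) -> None:
        pass

    def step(self, action: int) -> float:
        return float(self._generator.normal(self._means[action], 1.0))

    def greedy(self, epsilon: float) -> bool:
        # True with probability 1 - epsilon, mirroring the described method.
        return self._generator.uniform() >= epsilon


def update_estimate(estimate: float, count: int, reward: float, alpha: float) -> float:
    """alpha == 0 means sample averaging (step 1/count); otherwise a fixed step alpha."""
    step_size = 1.0 / count if alpha == 0 else alpha
    return estimate + step_size * (reward - estimate)


def run_episode(env, steps: int, alpha: float, epsilon: float, initial: float, seed: int = 0) -> float:
    """Play one epsilon-greedy episode and return the average obtained reward."""
    generator = np.random.RandomState(seed)
    estimates = np.full(env.arms, initial, dtype=np.float64)
    counts = np.zeros(env.arms, dtype=np.int64)
    env.reset()
    total = 0.0
    for _ in range(steps):
        if env.greedy(epsilon):
            action = int(np.argmax(estimates))
        else:
            action = int(generator.randint(env.arms))
        reward = env.step(action)
        total += reward
        counts[action] += 1
        estimates[action] = update_estimate(estimates[action], counts[action], reward, alpha)
    return total / steps
```

With alpha = 0 the first reward from an arm completely overwrites its initial estimate, so the initial value mainly influences which arms get tried early on; with a fixed alpha it decays only geometrically.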

Note that your results may be slightly different, depending on your CPU type and whether you use a GPU.

python3 bandits.py --alpha=0 --epsilon=0.1 --initial=0

1.39 0.08

python3 bandits.py --alpha=0 --epsilon=0 --initial=1

1.48 0.22

python3 bandits.py --alpha=0.15 --epsilon=0.1 --initial=0

1.37 0.09

python3 bandits.py --alpha=0.15 --epsilon=0 --initial=1

1.52 0.04

monte_carlo

Deadline: Mar 05, 22:00 5 points

Solve the discretized CartPole-v1 environment from the Gymnasium library using the Monte Carlo reinforcement learning algorithm. The gymnasium environments have the following methods and properties:

- observation_space: the description of environment observations

- action_space: the description of environment actions

- reset() → new_state, info: starts a new episode, returning the new state and additional environment-specific information

- step(action) → new_state, reward, terminated, truncated, info: perform the chosen action in the environment, returning the new state, obtained reward, boolean flags indicating a terminal state and episode truncation, and additional environment-specific information

We additionally extend the gymnasium environment by:

- episode: number of the current episode (zero-based)

- reset(start_evaluation=False) → new_state, info: if start_evaluation is True, an evaluation is started

Once you finish training (which you indicate by passing start_evaluation=True to reset), your goal is to reach an average return of 490 during 100 evaluation episodes. Note that the environment prints your 100-episode average return every 10 episodes, even during training.

Start with the monte_carlo.py template, which parses several useful parameters, creates the environment and illustrates the overall usage.

You will also need the wrappers.py module, which wraps the standard gymnasium API with the above-mentioned added features we use.

During evaluation in ReCodEx, three different random seeds will be employed, and you need to reach the required return on all of them. Time limit for each test is 5 minutes.
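One possible shape of the Monte Carlo control pieces, sketched under the assumption that the discretized environment returns integer state indices and that a finished episode has been collected as a list of (state, action, reward) triples. The every-visit variant is shown; the assignment does not prescribe first-visit or every-visit, and epsilon_greedy and monte_carlo_update are hypothetical helper names.

```python
import numpy as np


def epsilon_greedy(q_values: np.ndarray, epsilon: float, generator: np.random.RandomState) -> int:
    """Uniformly random action with probability epsilon, otherwise a greedy one."""
    if generator.uniform() < epsilon:
        return int(generator.randint(len(q_values)))
    return int(np.argmax(q_values))


def monte_carlo_update(q: np.ndarray, counts: np.ndarray, episode, gamma: float = 1.0) -> None:
    """Every-visit Monte Carlo update from one finished episode.

    `episode` is a list of (state, action, reward) triples, where reward is the
    reward obtained after taking action in state; `q` and `counts` have shape
    [num_states, num_actions] and are updated in place.
    """
    g = 0.0
    # Walk the episode backwards so the return G_t = R_{t+1} + gamma * G_{t+1}
    # can be accumulated in a single pass.
    for state, action, reward in reversed(episode):
        g = gamma * g + reward
        counts[state, action] += 1
        # Incremental mean of all returns observed for (state, action).
        q[state, action] += (g - q[state, action]) / counts[state, action]
```

A training loop would call env.reset(), choose actions with epsilon_greedy until terminated or truncated is set, and then pass the collected triples to monte_carlo_update, possibly while decreasing epsilon over time.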

policy_iteration

Deadline: Mar 12, 22:00 2 points

Consider the following gridworld:

Start with policy_iteration.py, which implements the gridworld mechanics by providing the following methods:

- GridWorld.states: return the number of states (11)

- GridWorld.actions: return a list with labels of the actions (["↑", "→", "↓", "←"])

- GridWorld.step(state, action): return possible outcomes of performing the action in a given state, as a list of triples containing
  - probability: probability of the outcome
  - reward: reward of the outcome
  - new_state: new state of the outcome

Implement the policy iteration algorithm, with --steps steps of policy evaluation/policy improvement. During policy evaluation, use the current value function and perform --iterations applications of the Bellman equation. Perform the policy evaluation asynchronously (i.e., update the value function in-place for states s = 0, 1, …). Assume the initial policy is “go North” and the initial value function is zero.
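The evaluation and improvement steps can be sketched as follows, assuming step(state, action) returns (probability, reward, new_state) triples as GridWorld.step does. Here the number of states and actions and the step function are passed in as plain arguments, and toy_step is a hypothetical two-state MDP, not the course gridworld; action 0 plays the role of “go North”. Tie-breaking and sweep order in the reference solution may differ, so this sketch is not guaranteed to reproduce the listings digit for digit.

```python
import numpy as np


def policy_iteration(num_states, num_actions, step, gamma, iterations, steps):
    """Policy iteration with in-place (asynchronous) policy evaluation.

    `step(state, action)` returns a list of (probability, reward, new_state)
    triples. The initial policy always picks action 0 and V starts at zero.
    """
    value = np.zeros(num_states)
    policy = np.zeros(num_states, dtype=np.int64)

    def action_value(state, action):
        return sum(p * (r + gamma * value[s]) for p, r, s in step(state, action))

    for _ in range(steps):
        # Evaluation: `iterations` sweeps of the Bellman expectation equation;
        # value[state] is overwritten at once, so later states use new values.
        for _ in range(iterations):
            for state in range(num_states):
                value[state] = action_value(state, policy[state])
        # Improvement: act greedily w.r.t. the current value function
        # (np.argmax breaks ties in favour of the lowest action index).
        for state in range(num_states):
            policy[state] = np.argmax([action_value(state, a) for a in range(num_actions)])
    return value, policy


def toy_step(state, action):
    """Hypothetical 2-state MDP: in state 0, action 1 moves to the absorbing
    state 1 with reward 1; everything else stays put with reward 0."""
    if state == 0 and action == 1:
        return [(1.0, 1.0, 1)]
    return [(1.0, 0.0, state)]


value, policy = policy_iteration(2, 2, toy_step, gamma=0.9, iterations=10, steps=5)
```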

Note that your results may be slightly different, depending on your CPU type and whether you use a GPU.

python3 policy_iteration.py --gamma=0.95 --iterations=1 --steps=1

0.00↑ 0.00↑ 0.00↑ 0.00↑

0.00↑ -10.00← -10.95↑

0.00↑ 0.00← -7.50← -88.93←

python3 policy_iteration.py --gamma=0.95 --iterations=1 --steps=2

0.00↑ 0.00↑ 0.00↑ 0.00↑

0.00↑ -8.31← -11.83←

0.00↑ 0.00← -1.50← -20.61←

python3 policy_iteration.py --gamma=0.95 --iterations=1 --steps=3

0.00↑ 0.00↑ 0.00↑ 0.00↑

0.00↑ -6.46← -6.77←

0.00↑ 0.00← -0.76← -13.08↓

python3 policy_iteration.py --gamma=0.95 --iterations=1 --steps=10

0.00↑ 0.00↑ 0.00↑ 0.00↑

0.00↑ -1.04← -0.83←

0.00↑ 0.00← -0.11→ -0.34↓

python3 policy_iteration.py --gamma=0.95 --iterations=10 --steps=10

11.93↓ 11.19← 10.47← 6.71↑

12.83↓ 10.30← 10.12←

13.70→ 14.73→ 15.72→ 16.40↓

python3 policy_iteration.py --gamma=1 --iterations=1 --steps=100

74.73↓ 74.50← 74.09← 65.95↑

75.89↓ 72.63← 72.72←

77.02→ 78.18→ 79.31→ 80.16↓

policy_iteration_exact

Deadline: Mar 12, 22:00 2 points

Starting with policy_iteration_exact.py, extend the policy_iteration assignment to perform policy evaluation exactly by solving a system of linear equations. Note that you need to use 64-bit floats because lower precision results in unacceptable error.
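Exact policy evaluation amounts to solving v = r_π + γ P_π v, i.e., (I - γ P_π) v = r_π, where P_π[s, s'] is the probability of moving from s to s' under the policy and r_π[s] is the expected immediate reward. A minimal NumPy sketch, assuming the (probability, reward, new_state) triples described for GridWorld.step and γ < 1 (with γ = 1 and absorbing states the matrix can become singular); toy_step is a hypothetical MDP used only for illustration.

```python
import numpy as np


def evaluate_policy_exactly(num_states, step, policy, gamma):
    """Solve (I - gamma * P_pi) v = r_pi for the values of a deterministic policy.

    `step(state, action)` returns (probability, reward, new_state) triples;
    everything is float64, as lower precision gives a noticeable error.
    """
    transition = np.zeros((num_states, num_states), dtype=np.float64)
    expected_reward = np.zeros(num_states, dtype=np.float64)
    for state in range(num_states):
        for probability, reward, new_state in step(state, policy[state]):
            transition[state, new_state] += probability
            expected_reward[state] += probability * reward
    system = np.eye(num_states, dtype=np.float64) - gamma * transition
    return np.linalg.solve(system, expected_reward)


def toy_step(state, action):
    """Hypothetical 2-state MDP: in state 0, action 0 stays with reward 1 and
    action 1 moves to state 1; state 1 is absorbing with reward 0."""
    if state == 0:
        return [(1.0, 1.0, 0)] if action == 0 else [(1.0, 0.0, 1)]
    return [(1.0, 0.0, 1)]
```

For instance, staying forever in state 0 with reward 1 gives v(0) = 1 / (1 - 0.9) = 10 for γ = 0.9.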

Note that your results may be slightly different, depending on your CPU type and whether you use a GPU.

python3 policy_iteration_exact.py --gamma=0.95 --steps=1

-0.00↑ -0.00↑ -0.00↑ -0.00↑

-0.00↑ -12.35← -12.35↑

-0.85← -8.10← -19.62← -100.71←

python3 policy_iteration_exact.py --gamma=0.95 --steps=2

0.00↑ 0.00↑ 0.00↑ 0.00↑

0.00↑ 0.00← -11.05←

-0.00↑ -0.00↑ -0.00← -12.10↓

python3 policy_iteration_exact.py --gamma=0.95 --steps=3

-0.00↑ 0.00↑ 0.00↑ 0.00↑

-0.00↑ -0.00← 0.69←

-0.00↑ -0.00↑ -0.00→ 6.21↓

python3 policy_iteration_exact.py --gamma=0.95 --steps=4

-0.00↑ 0.00↑ 0.00↓ 0.00↑

-0.00↓ 5.91← 6.11←

0.65→ 6.17→ 14.93→ 15.99↓

python3 policy_iteration_exact.py --gamma=0.95 --steps=5

2.83↓ 4.32→ 8.09↓ 5.30↑

12.92↓ 9.44← 9.35←

13.77→ 14.78→ 15.76→ 16.53↓

python3 policy_iteration_exact.py --gamma=0.95 --steps=6

11.75↓ 8.15← 8.69↓ 5.69↑

12.97↓ 9.70← 9.59←

13.82→ 14.84→ 15.82→ 16.57↓

python3 policy_iteration_exact.py --gamma=0.95 --steps=7

12.12↓ 11.37← 9.19← 6.02↑

13.01↓ 9.92← 9.79←

13.87→ 14.89→ 15.87→ 16.60↓

python3 policy_iteration_exact.py --gamma=0.95 --steps=8

12.24↓ 11.49← 10.76← 7.05↑

13.14↓ 10.60← 10.42←

14.01→ 15.04→ 16.03→ 16.71↓

python3 policy_iteration_exact.py --gamma=0.9999 --steps=5

7385.23↓ 7392.62→ 7407.40↓ 7400.00↑

7421.37↓ 7411.10← 7413.16↓

7422.30→ 7423.34→ 7424.27→ 7425.84↓

policy_iteration_mc_estarts

Deadline: Mar 12, 22:00 2 points

Starting with policy_iteration_mc_estarts.py, extend the policy_iteration assignment to perform policy evaluation by using Monte Carlo estimation with exploring starts. Specifically, we update the action-value function by running a simulation with a given number of steps and using the observed return as its estimate.

The estimation can now be performed model-free (without access to the full MDP dynamics); therefore, GridWorld.step returns a randomly sampled result instead of a full distribution.
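A sketch of one exploring-starts iteration, assuming a hypothetical sample_step(state, action) that returns a sampled (reward, new_state) pair. The real GridWorld.step returns a sampled outcome too, but its exact signature and the order in which the template draws random numbers are not reproduced here, so this will not match the listings digit for digit. All helper names are illustrative.

```python
import numpy as np


def sampled_return(sample_step, state, action, policy, gamma, mc_length):
    """Discounted return of a rollout that starts with (state, action) and
    then follows `policy` for the remaining mc_length - 1 steps."""
    g, discount = 0.0, 1.0
    for _ in range(mc_length):
        reward, state = sample_step(state, action)
        g += discount * reward
        discount *= gamma
        action = policy[state]
    return g


def mc_estarts_step(q, counts, sample_step, policy, gamma, mc_length, generator):
    """One exploring-starts iteration: a uniformly random (state, action) start,
    a running average of the observed returns, and greedy improvement there."""
    state = int(generator.randint(q.shape[0]))
    action = int(generator.randint(q.shape[1]))
    g = sampled_return(sample_step, state, action, policy, gamma, mc_length)
    counts[state, action] += 1
    q[state, action] += (g - q[state, action]) / counts[state, action]
    policy[state] = int(np.argmax(q[state]))


def toy_sample_step(state, action):
    """Hypothetical deterministic 2-state MDP returning (reward, new_state)."""
    if state == 0 and action == 1:
        return 1.0, 1
    return 0.0, state
```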

Note that your results may be slightly different, depending on your CPU type and whether you use a GPU.

python3 policy_iteration_mc_estarts.py --gamma=0.95 --seed=42 --mc_length=100 --steps=1

0.00↑ 0.00↑ 0.00↑ 0.00↑

0.00↑ 0.00↑ 0.00↑

0.00↑ 0.00→ 0.00↑ 0.00↓

python3 policy_iteration_mc_estarts.py --gamma=0.95 --seed=42 --mc_length=100 --steps=10

0.00↑ 0.00↑ 0.00↑ 0.00↑

0.00↑ 0.00↑ -19.50↑

0.27↓ 0.48← 2.21↓ 8.52↓

python3 policy_iteration_mc_estarts.py --gamma=0.95 --seed=42 --mc_length=100 --steps=50

0.09↓ 0.32↓ 0.22← 0.15↑

0.18↑ -2.43← -5.12↓

0.18↓ 1.80↓ 3.90↓ 9.14↓

python3 policy_iteration_mc_estarts.py --gamma=0.95 --seed=42 --mc_length=100 --steps=100

3.09↓ 2.42← 2.39← 1.17↑

3.74↓ 1.66← 0.18←

3.92→ 5.28→ 7.16→ 11.07↓

python3 policy_iteration_mc_estarts.py --gamma=0.95 --seed=42 --mc_length=100 --steps=200

7.71↓ 6.76← 6.66← 3.92↑

8.27↓ 6.17← 5.31←

8.88→ 10.12→ 11.36→ 13.92↓

policy_iteration_mc_egreedy

Deadline: Mar 12, 22:00 2 points

Starting with policy_iteration_mc_egreedy.py, extend the policy_iteration_mc_estarts assignment to perform policy evaluation by using ε-greedy Monte Carlo estimation. Specifically, we update the action-value function by running a simulation with a given number of steps and using the observed return as its estimate.

For the sake of replicability, use the provided GridWorld.epsilon_greedy(epsilon, greedy_action) method, which returns a random action with probability epsilon and otherwise returns the given greedy_action.
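Compared with exploring starts, the rollout now begins in a given state and every action is chosen ε-greedily. The epsilon_greedy function below is a hypothetical stand-in with the semantics described for GridWorld.epsilon_greedy; in the assignment, the provided method should be used instead. Which visited pairs to update is an assumption here: this sketch updates every (state, action) pair of the rollout with the return observed from that point on, and sample_step is again a hypothetical (reward, new_state) sampler.

```python
import numpy as np


def epsilon_greedy(generator, epsilon, greedy_action, num_actions):
    """Stand-in with the described semantics: a uniformly random action with
    probability epsilon, otherwise the given greedy action."""
    if generator.uniform() < epsilon:
        return int(generator.randint(num_actions))
    return int(greedy_action)


def mc_egreedy_update(q, counts, sample_step, start_state, epsilon, gamma, mc_length, generator):
    """Roll out the epsilon-greedy policy derived from q and update every
    visited (state, action) pair with the return observed from that point."""
    trajectory = []
    state = start_state
    for _ in range(mc_length):
        action = epsilon_greedy(generator, epsilon, np.argmax(q[state]), q.shape[1])
        reward, new_state = sample_step(state, action)
        trajectory.append((state, action, reward))
        state = new_state
    g = 0.0
    for state, action, reward in reversed(trajectory):
        g = gamma * g + reward
        counts[state, action] += 1
        q[state, action] += (g - q[state, action]) / counts[state, action]


def toy_sample_step(state, action):
    """Hypothetical deterministic 2-state MDP returning (reward, new_state)."""
    if state == 0 and action == 1:
        return 1.0, 1
    return 0.0, state
```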

Note that your results may be slightly different, depending on your CPU type and whether you use a GPU.

python3 policy_iteration_mc_egreedy.py --gamma=0.95 --seed=42 --mc_length=100 --steps=1

0.00↑ 0.00↑ 0.00↑ 0.00↑

0.00↑ 0.00→ 0.00→

0.00↑ 0.00↑ 0.00→ 0.00→

python3 policy_iteration_mc_egreedy.py --gamma=0.95 --seed=42 --mc_length=100 --steps=10

-1.20↓ -1.43← 0.00← -6.00↑

0.78→ -20.26↓ 0.00←

0.09← 0.00↓ -9.80↓ 10.37↓

python3 policy_iteration_mc_egreedy.py --gamma=0.95 --seed=42 --mc_length=100 --steps=50

-0.16↓ -0.19← 0.56← -6.30↑

0.13→ -6.99↓ -3.51↓

0.01← 0.00← 3.18↓ 7.57↓

python3 policy_iteration_mc_egreedy.py --gamma=0.95 --seed=42 --mc_length=100 --steps=100

-0.07↓ -0.09← 0.28← -4.66↑

0.06→ -5.04↓ -8.32↓

0.00← 0.00← 1.70↓ 4.38↓

python3 policy_iteration_mc_egreedy.py --gamma=0.95 --seed=42 --mc_length=100 --steps=200

-0.04↓ -0.04← -0.76← -4.15↑

0.03→ -8.02↓ -5.96↓

0.00← 0.00← 2.53↓ 4.36↓

python3 policy_iteration_mc_egreedy.py --gamma=0.95 --seed=42 --mc_length=100 --steps=500

-0.02↓ -0.02← -0.65← -3.52↑

0.01→ -11.34↓ -8.07↓

0.00← 0.00← 3.15↓ 3.99↓

q_learning

Deadline: Mar 12, 22:00 4 points

Solve the discretized MountainCar-v0 environment from the Gymnasium library using the Q-learning reinforcement learning algorithm. Note that this task still does not require PyTorch.

The environment methods and properties are described in the monte_carlo assignment.

Once you finish training (which you indicate by passing start_evaluation=True to reset), your goal is to reach an average return of -150 during 100 evaluation episodes.

You can start with the q_learning.py template, which parses several useful parameters, creates the environment and illustrates the overall usage. Note that setting hyperparameters of Q-learning is a bit tricky – I usually start with a larger value of ε (like 0.2 or even 0.5) and then gradually decrease it to almost zero.
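A sketch of the tabular Q-learning update together with one hypothetical way to anneal a hyperparameter such as ε linearly. The names are illustrative, and states are assumed to be integer indices from the discretized environment. Note the distinction the Gymnasium step API makes between terminated and truncated: only a true terminal state should cut off bootstrapping, while a time-limit truncation (MountainCar-v0 has a 200-step limit) still bootstraps from the next state.

```python
import numpy as np


def linear_decay(start: float, end: float, progress: float) -> float:
    """Interpolate linearly from start to end as progress goes from 0 to 1."""
    progress = min(max(progress, 0.0), 1.0)
    return start + (end - start) * progress


def q_learning_update(q, state, action, reward, next_state, terminated, alpha, gamma):
    """One tabular Q-learning step, updating q[state, action] in place.

    Only `terminated` stops bootstrapping; after a time-limit truncation the
    next state still has a meaningful value, so the max over it is kept.
    """
    target = reward if terminated else reward + gamma * np.max(q[next_state])
    q[state, action] += alpha * (target - q[state, action])
```

For example, epsilon = linear_decay(0.5, 0.01, episode / total_episodes) decays ε from 0.5 to 0.01 over a hypothetical total_episodes; the end value and schedule length need tuning.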

During evaluation in ReCodEx, three different random seeds will be employed, and you need to reach the required return on all of them. The time limit for each test is 5 minutes.

importance_sampling

Deadline: Mar 19, 22:00 2 points

Using the FrozenLake-v1 environment, implement Monte Carlo weighted importance sampling to estimate the state-value function of a target policy, which uniformly chooses either action 1 (down) or action 2 (right), utilizing a behaviour policy, which uniformly chooses among all four actions.

Start with the importance_sampling.py template, which creates the environment and generates episodes according to the behaviour policy.
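A minimal sketch of every-visit weighted importance sampling for state values, assuming an episode is available as a list of (state, action, reward) triples (the exact format produced by the template may differ). For V(S_t) the ratio includes the action taken in S_t itself, so it is multiplied in before the update; with the target policy above, each per-step ratio is either 0.5/0.25 = 2 or 0. The helper names are illustrative.

```python
import numpy as np


def target_probs(state, action):
    """Target policy: uniformly chooses action 1 (down) or action 2 (right)."""
    return 0.5 if action in (1, 2) else 0.0


def behaviour_probs(state, action):
    """Behaviour policy: uniform over all four FrozenLake actions."""
    return 0.25


def weighted_is_update(v, c, episode, gamma=1.0):
    """Every-visit weighted importance sampling update of state values.

    `episode` holds (state, action, reward) triples; `c` accumulates the sum
    of importance weights per state, and both arrays are updated in place.
    """
    g, rho = 0.0, 1.0
    for state, action, reward in reversed(episode):
        g = gamma * g + reward
        # For V(S_t) the ratio covers actions A_t, ..., A_{T-1}, so include A_t.
        rho *= target_probs(state, action) / behaviour_probs(state, action)
        if rho == 0.0:
            break  # all earlier time steps would also get zero weight
        c[state] += rho
        v[state] += rho / c[state] * (g - v[state])
```

The early break also avoids dividing by a zero weight sum for states that have never received a non-zero weight.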

Note that your results may be slightly different, depending on your CPU type and whether you use a GPU.

python3 importance_sampling.py --episodes=200

0.00 0.00 0.24 0.32

0.00 0.00 0.40 0.00

0.00 0.00 0.20 0.00

0.00 0.00 0.22 0.00

python3 importance_sampling.py --episodes=5000

0.03 0.00 0.01 0.03

0.04 0.00 0.09 0.00

0.10 0.24 0.23 0.00

0.00 0.44 0.49 0.00

python3 importance_sampling.py --episodes=50000

0.03 0.02 0.05 0.01

0.13 0.00 0.07 0.00

0.21 0.33 0.36 0.00

0.00 0.35 0.76 0.00

td_algorithms

Deadline: Mar 19, 22:00 4 points

Starting with the td_algorithms.py template, implement all of the following n-step TD method variants:

- SARSA, expected SARSA and Tree backup;

- either on-policy (with an ε-greedy behaviour policy) or off-policy (with the same behaviour policy, but a greedy target policy).
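The three targets can be sketched as below, following the n-step formulations of Sections 7.2, 7.3 and 7.5 of RLB. Indices follow a hypothetical convention spelled out in the docstring, and target_probs and behaviour_probs are assumed to be functions returning action distributions as NumPy arrays. The off-policy update would then be q[s, a] += alpha * rho * (G - q[s, a]) with rho from importance_ratio; on-policy, rho = 1 and the target policy is the ε-greedy behaviour policy itself.

```python
import numpy as np


def n_step_target(mode, q, states, actions, rewards, terminated, gamma, target_probs):
    """n-step target for the pair (states[0], actions[0]).

    states[j] and actions[j] are S_{t+j} and A_{t+j}; rewards[j] is R_{t+j+1}.
    Unless the episode terminated, states/actions hold one extra entry for
    the bootstrap step t+k, where k = len(rewards) <= n.
    """
    k = len(rewards)
    if terminated:
        bootstrap = 0.0
    elif mode == "sarsa":
        bootstrap = q[states[k], actions[k]]
    else:  # expected_sarsa and tree_backup bootstrap with an expectation
        bootstrap = target_probs(states[k]) @ q[states[k]]

    if mode in ("sarsa", "expected_sarsa"):
        discounts = gamma ** np.arange(k)
        return float(discounts @ np.asarray(rewards) + gamma**k * bootstrap)

    # Tree backup, computed backwards from its recursive definition.
    g = rewards[k - 1] + gamma * bootstrap
    for i in reversed(range(k - 1)):
        s, a = states[i + 1], actions[i + 1]
        p = target_probs(s)
        g = rewards[i] + gamma * (p @ q[s] - p[a] * q[s, a] + p[a] * g)
    return float(g)


def importance_ratio(mode, states, actions, rewards, target_probs, behaviour_probs):
    """Off-policy correction; tree backup needs none.

    SARSA multiplies the ratios of A_{t+1}, ... up to the bootstrap action;
    expected SARSA drops that last factor, because its bootstrap already
    averages over the target policy.
    """
    if mode == "tree_backup":
        return 1.0
    stop = len(actions) if mode == "sarsa" else len(rewards)
    rho = 1.0
    for j in range(1, stop):
        rho *= target_probs(states[j])[actions[j]] / behaviour_probs(states[j])[actions[j]]
    return rho
```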

Note that your results may be slightly different, depending on your CPU type and whether you use a GPU.

python3 td_algorithms.py --episodes=10 --mode=sarsa --n=1

Episode 10, mean 100-episode return -652.70 +-37.77

python3 td_algorithms.py --episodes=10 --mode=sarsa --n=1 --off_policy

Episode 10, mean 100-episode return -632.90 +-126.41

python3 td_algorithms.py --episodes=10 --mode=sarsa --n=4

Episode 10, mean 100-episode return -715.70 +-156.56

python3 td_algorithms.py --episodes=10 --mode=sarsa --n=4 --off_policy

Episode 10, mean 100-episode return -649.10 +-171.73

python3 td_algorithms.py --episodes=10 --mode=expected_sarsa --n=1

Episode 10, mean 100-episode return -641.90 +-122.11

python3 td_algorithms.py --episodes=10 --mode=expected_sarsa --n=1 --off_policy

Episode 10, mean 100-episode return -633.80 +-63.61

python3 td_algorithms.py --episodes=10 --mode=expected_sarsa --n=4

Episode 10, mean 100-episode return -713.90 +-107.05

python3 td_algorithms.py --episodes=10 --mode=expected_sarsa --n=4 --off_policy

Episode 10, mean 100-episode return -648.20 +-107.08

python3 td_algorithms.py --episodes=10 --mode=tree_backup --n=1

Episode 10, mean 100-episode return -641.90 +-122.11

python3 td_algorithms.py --episodes=10 --mode=tree_backup --n=1 --off_policy

Episode 10, mean 100-episode return -633.80 +-63.61

python3 td_algorithms.py --episodes=10 --mode=tree_backup --n=4

Episode 10, mean 100-episode return -663.50 +-111.78

python3 td_algorithms.py --episodes=10 --mode=tree_backup --n=4 --off_policy

Episode 10, mean 100-episode return -708.50 +-125.63

Note that your results may be slightly different, depending on your CPU type and whether you use a GPU.

python3 td_algorithms.py --mode=sarsa --n=1

Episode 200, mean 100-episode return -235.23 +-92.94

Episode 400, mean 100-episode return -133.18 +-98.63

Episode 600, mean 100-episode return -74.19 +-70.39

Episode 800, mean 100-episode return -41.84 +-54.53

Episode 1000, mean 100-episode return -31.96 +-52.14

python3 td_algorithms.py --mode=sarsa --n=1 --off_policy

Episode 200, mean 100-episode return -227.81 +-91.62

Episode 400, mean 100-episode return -131.29 +-90.07

Episode 600, mean 100-episode return -65.35 +-64.78

Episode 800, mean 100-episode return -34.65 +-44.93

Episode 1000, mean 100-episode return -8.70 +-25.74

python3 td_algorithms.py --mode=sarsa --n=4

Episode 200, mean 100-episode return -277.55 +-146.18

Episode 400, mean 100-episode return -87.11 +-152.12

Episode 600, mean 100-episode return -6.95 +-23.28

Episode 800, mean 100-episode return -1.88 +-19.21

Episode 1000, mean 100-episode return 0.97 +-11.76

python3 td_algorithms.py --mode=sarsa --n=4 --off_policy

Episode 200, mean 100-episode return -339.11 +-144.40

Episode 400, mean 100-episode return -172.44 +-176.79

Episode 600, mean 100-episode return -36.23 +-100.93

Episode 800, mean 100-episode return -22.43 +-81.29

Episode 1000, mean 100-episode return -3.95 +-17.78

python3 td_algorithms.py --mode=expected_sarsa --n=1

Episode 200, mean 100-episode return -223.35 +-102.16

Episode 400, mean 100-episode return -143.82 +-96.71

Episode 600, mean 100-episode return -79.92 +-68.88

Episode 800, mean 100-episode return -38.53 +-47.12

Episode 1000, mean 100-episode return -17.41 +-31.26

python3 td_algorithms.py --mode=expected_sarsa --n=1 --off_policy

Episode 200, mean 100-episode return -231.91 +-87.72

Episode 400, mean 100-episode return -136.19 +-94.16

Episode 600, mean 100-episode return -79.65 +-70.75

Episode 800, mean 100-episode return -35.42 +-44.91

Episode 1000, mean 100-episode return -11.79 +-23.46

python3 td_algorithms.py --mode=expected_sarsa --n=4

Episode 200, mean 100-episode return -263.10 +-161.97

Episode 400, mean 100-episode return -102.52 +-162.03

Episode 600, mean 100-episode return -7.13 +-24.53

Episode 800, mean 100-episode return -1.69 +-12.21

Episode 1000, mean 100-episode return -1.53 +-11.04

python3 td_algorithms.py --mode=expected_sarsa --n=4 --off_policy

Episode 200, mean 100-episode return -376.56 +-116.08

Episode 400, mean 100-episode return -292.35 +-166.14

Episode 600, mean 100-episode return -173.83 +-194.11

Episode 800, mean 100-episode return -89.57 +-153.70

Episode 1000, mean 100-episode return -54.60 +-127.73

python3 td_algorithms.py --mode=tree_backup --n=1

Episode 200, mean 100-episode return -223.35 +-102.16

Episode 400, mean 100-episode return -143.82 +-96.71

Episode 600, mean 100-episode return -79.92 +-68.88

Episode 800, mean 100-episode return -38.53 +-47.12

Episode 1000, mean 100-episode return -17.41 +-31.26

python3 td_algorithms.py --mode=tree_backup --n=1 --off_policy

Episode 200, mean 100-episode return -231.91 +-87.72

Episode 400, mean 100-episode return -136.19 +-94.16

Episode 600, mean 100-episode return -79.65 +-70.75

Episode 800, mean 100-episode return -35.42 +-44.91

Episode 1000, mean 100-episode return -11.79 +-23.46

python3 td_algorithms.py --mode=tree_backup --n=4

Episode 200, mean 100-episode return -270.51 +-134.35

Episode 400, mean 100-episode return -64.27 +-109.50

Episode 600, mean 100-episode return -1.80 +-13.34

Episode 800, mean 100-episode return -0.22 +-13.14

Episode 1000, mean 100-episode return 0.60 +-9.37

python3 td_algorithms.py --mode=tree_backup --n=4 --off_policy

Episode 200, mean 100-episode return -248.56 +-147.74

Episode 400, mean 100-episode return -68.60 +-126.13

Episode 600, mean 100-episode return -6.25 +-32.23

Episode 800, mean 100-episode return -0.53 +-11.82

Episode 1000, mean 100-episode return 2.33 +-8.35

q_learning_tiles

Deadline: Mar 19, 22:00 3 points

Improve the q_learning task performance on the MountainCar-v0 environment using linear function approximation with tile coding.

Your goal is to reach an average return of -110 during 100 evaluation episodes.

The environment methods are described in the q_learning assignment, with the following changes:

- The state returned by the env.step method is a list containing weight indices of the current state (i.e., the feature vector of the state consists of zeros and ones, and only the indices of the ones are returned). The action-value function is therefore approximated as a sum of the weights whose indices are returned by env.step.

- The env.observation_space.nvec returns a list, where the i-th element is the number of weights used by the first i elements of state. Notably, env.observation_space.nvec[-1] is the total number of the weights.

You can start with the q_learning_tiles.py template, which parses several useful parameters and creates the environment.

Implementing Q-learning is enough to pass the assignment, even though both N-step Sarsa and Tree Backup converge a little faster. The default number of tiles in tile encoding (i.e., the size of the list with weight indices) is args.tiles=8, but you can use any number you want (the assignment is solvable with 8).

During evaluation in ReCodEx, three different random seeds will be employed, and you need to reach the required return on all of them. The time limit for each test is 5 minutes.
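A sketch of the semi-gradient Q-learning update for this representation, assuming the weights are stored as a NumPy array of shape [number of weights, number of actions] and that the indices returned for one state are distinct. Dividing the learning rate by the number of tiles is a common convention rather than a requirement of the assignment; the function names are illustrative.

```python
import numpy as np


def q_values(weights, active):
    """Action values of a state given by the indices of its active tiles."""
    return weights[active].sum(axis=0)


def q_learning_tiles_update(weights, active, action, reward, next_active, terminated, alpha, gamma):
    """Semi-gradient Q-learning for a linear model over binary tile features.

    `weights` has shape [num_weights, num_actions]; `active` and `next_active`
    are the weight indices returned for the current and the next state.
    """
    target = reward
    if not terminated:
        target += gamma * np.max(q_values(weights, next_active))
    error = target - q_values(weights, active)[action]
    # The gradient w.r.t. each active weight is 1, so all active weights move
    # by the same amount; dividing by the number of tiles keeps the total
    # change of Q(s, a) equal to alpha * error.
    weights[active, action] += alpha / len(active) * error
```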

lunar_lander

Deadline: Mar 19, 22:00 5 points + 5 bonus

Solve the LunarLander-v2 environment from the Gymnasium library. Note that this task does not require PyTorch.

The environment methods and properties are described in the monte_carlo assignment, but include one additional method:

- expert_trajectory(seed=None) → trajectory: This method generates one expert trajectory, where trajectory is a list of triples (state, action, reward), where the action and reward are None when reaching the terminal state. If a seed is given, the expert trajectory random generator is reset before generating the trajectory.

You cannot change the implementation of this method or use its internals in any other way than just calling expert_trajectory(). Furthermore, you can use this method only during training, not during evaluation.
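One way to use the expert trajectories, sketched under the assumption that you want Monte Carlo targets from them, is to pair each (state, action) with the discounted return that followed it. The helper name expert_returns is hypothetical; it relies only on the triple format described above.

```python
def expert_returns(trajectory, gamma=1.0):
    """Pair each (state, action) of an expert trajectory with its return.

    The last triple describes the terminal state, with action and reward None.
    """
    steps = trajectory[:-1]  # drop the terminal (state, None, None) triple
    result, G = [], 0.0
    for state, action, reward in reversed(steps):
        G = reward + gamma * G
        result.append((state, action, G))
    result.reverse()
    return result
```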

To pass the task, you need to reach an average return of 0 during 1000 evaluation episodes. During evaluation in ReCodEx, three different random seeds will be employed, and you need to reach the required return on all of them. Time limit for each test is 15 minutes.

The task is additionally a competition, and at most 5 points will be awarded according to the relative ordering of your solutions.

You can start with the lunar_lander.py template, which parses several useful parameters, creates the environment and illustrates the overall usage.

In the competition, you should consider the meaning of the environment states to be unknown, so you cannot use any knowledge about how they are created. However, you can learn any such information from the data.

q_network

Deadline: Mar 26, 22:00 5 points

Solve the continuous CartPole-v1 environment from the Gymnasium library using Q-learning with a neural network as a function approximator.

You can start with the q_network.py template, which provides a simple network implementation in PyTorch. Feel free to use TensorFlow or JAX instead, if you like.

The continuous environment is very similar to a discrete one, except that the states are vectors of real-valued observations with shape env.observation_space.shape.

Use Q-learning with a neural network as a function approximator, which for a given state returns state-action values for all actions. You can use any network architecture, but one hidden layer of several dozen ReLU units is a good start. Your goal is to reach an average return of 450 during 100 evaluation episodes.

During evaluation in ReCodEx, two different random seeds will be employed, and you need to reach the required return on all of them. Time limit for each test is 10 minutes (so you can train in ReCodEx, but you can also pretrain your network if you like).
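The core of the Q-learning update can be sketched with NumPy on a batch of transitions; the function names and the batching are assumptions rather than the template's interface. In PyTorch or another framework, the same expressions would be computed on tensors, with the targets treated as constants (not differentiated).

```python
import numpy as np

def q_learning_targets(q_next, rewards, terminated, gamma=1.0):
    """Targets r + gamma * max_a' Q(s', a') for a batch of transitions.

    q_next: [batch, actions] values predicted for the next states.
    terminated: [batch] flags; there is no bootstrapping after a terminal state.
    """
    not_done = 1.0 - np.asarray(terminated, dtype=np.float64)
    return rewards + gamma * not_done * q_next.max(axis=1)

def td_loss(q_pred, actions, targets):
    """Mean squared error between Q(s, a) of the taken actions and the targets."""
    chosen = q_pred[np.arange(len(actions)), actions]
    return np.mean((chosen - targets) ** 2)
```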

car_racing

Deadline: Mar 26, 22:00 6 points + 6 bonus

The goal of this competition is to use Deep Q Networks (and any of the Rainbow improvements) on the more realistic CarRacing-v2 environment from the Gymnasium library.

The supplied car_racing_environment.py provides the environment. The states are RGB np.uint8 images, but you can downsample them even more. The actions are also continuous and consist of an array with the following three elements:

- steer in range [-1, 1];

- gas in range [0, 1];

- brake in range [0, 1]; note that full brake is quite aggressive, so you might consider using less force when braking.

Internally you should probably generate discrete actions and convert them to the required representation before the step call. Alternatively, you might set args.continuous=0, which changes the action space from continuous to 5 discrete actions: do nothing, steer left, steer right, gas, and brake. But you can experiment with a different action space if you want.
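A hedged sketch of the suggested conversion from discrete to continuous actions. The action table, including the half-strength brake, is a hypothetical choice for illustration, not the mapping the environment itself uses for args.continuous=0.

```python
import numpy as np

# Hypothetical discrete action set; each row is [steer, gas, brake].
DISCRETE_ACTIONS = np.array([
    [0.0, 0.0, 0.0],   # do nothing
    [-1.0, 0.0, 0.0],  # steer left
    [1.0, 0.0, 0.0],   # steer right
    [0.0, 1.0, 0.0],   # gas
    [0.0, 0.0, 0.5],   # brake at half force, since full brake is aggressive
], dtype=np.float32)

def to_continuous(action_index):
    """Convert a discrete action index to the [steer, gas, brake] array."""
    return DISCRETE_ACTIONS[action_index].copy()
```

Finer steering or combined gas-and-steer actions can be added as extra rows without changing the rest of the agent.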

The environment also supports frame skipping (args.frame_skipping), which improves its performance (only some frames need to be rendered). Note that ReCodEx respects both args.continuous and args.frame_skipping during evaluation.

In ReCodEx, you are expected to submit an already trained model, which is evaluated on 15 different tracks with a total time limit of 15 minutes. If your average return is at least 300, you obtain 6 points. The task is also a competition, and at most 6 points will be awarded according to relative ordering of your solutions.

The car_racing.py template parses several useful parameters and creates the environment. Note that car_racing_environment.py can be executed directly, in which case you can drive the car using the arrow keys.

Also, you might want to use a vectorized version of the environment for training, which runs several individual environments in separate processes. The template contains instructions on how to create it. The vectorized environment expects a vector of actions and returns a vector of observations, rewards, dones and infos. When one of the environments finishes, it is automatically reset in the next step.
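With a vectorized environment, episode returns have to be tracked separately for each environment. The following hypothetical helper assumes that a step returns NumPy arrays of rewards and dones with one entry per environment. Depending on the exact automatic-reset semantics, the step following a done may already belong to a new episode, so transitions spanning an episode boundary should not be used for bootstrapping.

```python
import numpy as np

class EpisodeReturnTracker:
    """Accumulate per-environment returns from a vectorized environment."""

    def __init__(self, num_envs):
        self.running = np.zeros(num_envs)
        self.finished = []  # returns of completed episodes, in completion order

    def update(self, rewards, dones):
        self.running += rewards
        for i in np.flatnonzero(dones):
            self.finished.append(float(self.running[i]))
            self.running[i] = 0.0  # that environment starts a new episode
```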

reinforce

Deadline: Apr 09, 22:00 4 points

Solve the continuous CartPole-v1 environment from the Gymnasium library using the REINFORCE algorithm.

Your goal is to reach an average return of 490 during 100 evaluation episodes.

Start with the reinforce.py template, which provides a simple network implementation in PyTorch. Feel free to use TensorFlow (reinforce.tf.py) or JAX instead, if you like.

During evaluation in ReCodEx, two different random seeds will be employed, and you need to reach the required return on all of them. Time limit for each test is 5 minutes.
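The REINFORCE update weights the log-probability of each taken action by the return that followed it. A NumPy sketch of the two ingredients (the function names are hypothetical); in an autodiff framework, minimizing the same loss expression on tensors yields the REINFORCE gradient estimate.

```python
import numpy as np

def discounted_returns(rewards, gamma=1.0):
    """G_t = r_{t+1} + gamma * G_{t+1}, computed backwards over one episode."""
    returns = np.zeros(len(rewards))
    G = 0.0
    for t in reversed(range(len(rewards))):
        G = rewards[t] + gamma * G
        returns[t] = G
    return returns

def reinforce_loss(log_probs, returns):
    """Loss whose gradient is the negated REINFORCE estimate.

    log_probs[t] = log pi(a_t | s_t) of the actions actually taken.
    """
    return -np.mean(returns * log_probs)
```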

reinforce_baseline

Deadline: Apr 09, 22:00 3 points

This is a continuation of the reinforce assignment.

Using the reinforce_baseline.py template, solve the continuous CartPole-v1 environment using the REINFORCE with baseline algorithm. The TensorFlow version of the template reinforce_baseline.tf.py is also available.

Using a baseline lowers the variance of the policy gradient estimator, which allows faster training and decreases sensitivity to hyperparameter values. To reflect this effect in ReCodEx, the evaluation phase will automatically start after 200 episodes. Using only 200 episodes for training in this setting is probably too little for the REINFORCE algorithm, but suffices for the variant with a baseline. In this assignment, you must train your agent in ReCodEx using the provided environment only.

Your goal is to reach an average return of 490 during 100 evaluation episodes.

During evaluation in ReCodEx, two different random seeds will be employed, and you need to reach the required return on all of them. Time limit for each test is 5 minutes.
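With a baseline, the return in the policy loss is replaced by the advantage G_t - V(s_t), and the baseline is trained to predict the returns. A NumPy sketch assuming a separate value network (the function name is hypothetical); in an autodiff framework, the values must be detached in the policy loss so that it does not train the baseline.

```python
import numpy as np

def reinforce_with_baseline_losses(log_probs, returns, values):
    """Policy and baseline losses for REINFORCE with a learned baseline.

    values[t] = V(s_t) predicted by the value network; it acts as a constant
    in the policy loss.
    """
    returns, values = np.asarray(returns), np.asarray(values)
    advantages = returns - values
    policy_loss = -np.mean(advantages * log_probs)
    value_loss = np.mean((returns - values) ** 2)
    return policy_loss, value_loss
```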

cart_pole_pixels

Deadline: Apr 09, 22:00 3 points + 4 bonus

The supplied cart_pole_pixels_environment.py generates a pixel representation of the CartPole environment as an np.uint8 image with three channels, with each channel representing one time step (i.e., the current observation and the two previous ones).
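Because each channel is a separate time step, the stacked frames can be fed directly to a convolutional network. A hypothetical preprocessing step, assuming the observation is laid out as [height, width, channels]: it converts the pixels to floats in [0, 1] and moves the channels first, as PyTorch convolutions expect (TensorFlow's default channels-last layout would skip the transpose).

```python
import numpy as np

def preprocess(observation):
    """Convert an [H, W, 3] uint8 frame stack to a float32 [3, H, W] array."""
    return np.transpose(observation, (2, 0, 1)).astype(np.float32) / 255.0
```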

During evaluation in ReCodEx, three different random seeds will be employed, each with a time limit of 10 minutes, and if you reach an average return of at least 450 on all of them, you obtain 3 points. The task is also a competition, and at most 4 points will be awarded according to the relative ordering of your solutions.

The cart_pole_pixels.py template parses several parameters and creates the environment. You are again supposed to train the model beforehand and submit only the trained neural network.

paac

Deadline: Apr 23, 22:00 3 points

Solve the CartPole-v1 environment using the parallel actor-critic algorithm, employing the vectorized environment described in the car_racing assignment.

Your goal is to reach an average return of 450 during 100 evaluation episodes.

Start with the paac.py template, which provides a simple network implementation in PyTorch. Feel free to use TensorFlow or JAX instead, if you like.

During evaluation in ReCodEx, two different random seeds will be employed, and you need to reach the required return on all of them. Time limit for each test is 10 minutes.
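A NumPy sketch of the per-step computation in a parallel actor-critic, assuming one-step bootstrapped returns over all environments of the vectorized environment at once (n-step returns are a common extension). The entropy term and its weight are an assumed regularizer, not something this assignment prescribes.

```python
import numpy as np

def paac_targets(rewards, next_values, terminated, gamma=0.99):
    """One-step bootstrapped returns for a batch of parallel environments."""
    not_done = 1.0 - np.asarray(terminated, dtype=np.float64)
    return rewards + gamma * not_done * next_values

def paac_loss(log_probs, values, targets, entropy, entropy_weight=0.01):
    """Actor, critic, and entropy terms of the parallel actor-critic loss."""
    advantages = targets - values  # constants for the actor (detach them)
    actor_loss = -np.mean(advantages * log_probs)
    critic_loss = np.mean((targets - values) ** 2)
    return actor_loss + critic_loss - entropy_weight * np.mean(entropy)
```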

paac_continuous

Deadline: Apr 23, 22:00 4 points

Solve the MountainCarContinuous-v0 environment using the parallel actor-critic algorithm with continuous actions.

When actions are continuous, env.action_space is a Box space, just like env.observation_space, offering:

- env.action_space.shape, which specifies the shape of actions (you can assume actions are always a 1D vector);

- env.action_space.low and env.action_space.high, which specify the ranges of the corresponding actions.
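A common choice for continuous actions, sketched here as an assumption, is a diagonal Gaussian policy whose network predicts a mean and a standard deviation per action dimension. Samples are clipped to [env.action_space.low, env.action_space.high] before calling env.step, while the log-probability used in the loss is usually computed for the unclipped sample.

```python
import numpy as np

def sample_action(mean, std, low, high, rng):
    """Sample a ~ N(mean, std^2) per dimension; return (raw, clipped) actions."""
    raw = rng.normal(mean, std)
    return raw, np.clip(raw, low, high)

def gaussian_log_prob(action, mean, std):
    """Log-density of a diagonal Gaussian, summed over action dimensions."""
    var = std ** 2
    return np.sum(-0.5 * ((action - mean) ** 2 / var + np.log(2 * np.pi * var)))
```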

Your goal is to reach an average return of 90 during 100 evaluation episodes.

Start with the paac_continuous.py template, which provides a simple network implementation in PyTorch. Feel free to use TensorFlow or JAX instead, if you like.

During evaluation in ReCodEx, two different random seeds will be employed, and you need to reach the required return on all of them. Time limit for each test is 10 minutes.

ddpg

Deadline: Apr 23, 22:00 5 points

Solve the continuous Pendulum-v1 and InvertedDoublePendulum-v5 environments using the deep deterministic policy gradient (DDPG) algorithm.

Your goal is to reach an average return of -200 for Pendulum-v1 and 9000 for InvertedDoublePendulum-v5 during 100 evaluation episodes.
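The two DDPG-specific pieces of the critic update can be sketched as follows: targets computed with the target actor and target critic, and a soft (Polyak) update of the target parameters. The parameter lists and the tau value are hypothetical.

```python
import numpy as np

def ddpg_targets(rewards, next_q, terminated, gamma=0.99):
    """Critic targets r + gamma * Q'(s', mu'(s')).

    next_q holds the target critic's values for actions produced by the
    target actor in the next states.
    """
    not_done = 1.0 - np.asarray(terminated, dtype=np.float64)
    return rewards + gamma * not_done * next_q

def polyak_update(target_params, params, tau=0.005):
    """Soft update theta' <- (1 - tau) * theta' + tau * theta, in place."""
    for target, source in zip(target_params, params):
        target *= 1.0 - tau
        target += tau * source
```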

Start with the ddpg.py template, which provides a simple network implementation in PyTorch. Feel free to use TensorFlow (ddpg.tf.py) or JAX instead, if you like.

During evaluation in ReCodEx, two different random seeds will be employed for both environments, and you need to reach the required return on all of them. Time limit for each test is 10 minutes.

walker

Deadline: Apr 30, 22:00 5 points

In this exercise we explore continuous robot control by solving the continuous BipedalWalker-v3 environment.

Note that the penalty of -100 on crash makes the training considerably slower. Even though DDPG, TD3 and SAC can all be trained with the original rewards, overriding the reward at the end of an episode to 0 speeds up training considerably.
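A minimal sketch of the reward override, applied during training only, assuming a crash is signaled by a reward of exactly -100 together with termination; the original rewards should still be used for evaluation. The replacement argument also allows merely reducing the penalty instead of removing it.

```python
def shaped_reward(reward, terminated, crash_penalty=-100.0, replacement=0.0):
    """Replace the crash penalty at the end of an episode (training only)."""
    if terminated and reward == crash_penalty:
        return replacement
    return reward
```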

In ReCodEx, you are expected to submit an already trained model, which is evaluated with two seeds, each for 100 episodes with a time limit of 10 minutes. If your average return is at least 200, you obtain 5 points.

The walker.py template contains the skeleton for implementing the SAC agent, but you can also solve the assignment with DDPG/TD3. The TensorFlow template walker.tf.py is also available.
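For orientation, the critic target in SAC combines clipped double Q-learning with an entropy bonus. This NumPy sketch assumes a fixed entropy coefficient alpha (it is often tuned automatically instead) and precomputed target-critic values; it is not the template's code.

```python
import numpy as np

def sac_targets(rewards, next_q1, next_q2, next_log_probs, terminated,
                alpha=0.2, gamma=0.99):
    """Soft Q targets for SAC with two target critics.

    next_q1/next_q2 are the target critics' values of an action a' ~ pi(.|s'),
    and next_log_probs = log pi(a'|s') for that same sampled action.
    """
    soft_value = np.minimum(next_q1, next_q2) - alpha * next_log_probs
    not_done = 1.0 - np.asarray(terminated, dtype=np.float64)
    return rewards + gamma * not_done * soft_value
```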

walker_hardcore

Deadline: Apr 30, 22:00 5 points + 5 bonus

As an extension of the walker assignment, solve the hardcore version of the BipedalWalker-v3 environment.

Note that the penalty of -100 on crash can discourage or even stop training, so overriding the reward at the end of an episode to 0 (or substantially reducing the penalty) makes the training considerably easier (I have not surpassed a return of 0 with either TD3 or SAC with the original -100 penalty).

In ReCodEx, you are expected to submit an already trained model, which is evaluated with three seeds, each for 100 episodes with a time limit of 10 minutes. If your average return is at least 100, you obtain 5 points. The task is also a competition, and at most 5 points will be awarded according to relative ordering of your solutions.

The walker_hardcore.py template shows a basic structure of evaluation in ReCodEx, but you most likely want to start either with ddpg.py or with walker.py and just change the env argument to BipedalWalkerHardcore-v3.

cheetah

Deadline: Jun 28, 22:00 (originally Apr 30)

2 points

In this exercise, use the DDPG/TD3/SAC algorithm to solve the HalfCheetah environment. If you start with DDPG, implementing the TD3 improvements should make the hyperparameter search significantly easier. However, in my experience, the SAC algorithm seems to work best.
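Two of the TD3 improvements, target policy smoothing and clipped double Q-learning, can be sketched as follows; the third, delayed policy updates, simply updates the actor and the target networks less often than the critics. The noise values are commonly used defaults, treated here as assumptions.

```python
import numpy as np

def td3_target_actions(next_actions, low, high, rng, noise_std=0.2, noise_clip=0.5):
    """Target policy smoothing: add clipped Gaussian noise to mu'(s')."""
    noise = np.clip(rng.normal(0.0, noise_std, size=next_actions.shape),
                    -noise_clip, noise_clip)
    return np.clip(next_actions + noise, low, high)

def td3_targets(rewards, next_q1, next_q2, terminated, gamma=0.99):
    """Clipped double-Q target: use the smaller of the two target critics."""
    not_done = 1.0 - np.asarray(terminated, dtype=np.float64)
    return rewards + gamma * not_done * np.minimum(next_q1, next_q2)
```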

The template cheetah.py only creates the environment and shows the evaluation in ReCodEx.

In ReCodEx, you are expected to submit an already trained model, which is evaluated with two seeds, each for 100 episodes with a time limit of 10 minutes. If your average return is at least 8000 on all of them, you obtain 2 points.

humanoid

Deadline: Jun 28, 22:00 (originally May 14)

3 points; not required for automatically passing the exam

In this exercise, use the DDPG/TD3/SAC algorithm to solve the Humanoid environment.

The template humanoid.py only creates the environment and shows the evaluation in ReCodEx.

In ReCodEx, you are expected to submit an already trained model, which is evaluated with two seeds, each for 100 episodes with a time limit of 10 minutes. If your average return is at least 8000 on all of them, you obtain 3 points.

humanoid_standup

Deadline: Jun 28, 22:00 (originally May 14)

5 points; not required for automatically passing the exam

In this exercise, use the DDPG/TD3/SAC algorithm to solve the Humanoid Standup environment.

The template humanoid_standup.py only creates the environment and shows the evaluation in ReCodEx.

In ReCodEx, you are expected to submit an already trained model, which is evaluated with two seeds, each for 100 episodes with a time limit of 10 minutes. If your average return is at least 380000 on all of them, you obtain 5 points.

trace_algorithms

Deadline: May 7, 22:00 4 points

Starting with the trace_algorithms.py template, implement the following state value estimations:

- use $n$-step estimates for a given $n$;

- if requested, use eligibility traces with a given $\lambda$;

- allow off-policy correction using importance sampling with control variates, optionally clipping the individual importance sampling ratios by a given threshold.
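The estimates above can be combined into one recursive target for a single state. This sketch rests on stated assumptions: per-decision importance sampling with control variates, a lambda-weighted mix of bootstrapped values and returns, truncation after n steps, and each ratio clipped at an optional threshold. The argument layout is hypothetical and the sketch is not a full V-trace implementation.

```python
def corrected_return(rewards, values, rhos, gamma=1.0, trace_lambda=1.0, clip=None):
    """Off-policy lambda-return with control variates over a truncated window.

    rewards[k] = R_{t+k+1} and rhos[k] = pi(A_{t+k}|S_{t+k}) / b(A_{t+k}|S_{t+k})
    for k = 0..n-1; values[k] = V(S_{t+k}) for k = 0..n. If the episode ends
    inside the window, pass only the steps up to the end and use 0 as the
    final value. With all rhos 1 and trace_lambda 1, this is the n-step return.
    """
    n = len(rewards)
    G = values[n]  # bootstrap from the last state in the window
    for k in reversed(range(n)):
        rho = rhos[k] if clip is None else min(clip, rhos[k])
        continuation = (1 - trace_lambda) * values[k + 1] + trace_lambda * G
        # Control variate: where rho is small, fall back towards V(S_{t+k}).
        G = rho * (rewards[k] + gamma * continuation) + (1 - rho) * values[k]
    return G
```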

Note that your results may be slightly different, depending on your CPU type and whether you use a GPU.

python3 trace_algorithms.py --episodes=50 --n=1

The mean 1000-episode return after evaluation -196.80 +-25.96

python3 trace_algorithms.py --episodes=50 --n=4

The mean 1000-episode return after evaluation -165.45 +-78.01

python3 trace_algorithms.py --episodes=50 --n=8 --seed=62

The mean 1000-episode return after evaluation -180.20 +-61.48

python3 trace_algorithms.py --episodes=50 --n=4 --trace_lambda=0.6

The mean 1000-episode return after evaluation -170.70 +-72.93

python3 trace_algorithms.py --episodes=50 --n=8 --trace_lambda=0.6 --seed=77

The mean 1000-episode return after evaluation -154.24 +-86.67

python3 trace_algorithms.py --episodes=50 --n=1 --off_policy

The mean 1000-episode return after evaluation -189.16 +-46.74

python3 trace_algorithms.py --episodes=50 --n=4 --off_policy

The mean 1000-episode return after evaluation -159.09 +-83.40

python3 trace_algorithms.py --episodes=50 --n=8 --off_policy

The mean 1000-episode return after evaluation -166.82 +-76.04

python3 trace_algorithms.py --episodes=50 --n=1 --off_policy --vtrace_clip=1

The mean 1000-episode return after evaluation -198.50 +-17.93

python3 trace_algorithms.py --episodes=50 --n=4 --off_policy --vtrace_clip=1

The mean 1000-episode return after evaluation -144.76 +-92.48

python3 trace_algorithms.py --episodes=50 --n=8 --off_policy --vtrace_clip=1

The mean 1000-episode return after evaluation -167.63 +-75.87

python3 trace_algorithms.py --episodes=50 --n=4 --off_policy --vtrace_clip=1 --trace_lambda=0.6

The mean 1000-episode return after evaluation -186.28 +-52.05

python3 trace_algorithms.py --episodes=50 --n=8 --off_policy --vtrace_clip=1 --trace_lambda=0.6

The mean 1000-episode return after evaluation -185.67 +-53.04

Note that your results may be slightly different, depending on your CPU type and whether you use a GPU.

python3 trace_algorithms.py --n=1

Episode 100, mean 100-episode return -96.50 +-92.02

Episode 200, mean 100-episode return -53.64 +-76.70

Episode 300, mean 100-episode return -29.03 +-54.84

Episode 400, mean 100-episode return -8.78 +-21.69

Episode 500, mean 100-episode return -14.24 +-41.76

Episode 600, mean 100-episode return -4.57 +-17.56

Episode 700, mean 100-episode return -7.90 +-27.92

Episode 800, mean 100-episode return -2.17 +-16.67

Episode 900, mean 100-episode return -2.07 +-14.01

Episode 1000, mean 100-episode return 0.13 +-13.93

The mean 1000-episode return after evaluation -35.05 +-84.82

python3 trace_algorithms.py --n=4

Episode 100, mean 100-episode return -74.01 +-89.62

Episode 200, mean 100-episode return -4.84 +-20.95

Episode 300, mean 100-episode return 0.37 +-11.81

Episode 400, mean 100-episode return 1.82 +-8.04

Episode 500, mean 100-episode return 1.28 +-8.66

Episode 600, mean 100-episode return 3.13 +-7.02

Episode 700, mean 100-episode return 0.76 +-8.05

Episode 800, mean 100-episode return 2.05 +-8.11

Episode 900, mean 100-episode return 0.98 +-9.22

Episode 1000, mean 100-episode return 0.29 +-9.13

The mean 1000-episode return after evaluation -11.49 +-60.05

python3 trace_algorithms.py --n=8 --seed=62

Episode 100, mean 100-episode return -102.83 +-104.71

Episode 200, mean 100-episode return -5.02 +-23.36

Episode 300, mean 100-episode return -0.43 +-13.33

Episode 400, mean 100-episode return 1.99 +-8.89

Episode 500, mean 100-episode return -2.17 +-16.57

Episode 600, mean 100-episode return -2.62 +-19.87

Episode 700, mean 100-episode return 1.66 +-7.81

Episode 800, mean 100-episode return -7.40 +-36.75

Episode 900, mean 100-episode return -5.95 +-34.04

Episode 1000, mean 100-episode return 3.51 +-7.88

The mean 1000-episode return after evaluation 6.88 +-14.89

python3 trace_algorithms.py --n=4 --trace_lambda=0.6

Episode 100, mean 100-episode return -85.33 +-91.17

Episode 200, mean 100-episode return -16.06 +-39.97

Episode 300, mean 100-episode return -2.74 +-15.78

Episode 400, mean 100-episode return -0.33 +-9.93

Episode 500, mean 100-episode return 1.39 +-9.48

Episode 600, mean 100-episode return 1.59 +-9.26

Episode 700, mean 100-episode return 3.66 +-6.99

Episode 800, mean 100-episode return 2.08 +-7.26

Episode 900, mean 100-episode return 1.32 +-8.76

Episode 1000, mean 100-episode return 3.33 +-7.27

The mean 1000-episode return after evaluation 7.93 +-2.63

python3 trace_algorithms.py --n=8 --trace_lambda=0.6 --seed=77

Episode 100, mean 100-episode return -118.76 +-105.12

Episode 200, mean 100-episode return -21.82 +-49.91

Episode 300, mean 100-episode return -0.59 +-11.21

Episode 400, mean 100-episode return 2.27 +-8.29

Episode 500, mean 100-episode return 1.65 +-8.52

Episode 600, mean 100-episode return 1.16 +-10.32

Episode 700, mean 100-episode return 1.18 +-9.62

Episode 800, mean 100-episode return 3.35 +-7.34

Episode 900, mean 100-episode return 1.66 +-8.67

Episode 1000, mean 100-episode return 0.86 +-8.56

The mean 1000-episode return after evaluation -11.93 +-60.63

python3 trace_algorithms.py --n=1 --off_policy

Episode 100, mean 100-episode return -68.47 +-73.52

Episode 200, mean 100-episode return -29.11 +-34.15

Episode 300, mean 100-episode return -20.30 +-31.24

Episode 400, mean 100-episode return -13.44 +-25.04

Episode 500, mean 100-episode return -4.72 +-13.75

Episode 600, mean 100-episode return -3.07 +-17.63

Episode 700, mean 100-episode return -2.70 +-13.81

Episode 800, mean 100-episode return 1.32 +-11.79

Episode 900, mean 100-episode return 0.78 +-8.95

Episode 1000, mean 100-episode return 1.15 +-9.27

The mean 1000-episode return after evaluation -12.63 +-62.51

python3 trace_algorithms.py --n=4 --off_policy

Episode 100, mean 100-episode return -96.25 +-105.93

Episode 200, mean 100-episode return -26.21 +-74.65

Episode 300, mean 100-episode return -4.84 +-31.78

Episode 400, mean 100-episode return -0.34 +-9.46

Episode 500, mean 100-episode return 1.15 +-8.49

Episode 600, mean 100-episode return 2.95 +-7.20

Episode 700, mean 100-episode return 0.94 +-10.19

Episode 800, mean 100-episode return 0.13 +-9.27

Episode 900, mean 100-episode return 1.95 +-9.69

Episode 1000, mean 100-episode return 1.91 +-7.59

The mean 1000-episode return after evaluation 6.79 +-3.68

python3 trace_algorithms.py --n=8 --off_policy

Episode 100, mean 100-episode return -180.08 +-112.11

Episode 200, mean 100-episode return -125.56 +-124.82

Episode 300, mean 100-episode return -113.66 +-125.12

Episode 400, mean 100-episode return -77.98 +-117.08

Episode 500, mean 100-episode return -23.71 +-69.71

Episode 600, mean 100-episode return -21.44 +-67.38

Episode 700, mean 100-episode return -2.43 +-16.31

Episode 800, mean 100-episode return 2.38 +-7.42

Episode 900, mean 100-episode return 1.29 +-7.78

Episode 1000, mean 100-episode return 0.84 +-8.37

The mean 1000-episode return after evaluation 7.03 +-2.37

python3 trace_algorithms.py --n=1 --off_policy --vtrace_clip=1

Episode 100, mean 100-episode return -71.85 +-75.59

Episode 200, mean 100-episode return -29.60 +-39.91

Episode 300, mean 100-episode return -23.11 +-33.97

Episode 400, mean 100-episode return -12.00 +-21.72

Episode 500, mean 100-episode return -5.93 +-15.92

Episode 600, mean 100-episode return -7.69 +-16.03

Episode 700, mean 100-episode return -2.95 +-13.75

Episode 800, mean 100-episode return 0.45 +-9.76

Episode 900, mean 100-episode return 0.65 +-9.36

Episode 1000, mean 100-episode return -1.56 +-11.53

The mean 1000-episode return after evaluation -24.25 +-75.88

python3 trace_algorithms.py --n=4 --off_policy --vtrace_clip=1

Episode 100, mean 100-episode return -76.39 +-83.74

Episode 200, mean 100-episode return -3.32 +-13.97

Episode 300, mean 100-episode return -0.33 +-9.49

Episode 400, mean 100-episode return 2.20 +-7.80

Episode 500, mean 100-episode return 1.49 +-7.72

Episode 600, mean 100-episode return 2.27 +-8.67

Episode 700, mean 100-episode return 1.07 +-9.07

Episode 800, mean 100-episode return 3.17 +-6.27

Episode 900, mean 100-episode return 3.25 +-7.39

Episode 1000, mean 100-episode return 0.70 +-8.61

The mean 1000-episode return after evaluation 7.70 +-2.52

python3 trace_algorithms.py --n=8 --off_policy --vtrace_clip=1

Episode 100, mean 100-episode return -110.07 +-106.29

Episode 200, mean 100-episode return -7.22 +-32.31

Episode 300, mean 100-episode return 0.54 +-9.65

Episode 400, mean 100-episode return 2.03 +-7.82

Episode 500, mean 100-episode return 1.64 +-8.63

Episode 600, mean 100-episode return 1.54 +-7.28

Episode 700, mean 100-episode return 2.80 +-7.86

Episode 800, mean 100-episode return 1.69 +-7.26

Episode 900, mean 100-episode return 1.17 +-8.59

Episode 1000, mean 100-episode return 2.39 +-7.59

The mean 1000-episode return after evaluation 7.57 +-2.35

python3 trace_algorithms.py --n=4 --off_policy --vtrace_clip=1 --trace_lambda=0.6

Episode 100, mean 100-episode return -81.87 +-87.96

Episode 200, mean 100-episode return -15.94 +-29.31

Episode 300, mean 100-episode return -5.24 +-20.41

Episode 400, mean 100-episode return -1.01 +-12.52

Episode 500, mean 100-episode return 1.09 +-9.55

Episode 600, mean 100-episode return 0.73 +-9.15

Episode 700, mean 100-episode return 3.09 +-7.59

Episode 800, mean 100-episode return 3.13 +-7.60

Episode 900, mean 100-episode return 1.30 +-8.72

Episode 1000, mean 100-episode return 3.77 +-7.11

The mean 1000-episode return after evaluation 6.46 +-17.53

python3 trace_algorithms.py --n=8 --off_policy --vtrace_clip=1 --trace_lambda=0.6

Episode 100, mean 100-episode return -127.86 +-106.40

Episode 200, mean 100-episode return -27.64 +-48.34

Episode 300, mean 100-episode return -12.75 +-35.05

Episode 400, mean 100-episode return -0.38 +-14.28

Episode 500, mean 100-episode return 1.35 +-9.10

Episode 600, mean 100-episode return 0.43 +-10.53

Episode 700, mean 100-episode return 3.11 +-9.26

Episode 800, mean 100-episode return 3.58 +-6.81

Episode 900, mean 100-episode return 1.24 +-8.24

Episode 1000, mean 100-episode return 1.58 +-7.15

The mean 1000-episode return after evaluation 7.93 +-2.67

ppo

Deadline: May 14, 22:00 (originally May 7)

4 points

Implement the PPO algorithm in a single-agent setting. Notably, solve the SingleCollect environment implemented by the multi_collect_environment.py module. To familiarize yourself with it, you can watch a trained agent, and you can run the module directly, controlling the agent with the arrow keys.

In the environment, your goal is to reach a known place, obtaining rewards based on the agent's distance. If the agent continuously occupies the place for some period of time, it gets a large reward and the place is moved randomly. The environment runs for 250 steps and is considered solved if you obtain a return of at least 500.

The ppo.py PyTorch template contains a skeleton of the PPO algorithm implementation. TensorFlow template ppo.tf.py is also available. Regarding the unspecified hyperparameters, I would consider the following ranges:

- batch_size between 64 and 512;

- clip_epsilon between 0.1 and 0.2;

- epochs between 1 and 10;

- gamma between 0.97 and 1.0;

- trace_lambda is usually 0.95;

- envs between 16 and 128;

- worker_steps between tens and hundreds.
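Two ingredients of PPO that the ranges above refer to, generalized advantage estimation (using trace_lambda) and the clipped surrogate objective (using clip_epsilon), can be sketched in NumPy. Both functions are hypothetical helpers for a single environment's rollout, not the template's code.

```python
import numpy as np

def gae(rewards, values, dones, next_value, gamma=0.99, trace_lambda=0.95):
    """Generalized advantage estimation for one environment's rollout.

    values[t] = V(s_t); next_value = V(s_T) after the last step; dones[t]
    marks that the episode ended at step t (no bootstrapping across it).
    Returns (advantages, value targets).
    """
    T = len(rewards)
    advantages = np.zeros(T)
    last = 0.0
    for t in reversed(range(T)):
        v_next = next_value if t == T - 1 else values[t + 1]
        not_done = 1.0 - float(dones[t])
        delta = rewards[t] + gamma * v_next * not_done - values[t]
        last = delta + gamma * trace_lambda * not_done * last
        advantages[t] = last
    return advantages, advantages + np.asarray(values)

def ppo_clip_loss(new_log_probs, old_log_probs, advantages, clip_epsilon=0.2):
    """Negated clipped surrogate objective of PPO."""
    ratio = np.exp(new_log_probs - old_log_probs)
    clipped = np.clip(ratio, 1 - clip_epsilon, 1 + clip_epsilon)
    return -np.mean(np.minimum(ratio * advantages, clipped * advantages))
```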

My implementation trains in approximately three minutes of CPU time.

During evaluation in ReCodEx, two different random seeds will be employed, and you need to reach the average return of 450 on all of them. Time limit for each test is 10 minutes.

az_quiz

Deadline: May 21, 22:00 10 points + 5 bonus

In this competition assignment, use Monte Carlo Tree Search to learn an agent for a simplified version of AZ-kvíz. In our version, the agent does not have to answer questions and we assume that all answers are correct.

The game itself is implemented in the az_quiz.py module (using the default randomized=False constructor argument).

The evaluation in ReCodEx should be implemented by returning an object implementing a method play, which, given an AZ-kvíz instance, returns the chosen move. The interface is illustrated in the az_quiz_player_random.py module, which implements a random agent.

Your solution in ReCodEx is automatically evaluated against a very simple heuristic az_quiz_player_simple_heuristic.py, playing 56 games as a starting player and 56 games as a non-starting player. The time limit for the games is 10 minutes and you should see the win rate directly in ReCodEx. If you achieve at least 95%, you will pass the assignment. A better heuristic az_quiz_player_fork_heuristic.py is also available for your evaluations.

The final competition evaluation will be performed after the deadline by a round-robin tournament. In this tournament, we also consider games where the first move is chosen for the first player (FirstChosen label in ReCodEx, --first_chosen option of the evaluator).

The az_quiz_evaluator.py can be used to evaluate any two given implementations and there are two interactive players available, az_quiz_player_interactive_mouse.py and az_quiz_player_interactive_keyboard.py.

The starting PyTorch template is available in the az_quiz_agent.py module; TensorFlow version az_quiz_agent_tf.py is available too.

To get regular points, you must implement an AlphaZero-style algorithm. However, any algorithm can be used in the competition.
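In an AlphaZero-style search, a child of the current node is usually selected by a PUCT rule that balances its mean value against the network prior, and the normalized root visit counts serve as the policy training target. A pure-Python sketch; the c_puct constant and the zero value for unvisited children are assumptions (other initializations are possible), and the values must be from the perspective of the player moving at the parent.

```python
import math

def puct_select(priors, visit_counts, total_values, c_puct=1.25):
    """Return the index of the child maximizing Q + U.

    total_values[a] is the sum of values backed up through child a, from the
    perspective of the player to move at the parent.
    """
    sqrt_parent = math.sqrt(sum(visit_counts))
    best_action, best_score = None, -math.inf
    for action, (prior, visits, total) in enumerate(zip(priors, visit_counts, total_values)):
        q = total / visits if visits > 0 else 0.0
        u = c_puct * prior * sqrt_parent / (1 + visits)  # exploration bonus
        if q + u > best_score:
            best_action, best_score = action, q + u
    return best_action

def visit_policy(visit_counts):
    """Policy training target: root visit counts normalized to sum to 1."""
    total = sum(visit_counts)
    return [visits / total for visits in visit_counts]
```

Invalid moves can be excluded by giving them no child at all (or a prior of 0 and a very low score).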

az_quiz_cpp

In addition to the Python template for az_quiz, you can also use az_quiz_cpp, which is a directory containing a skeleton of a C++ MCTS and self-play implementation. Utilizing the C++ implementation is not required, but it offers a large speedup (up to 10 times on a multi-core CPU and up to 50-100 times on a GPU). See README.md for more information.

az_quiz_randomized

Deadline: Jun 28, 22:00

5 points; either this or pisqorky is required for automatically passing the exam

Extend the az_quiz assignment to handle the possibility of wrong answers. That is, when choosing a field (an action), you might not claim it; in such a case, the state of the field becomes “failed”. When a “failed” field is chosen as an action by a player, then either

- it is successfully claimed by the player (they “answer correctly”); or

- if the player “answers incorrectly”, the field is claimed by the opposing player; however, in this case, the original player continues playing (i.e., the players do not alternate in this case).

To instantiate this randomized game variant, pass randomized=True to the AZQuiz class of az_quiz.py.

Your goal is to propose how to modify the Monte Carlo Tree Search to properly handle stochastic MDPs. The information about the distribution of possible next states is provided by the AZQuiz.all_moves method, which returns a list of (probability, az_quiz_instance) next states (in our environment, there are always two possible next states).
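One possible approach, not necessarily the one expected in the discussion, is to treat the randomness as a chance node whose value is the probability-weighted average over the next states returned by AZQuiz.all_moves. Since a wrong answer lets the same player continue, each outcome's value has to be converted to the perspective of the player who chose the action; the tuple layout below is hypothetical.

```python
def chance_node_value(outcomes, player):
    """Expected value of an action whose outcome is random.

    outcomes: list of (probability, to_play, value), where value evaluates the
    next state from the perspective of to_play, the player who moves there.
    Returns the expectation from `player`'s perspective.
    """
    expected = 0.0
    for probability, to_play, value in outcomes:
        expected += probability * (value if to_play == player else -value)
    return expected
```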

Your implementation must be capable of training and achieve at least a 90% win rate against the simple heuristic. Additionally, as part of this assignment, you should also send us on Piazza (once you pass in ReCodEx) a description of how you handle the stochasticity in MCTS; you will get points only after we finish the discussion.

pisqorky

Deadline: May 21, 22:00

5 points + 5 bonus; either this or az_quiz_randomized is required for automatically passing the exam

Train an agent on Piškvorky, usually called Gomoku internationally.

Because the game is more complex than az_quiz, you will probably have to use the C++ template pisqorky_cpp. Note that the template shares a lot of code with az_quiz_cpp; it would definitely be better to refactor it to use the BoardGame ancestor and to share the common functionality.

The C++ template also provides quite a strong heuristic; in ReCodEx, your agent is evaluated against it, and if it reaches at least 25% win rate in 100 games (50 as a starting player and 50 as a non-starting player), you get the regular points. The final competition evaluation will be performed after the deadline by a round-robin tournament.

To get regular points, you must implement an AlphaZero-style algorithm. However, any algorithm can be used in the competition.

mappo

Deadline: Jun 28, 22:00 4 points

Implement MAPPO in a multi-agent setting. Notably, solve MultiCollect (a multi-agent extension of SingleCollect) with 2 agents, implemented again by the multi_collect_environment.py module (you can watch the trained agents).

The environment is a generalization of SingleCollect. With several agents, there are as many target places as agents, and each place rewards the closest agent. Additionally, any agent colliding with others gets a negative reward, and the environment reward is the average of the agents' rewards (to keep the rewards less dependent on the number of agents). Again, the environment runs for 250 steps and is considered solved when reaching a return of at least 500.

The mappo.py PyTorch template contains a skeleton of the MAPPO algorithm implementation; a TensorFlow variant mappo.tf.py is also available. I use hyperparameter values quite similar to the ppo assignment, with the notable exception of a smaller learning_rate=3e-4, which is already specified in the template.

My implementation (with two independent networks) successfully converges in only about 50% of the cases, and trains in roughly 10-20 minutes. You can also try using a single shared network for both agents, but then you need to indicate which agent the network should operate on (because the positions of both agents are part of the state).
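If you try the shared network, one simple way to indicate the agent, sketched here as an assumption, is to append a one-hot agent identifier to the state before passing it to the network.

```python
import numpy as np

def with_agent_id(state, agent, num_agents):
    """Append a one-hot agent indicator so a shared network can tell agents apart."""
    one_hot = np.zeros(num_agents, dtype=state.dtype)
    one_hot[agent] = 1
    return np.concatenate([state, one_hot])
```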

During evaluation in ReCodEx, two different random seeds will be employed, and you need to reach the average return of 450 on all of them. Time limit for each test is 10 minutes.

memory_game

Deadline: Jun 28, 22:00 3 points

In this exercise we explore a partially observable environment. Consider a one-player variant of a memory game (pexeso), where a player repeatedly flips cards. If the player flips two cards with the same symbol in succession, the cards are removed and the player receives a reward of +2. Otherwise the player receives a reward of -1. An episode ends when all cards are removed. Note that it is valid to try to flip an already removed card.

Let there be $N$ cards in the environment, $N$ being even. There are $N+1$ actions: the actions $1..N$ flip the corresponding card, and the action 0 flips the unused card with the lowest index (or the card $N$ if all have been used already). The observations consist of a pair of discrete values (card, symbol), where the card is the index of the flipped card and the symbol is the symbol on it; the env.observation_space.nvec is the pair $[N, N/2]$, representing that there are $N$ card indices and $N/2$ symbol indices.

Every episode can be ended by at most $3N/2$ actions; since the $N/2$ matches then yield $+2$ each and the at most $N$ remaining flips yield $-1$ each, the required return is therefore greater than or equal to zero. Note that there is also an upper limit on the number of actions per episode. The described environment is provided by the memory_game_environment.py module.
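With N cards, remembering every revealed symbol is enough to finish within 3N/2 flips: each card is flipped once, and each pair needs at most one extra flip of its previously seen twin. The following self-contained sketch checks this on random layouts. It uses a toy re-implementation of the game rules (0-indexed cards, no action 0, hypothetical class and function names), not the provided memory_game_environment.py module.

```python
import random
from typing import Optional, Tuple


class ToyMemoryGame:
    """Toy version of the rules above (0-indexed cards, no action 0); not memory_game_environment.py."""

    def __init__(self, cards: int, seed: int = 0) -> None:
        assert cards > 0 and cards % 2 == 0
        self.symbols = [card // 2 for card in range(cards)]  # each of the cards // 2 symbols appears twice
        random.Random(seed).shuffle(self.symbols)
        self.removed = [False] * cards
        self.previous: Optional[int] = None  # index of the previously flipped card
        self.steps = 0

    def flip(self, card: int) -> Tuple[int, int]:
        """Flip `card`; return its symbol and the reward (+2 for a match with the previous flip, else -1)."""
        self.steps += 1
        previous, self.previous = self.previous, card
        if (previous is not None and previous != card
                and not self.removed[previous] and not self.removed[card]
                and self.symbols[previous] == self.symbols[card]):
            self.removed[previous] = self.removed[card] = True
            return self.symbols[card], 2
        return self.symbols[card], -1


def greedy_episode(env: ToyMemoryGame) -> Tuple[int, int]:
    """Flip cards in order while remembering seen symbols; return (number of actions, return)."""
    seen = {}  # symbol -> index of a card showing it that is still on the table
    total = 0
    for card in range(len(env.symbols)):
        symbol, reward = env.flip(card)  # the loop flips every card exactly once
        total += reward
        if env.removed[card]:  # matched with the immediately preceding flip
            seen.pop(symbol, None)
        elif symbol in seen:  # its twin was seen earlier, so flipping the twin now gives +2
            _, reward = env.flip(seen.pop(symbol))
            total += reward
        else:
            seen[symbol] = card
    return env.steps, total


if __name__ == "__main__":
    for cards in (8, 12, 16):
        actions, ret = greedy_episode(ToyMemoryGame(cards, seed=1))
        print(f"{cards} cards: {actions} actions (bound {3 * cards // 2}), return {ret}")
```

Because every flip yields either +2 (exactly N/2 times) or -1, the return of this strategy is always 3N/2 minus the number of actions, so it is nonnegative whenever the bound holds.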

Your goal is to solve the environment using supervised learning via the provided expert episodes and networks with external memory. The environment implements an env.expert_episode() method, which returns a fresh correct episode as a list of (state, action) pairs (with the last action being None).
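The expert episodes can be turned into supervised training data by pairing each state with the expert action taken after it, dropping the final state whose action is None. Below is a plain-Python sketch of that preprocessing plus padding for batching; the function names and the example episode are hypothetical, and the memory-augmented model itself is left to the template.

```python
from typing import Any, List, Optional, Sequence, Tuple


def split_expert_episode(
    episode: Sequence[Tuple[Any, Optional[int]]],
) -> Tuple[List[Any], List[int]]:
    """Turn [(s_0, a_0), ..., (s_T, None)] into inputs [s_0, ..., s_{T-1}] and targets [a_0, ..., a_{T-1}]."""
    assert episode and episode[-1][1] is None, "the last action of an expert episode is expected to be None"
    states = [state for state, _ in episode[:-1]]
    actions = [action for _, action in episode[:-1]]
    assert all(action is not None for action in actions)
    return states, actions


def pad_batch(sequences: Sequence[Sequence[Any]], pad_value: Any) -> Tuple[List[List[Any]], List[List[int]]]:
    """Pad variable-length episodes to a common length and return them with a 0/1 validity mask."""
    max_length = max(len(sequence) for sequence in sequences)
    padded = [list(sequence) + [pad_value] * (max_length - len(sequence)) for sequence in sequences]
    mask = [[1] * len(sequence) + [0] * (max_length - len(sequence)) for sequence in sequences]
    return padded, mask


if __name__ == "__main__":
    # A made-up episode in the described format; real ones come from env.expert_episode().
    episode = [((0, 1), 2), ((1, 0), 1), ((0, 1), None)]
    states, actions = split_expert_episode(episode)
    print(states, actions)  # [(0, 1), (1, 0)] [2, 1]
```

When several episodes are batched, the mask lets you exclude the padded steps from the loss.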

ReCodEx evaluates your solution on environments with 8, 12 and 16 cards (utilizing the --cards argument). For each number of cards, 100 episodes are simulated once you pass evaluating=True to env.reset, and your solution gets 1 point if the average return is nonnegative. You can train the agent directly in ReCodEx (the time limit is 15 minutes) or submit a pre-trained one.

The PyTorch template memory_game.py shows a possible way to use memory-augmented networks. The TensorFlow template memory_game.tf.py is also available.

memory_game_rl

Deadline: Jun 28, 22:00 5 points

This is a continuation of the memory_game assignment.

In this task, your goal is to solve the memory game environment using reinforcement learning. That is, you must not use the env.expert_episode method during training. You can start with the PyTorch template memory_game_rl.py, which extends the memory_game template by generating training episodes suitable for some reinforcement learning algorithm. The TensorFlow template memory_game_rl.tf.py is also available.
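The assignment does not prescribe an algorithm. If you choose, for example, REINFORCE (possibly with a baseline) on top of the memory network, each step needs the return from that step onward. A minimal sketch, assuming an episode is given as its list of per-step rewards; the function name and the discount factor gamma are assumptions, not part of the template.

```python
from typing import List, Sequence


def discounted_returns(rewards: Sequence[float], gamma: float = 1.0) -> List[float]:
    """Compute G_t = r_t + gamma * G_{t+1} for every step of one finished episode."""
    returns = [0.0] * len(rewards)
    running = 0.0
    for t in reversed(range(len(rewards))):
        running = rewards[t] + gamma * running
        returns[t] = running
    return returns


if __name__ == "__main__":
    # A made-up 4-card episode: two misses, a match, a miss, a match.
    print(discounted_returns([-1, -1, 2, -1, 2]))  # [1.0, 2.0, 3.0, 1.0, 2.0]
```

The returns (or returns minus a baseline) then weight the log-probabilities of the taken actions in the policy-gradient loss.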

ReCodEx evaluates your solution on environments with 4, 6 and 8 cards (utilizing the --cards argument). For each number of cards, your solution gets 2 points (1 point for 4 cards) if the average return is nonnegative. You can train the agent directly in ReCodEx (the time limit is 15 minutes) or submit a pre-trained one.

Submitting to ReCodEx

When submitting a competition solution to ReCodEx, you should submit a trained agent and a Python source capable of running it.

Furthermore, please also include the Python source and hyperparameters you used to train the submitted model. However, be careful: there must still be exactly one Python source containing a line starting with def main(.

Do not forget about the maximum allowed model size and time and memory limits.
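Because the training source is often derived from the same template, it is easy to end up with two files defining main. A hypothetical pre-submission check (not a ReCodEx tool) that scans the directory with the files you are about to submit could look like this; it applies the rule literally, counting only lines that start with def main( at the very beginning.

```python
import pathlib
import sys
from typing import List


def sources_with_main(directory: str) -> List[str]:
    """Return names of .py files in `directory` having a line that starts with `def main(`."""
    found = []
    for path in sorted(pathlib.Path(directory).glob("*.py")):
        lines = path.read_text(encoding="utf-8").splitlines()
        if any(line.startswith("def main(") for line in lines):
            found.append(path.name)
    return found


if __name__ == "__main__":
    directory = sys.argv[1] if len(sys.argv) > 1 else "."
    found = sources_with_main(directory)
    if len(found) != 1:
        sys.exit(f"Expected exactly one source with 'def main(', found {len(found)}: {found}")
    print(f"OK, the entry point is in {found[0]}")
```

If the check fails, renaming the training script's entry point (for example to a differently named function) is usually enough.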

Competition Evaluation

- Before the deadline, ReCodEx prints the exact performance of your agent, but only if it is worse than the baseline.

  If you surpass the baseline, the assignment is marked as solved in ReCodEx and you immediately get regular points for the assignment. However, ReCodEx does not print the reached performance.

- After the competition deadline, the latest submission of every user surpassing the required baseline participates in a competition. Additional bonus points are then awarded according to the ordering of the performance of the participating submissions.

- After the competition results announcement, ReCodEx starts to show the exact performance for all the already submitted solutions and also for the solutions submitted later.

What Is Allowed

- Unless stated otherwise, you can use any algorithm to solve the competition task at hand, but the implementation must be created by you and you must understand it fully. You can of course take inspiration from any paper or existing implementation, but please reference it in that case.

- PyTorch, TensorFlow, and JAX are available in ReCodEx (but there are no GPUs).

Install

Installing to central user packages repository

You can install all required packages to the central user packages repository using

python3 -m pip install --user --no-cache-dir --extra-index-url=https://download.pytorch.org/whl/cu118 torch~=2.2.0 torchaudio~=2.2.0 torchvision~=0.17.0 gymnasium==1.0.0a1 pygame~=2.5.2 ufal.pybox2d~=2.3.10.3 mujoco==3.1.1 imageio~=2.34.0

The above command installs the CUDA 11.8 PyTorch build, but you can change cu118 to:

- cpu to get the CPU-only (smaller) version,
- cu121 to get the CUDA 12.1 build,
- rocm5.7 to get the AMD ROCm 5.7 build.

Installing to a virtual environment

Python supports virtual environments, which are directories containing independent sets of installed packages. You can create a virtual environment by running

python3 -m venv VENV_DIR

followed by

VENV_DIR/bin/pip install --no-cache-dir --extra-index-url=https://download.pytorch.org/whl/cu118 torch~=2.2.0 torchaudio~=2.2.0 torchvision~=0.17.0 gymnasium==1.0.0a1 pygame~=2.5.2 ufal.pybox2d~=2.3.10.3 mujoco==3.1.1 imageio~=2.34.0

(or VENV_DIR/Scripts/pip on Windows).

Again, apart from the CUDA 11.8 build, you can change cu118 to:

- cpu to get the CPU-only (smaller) version,
- cu121 to get the CUDA 12.1 build,
- rocm5.7 to get the AMD ROCm 5.7 build.
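All the installation variants above differ only in the suffix of --extra-index-url (plus --user for the central install). If you script your setup, a hypothetical helper like the following can assemble the command; it is not part of the course repository, and the package pins are copied from the commands above.

```python
import shlex
from typing import List

# Package pins copied from the installation commands above.
PACKAGES = [
    "torch~=2.2.0", "torchaudio~=2.2.0", "torchvision~=0.17.0", "gymnasium==1.0.0a1",
    "pygame~=2.5.2", "ufal.pybox2d~=2.3.10.3", "mujoco==3.1.1", "imageio~=2.34.0",
]
# PyTorch wheel index suffixes mentioned above.
BUILDS = {"cu118": "CUDA 11.8", "cpu": "CPU-only", "cu121": "CUDA 12.1", "rocm5.7": "AMD ROCm 5.7"}


def pip_install_command(build: str = "cu118", pip: str = "python3 -m pip", user: bool = False) -> List[str]:
    """Assemble the pip install command for the chosen PyTorch build (hypothetical helper)."""
    if build not in BUILDS:
        raise ValueError(f"Unknown build {build!r}, expected one of {sorted(BUILDS)}")
    command = shlex.split(pip) + ["install"]
    if user:
        command.append("--user")
    command += ["--no-cache-dir", f"--extra-index-url=https://download.pytorch.org/whl/{build}"]
    return command + PACKAGES


if __name__ == "__main__":
    # For example, the CPU-only build inside a virtual environment:
    print(shlex.join(pip_install_command("cpu", pip="VENV_DIR/bin/pip")))
```

Returning an argument list (rather than a single string) makes the result directly usable with subprocess.run.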

Windows installation

- On Windows, it can happen that python3 is not in PATH, while the py command is; in that case you can use py -m venv VENV_DIR, which uses the newest Python available, or for example py -3.11 -m venv VENV_DIR, which uses Python version 3.11.

- If you encounter a problem creating the logs in the args.logdir directory, a possible cause is that the path is longer than 260 characters, which is the default maximum length of a complete path on Windows. However, you can increase this limit on Windows 10, version 1607 or later, by following the instructions.
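To check whether a log directory is at risk before starting a long run, you can compare the length of its absolute path against the 260-character limit. A minimal sketch; the helper is hypothetical, and the margin reserved for file names created inside the directory is an assumption you should adjust.

```python
import os

WINDOWS_DEFAULT_MAX_PATH = 260  # default maximum length of a complete path on Windows, as stated above


def path_may_be_too_long(path: str, margin: int = 0, limit: int = WINDOWS_DEFAULT_MAX_PATH) -> bool:
    """Return True if the absolute `path` plus `margin` extra characters is longer than `limit`."""
    return len(os.path.abspath(path)) + margin > limit


if __name__ == "__main__":
    logdir = os.path.join("logs", "memory_game.py-cards=8-seed=42")  # hypothetical log directory name
    # The margin of 80 characters for files created inside logdir is an arbitrary assumption.
    if path_may_be_too_long(logdir, margin=80):
        print(f"{os.path.abspath(logdir)} may exceed the default Windows path limit")
```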

GPU support on Linux and Windows

PyTorch supports NVIDIA and AMD GPUs out of the box; you just need to select the appropriate --extra-index-url when installing the packages.

If you encounter problems loading CUDA or cuDNN libraries, make sure your LD_LIBRARY_PATH does not contain paths to older CUDA/cuDNN libraries.
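To inspect the variable, you can list the entries that look like CUDA or cuDNN directories. This hypothetical helper uses only a name-based heuristic (an assumption): libraries installed under unrelated directory names will not be reported.

```python
import os
from typing import List, Optional


def cuda_like_library_dirs(ld_library_path: Optional[str] = None) -> List[str]:
    """Return LD_LIBRARY_PATH entries whose names mention CUDA or cuDNN (case-insensitive)."""
    if ld_library_path is None:
        ld_library_path = os.environ.get("LD_LIBRARY_PATH", "")
    entries = [entry for entry in ld_library_path.split(":") if entry]  # Linux uses ':' as the separator
    return [entry for entry in entries if "cuda" in entry.lower() or "cudnn" in entry.lower()]


if __name__ == "__main__":
    for entry in cuda_like_library_dirs():
        print("Check whether this directory holds an older CUDA/cuDNN:", entry)
```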

GPU support on macOS

The support for Apple Silicon GPUs in PyTorch+Keras is currently not great. Apple is working on an mlx backend for Keras, which might improve the situation in the future.

MetaCentrum

How to apply for a MetaCentrum account?

After reading the Terms and conditions, you can apply for an account here.

After your account is created, please make sure that the directories containing your solutions are always private.

How to activate Python 3.10 on MetaCentrum?

On MetaCentrum, the newest currently available Python is 3.10, which you need to activate in every session by running the following command:

module add python/python-3.10.4-intel-19.0.4-sc7snnf

How to install the required virtual environment on MetaCentrum?

To create a virtual environment, you first need to decide where it will reside. You can either find a permanent storage where you have a large-enough quota, or use scratch storage for a submitted job.

TL;DR:

- Run an interactive CPU job, asking for 16GB scratch space:

  qsub -l select=1:ncpus=1:mem=8gb:scratch_local=16gb -I

- In the job, use the allocated scratch space as the temporary directory:

  export TMPDIR=$SCRATCHDIR

- You should clear the scratch space before you exit using the clean_scratch command. You can instruct the shell to call it automatically by running:

  trap 'clean_scratch' TERM EXIT

- Finally, create the virtual environment and install PyTorch in it:

  module add python/python-3.10.4-intel-19.0.4-sc7snnf
  python3 -m venv CHOSEN_VENV_DIR
  CHOSEN_VENV_DIR/bin/pip install --no-cache-dir --upgrade pip setuptools
  CHOSEN_VENV_DIR/bin/pip install --no-cache-dir --extra-index-url=https://download.pytorch.org/whl/cu118 torch~=2.2.0 torchaudio~=2.2.0 torchvision~=0.17.0 gymnasium~=1.0.0a1 pygame~=2.5.2 ufal.pybox2d~=2.3.10.3 mujoco==3.1.1 imageio~=2.34.0

How to run a GPU computation on MetaCentrum?

First, read the official MetaCentrum documentation: Basic terms, Run simple job, GPU computing, GPU clusters.

TL;DR: To run an interactive GPU job with 1 CPU, 1 GPU, 8GB RAM, and 16GB scratch space, run:

qsub -q gpu -l select=1:ncpus=1:ngpus=1:mem=8gb:scratch_local=16gb -I

To run a script in a non-interactive way, replace the -I option with the script to be executed.

If you want to run a CPU-only computation, remove the -q gpu and ngpus=1: from the above commands.
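The GPU and CPU-only variants differ only in the queue and the ngpus= resource. If you submit many experiments from a script, a hypothetical Python helper encoding these rules might look like this; it is not a MetaCentrum tool, and its default resource values are the ones from the TL;DR above.

```python
from typing import List, Optional


def qsub_command(ngpus: int = 1, ncpus: int = 1, mem_gb: int = 8, scratch_gb: int = 16,
                 script: Optional[str] = None) -> List[str]:
    """Build the qsub command from above; ngpus=0 gives the CPU-only variant (hypothetical helper)."""
    gpus = f"ngpus={ngpus}:" if ngpus else ""
    command = ["qsub"] + (["-q", "gpu"] if ngpus else [])
    command += ["-l", f"select=1:ncpus={ncpus}:{gpus}mem={mem_gb}gb:scratch_local={scratch_gb}gb"]
    # Without a script, -I requests an interactive job; otherwise the script replaces -I.
    command.append(script if script is not None else "-I")
    return command


if __name__ == "__main__":
    print(" ".join(qsub_command()))  # qsub -q gpu -l select=1:ncpus=1:ngpus=1:mem=8gb:scratch_local=16gb -I
```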

AIC

How to install required packages on AIC?

Python 3.11.7 is available as /opt/python/3.11.7/bin/python3, so you should start by creating a virtual environment using

/opt/python/3.11.7/bin/python3 -m venv VENV_DIR

and then install the required packages in it using

VENV_DIR/bin/pip install --no-cache-dir --extra-index-url=https://download.pytorch.org/whl/cu118 torch~=2.2.0 torchaudio~=2.2.0 torchvision~=0.17.0 gymnasium~=1.0.0a1 pygame~=2.5.2 ufal.pybox2d~=2.3.10.3 mujoco==3.1.1 imageio~=2.34.0

How to run a GPU computation on AIC?

First, read the official AIC documentation: Submitting CPU Jobs, Submitting GPU Jobs.

TL;DR: To run an interactive GPU job with 1 CPU, 1 GPU, and 16GB RAM, run:

srun -p gpu -c1 -G1 --mem=16G --pty bash

To run a shell script requiring a GPU in a non-interactive way, use

sbatch -p gpu -c1 -G1 --mem=16G SCRIPT_PATH

If you want to run a CPU-only computation, remove the -p gpu and -G1 from the above commands.
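A hypothetical Python helper for the srun/sbatch commands above (not an AIC tool), where gpus=0 drops -p gpu and -G1 to get a CPU-only job, could look like this:

```python
from typing import List, Optional


def aic_command(script: Optional[str] = None, gpus: int = 1, cpus: int = 1, mem: str = "16G") -> List[str]:
    """Build the srun/sbatch command from above; gpus=0 gives the CPU-only variant (hypothetical helper)."""
    command = ["sbatch" if script is not None else "srun"]
    if gpus:
        command += ["-p", "gpu"]
    command.append(f"-c{cpus}")
    if gpus:
        command.append(f"-G{gpus}")
    command.append(f"--mem={mem}")
    # srun opens an interactive shell; sbatch submits the given shell script.
    command += [script] if script is not None else ["--pty", "bash"]
    return command


if __name__ == "__main__":
    print(" ".join(aic_command("train.sh", gpus=0)))  # sbatch -c1 --mem=16G train.sh
```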

Git


Is it possible to keep the solutions in a Git repository?

Definitely. Keeping the solutions in a branch of your repository, where you merge them with the course repository, is probably a good idea. However, please keep the cloned repository with your solutions private.


On GitHub, do not create a public fork with your solutions

If you keep your solutions in a GitHub repository, please do not create a clone of the repository by using the Fork button; a repository created this way would be public.

Of course, if you just want to create a pull request, GitHub requires a public fork and that is fine – just do not store your solutions in it.


How to clone the course repository?

To clone the course repository, run

git clone https://github.com/ufal/npfl139

This creates the repository in the npfl139 subdirectory; if you want a different name, add it as the last parameter.

To update the repository, run git pull inside the repository directory.

How to keep the course repository as a branch in your repository?

If you want to store the course repository just in a local branch of your existing repository, you can run the following commands while in it:

git remote add upstream https://github.com/ufal/npfl139
git fetch upstream
git checkout -t upstream/master

This creates a branch master; if you want a different name, add -b BRANCH_NAME to the last command.

In both cases, you can update your checkout by running git pull while in it.

How to merge the course repository with your modifications?

If you want to store your solutions in a branch merged with the course repository, you should start by

git remote add upstream https://github.com/ufal/npfl139
git pull upstream master

which creates a branch master; if you want a different name, change the last argument to master:BRANCH_NAME.

You can then commit to this branch and push it to your repository.