Deep Learning – Summer 2023/24

The objective of this course is to provide a comprehensive introduction to deep neural networks, which have consistently demonstrated superior performance across diverse domains, notably in processing and generating images, text, and speech.

The course focuses both on theory, spanning from the basics to the latest advances, and on practical implementations in Python and PyTorch (students implement and train deep neural networks performing image classification, image segmentation, object detection, part of speech tagging, lemmatization, speech recognition, reading comprehension, and image generation). Basic Python skills are required, but no previous knowledge of artificial neural networks is needed; a basic understanding of machine learning is advantageous.

Students work either individually or in small teams on weekly assignments, including competition tasks, where the goal is to obtain the highest performance in the class.

About

SIS code: NPFL138

Semester: summer

E-credits: 8

Examination: 3/4 C+Ex

Guarantor: Milan Straka

Timespace Coordinates

- lectures: Czech lecture is held on Monday 12:20 in S5, English lecture on Tuesday 12:20 in S4; first lecture is on Feb 19/20

- practicals: there are two parallel practicals, a Czech one on Wednesday 9:00 in S9, and an English one on Wednesday 10:40 in S9; first practicals are on Feb 21

- consultations: entirely optional consultations take place on Tuesday 15:40 in S4; first consultations are on Feb 27

All lectures and practicals will be recorded and available on this website.

Lectures

1. Introduction to Deep Learning Slides PDF Slides CZ Lecture CZ UniApprox EN Lecture EN UniApprox Questions numpy_entropy pca_first mnist_layers_activations

2. Training Neural Networks Slides PDF Slides CZ Lecture EN Lecture Questions sgd_backpropagation sgd_manual mnist_training gym_cartpole

3. Training Neural Networks II Slides PDF Slides CZ Lecture CZ Convergence EN Lecture EN Convergence Questions mnist_regularization mnist_ensemble uppercase

4. Convolutional Neural Networks Slides PDF Slides CZ Lecture EN Lecture Questions mnist_cnn torch_dataset mnist_multiple cifar_competition

5. Convolutional Neural Networks II Slides PDF Slides CZ Lecture EN Lecture EN Transposed Convolution Questions cnn_manual cags_classification cags_segmentation

6. Object Detection Slides PDF Slides CZ Lecture EN Lecture Questions bboxes_utils svhn_competition

7. Easter Monday 3d_recognition

8. Recurrent Neural Networks Slides PDF Slides CZ Lecture EN Lecture Questions sequence_classification tagger_we tagger_cle tagger_competition

9. Structured Prediction, CTC, Word2Vec Slides PDF Slides CZ Lecture EN Lecture Questions tensorboard_projector tagger_ner ctc_loss speech_recognition

10. Seq2seq, NMT, Transformer Slides PDF Slides CZ Lecture EN Lecture Questions lemmatizer_noattn lemmatizer_attn lemmatizer_competition

11. Transformer, BERT, ViT Slides PDF Slides CZ Lecture EN Lecture Questions tagger_transformer sentiment_analysis reading_comprehension

12. Deep Reinforcement Learning, VAE Slides PDF Slides CZ Lecture EN Lecture Questions homr_competition reinforce reinforce_baseline reinforce_pixels vae

13. Generative Adversarial Networks, Diffusion Models Slides PDF Slides CZ Lecture CZ Stable Diffusion, Score-based Models EN Lecture Questions gan dcgan ddim ddim_attention ddim_conditional

14. Speech Synthesis, External Memory, Meta-Learning Slides PDF Slides CZ Lecture EN Lecture Questions learning_to_learn

License

Unless otherwise stated, teaching materials for this course are available under CC BY-SA 4.0.

The lecture content, including references to study materials, is listed below. The main study material is the Deep Learning Book by Ian Goodfellow, Yoshua Bengio and Aaron Courville (referred to as DLB).

References to study materials cover all theory required for the exam, and sometimes even more – the references in italics cover topics not required for the exam.

1. Introduction to Deep Learning

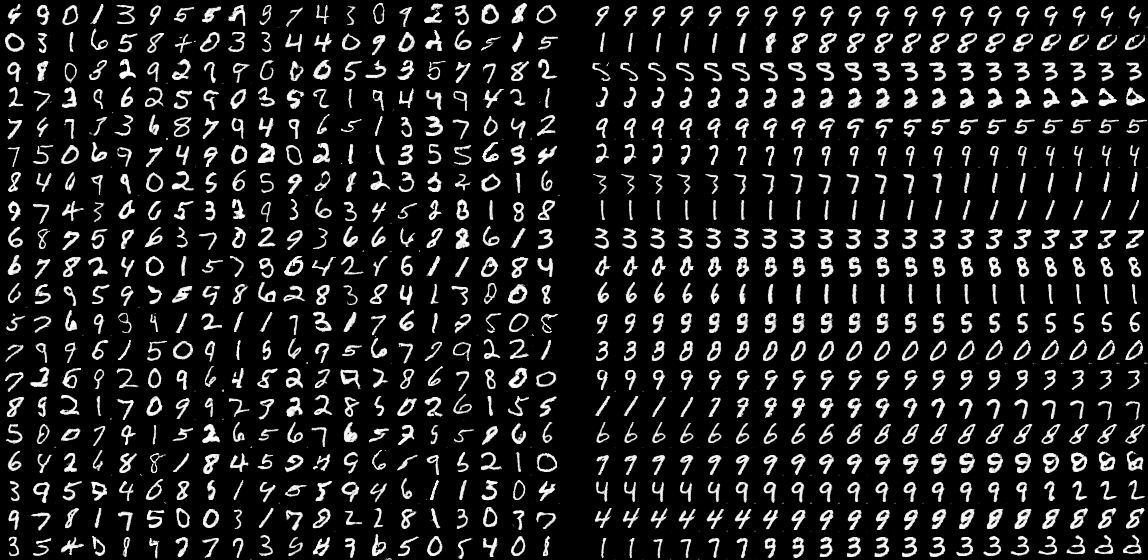

Feb 19 Slides PDF Slides CZ Lecture CZ UniApprox EN Lecture EN UniApprox Questions numpy_entropy pca_first mnist_layers_activations

- Random variables, probability distributions, expectation, variance, Bernoulli distribution, Categorical distribution [Sections 3.2, 3.3, 3.8, 3.9.1 and 3.9.2 of DLB]

- Self-information, entropy, cross-entropy, KL-divergence [Section 3.13 of DLB]

- Gaussian distribution [Section 3.9.3 of DLB]

- Machine Learning Basics [Sections 5.1-5.1.3 of DLB]

- History of Deep Learning [Section 1.2 of DLB]

- Linear regression [Section 5.1.4 of DLB]

- Challenges Motivating Deep Learning [Section 5.11 of DLB]

- Neural network basics

- Neural networks as graphs [Chapter 6 before Section 6.1 of DLB]

- Output activation functions [Section 6.2.2 of DLB, excluding Section 6.2.2.4]

- Hidden activation functions [Section 6.3 of DLB, excluding Section 6.3.3]

- Basic network architectures [Section 6.4 of DLB, excluding Section 6.4.2]

- Universal approximation theorem
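As a small illustration of the information-theoretic quantities above (entropy, cross-entropy and KL-divergence), the following NumPy sketch computes them in nats for two discrete distributions given as probability vectors over the same outcomes. The function names are illustrative only and do not come from any course template; outcomes with zero probability under P are skipped, following the convention 0 log 0 = 0.

```python
import numpy as np


def entropy(p: np.ndarray) -> float:
    """H(P) = -sum_x P(x) log P(x) in nats; outcomes with P(x) = 0 contribute 0."""
    p = p[p > 0]
    return float(-np.sum(p * np.log(p)))


def cross_entropy(p: np.ndarray, q: np.ndarray) -> float:
    """H(P, Q) = -sum_x P(x) log Q(x) in nats; infinite if Q(x) = 0 where P(x) > 0."""
    support = p > 0
    if np.any(q[support] == 0):
        return float("inf")
    return float(-np.sum(p[support] * np.log(q[support])))


def kl_divergence(p: np.ndarray, q: np.ndarray) -> float:
    """D_KL(P || Q) = H(P, Q) - H(P); infinite whenever the cross-entropy is."""
    return cross_entropy(p, q) - entropy(p)


p = np.array([0.5, 0.25, 0.25])
q = np.array([0.25, 0.5, 0.25])
print(f"{entropy(p):.2f} {cross_entropy(p, q):.2f} {kl_divergence(p, q):.2f}")  # prints: 1.04 1.21 0.17
```

For these two distributions, H(P) = 1.5 ln 2 and H(P, Q) = 1.75 ln 2, so the KL-divergence is exactly 0.25 ln 2, which illustrates that it is the extra cost of encoding P with a code optimized for Q.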

2. Training Neural Networks

Feb 26 Slides PDF Slides CZ Lecture EN Lecture Questions sgd_backpropagation sgd_manual mnist_training gym_cartpole

- Capacity, overfitting, underfitting, regularization [Section 5.2 of DLB]

- Hyperparameters and validation sets [Section 5.3 of DLB]

- Maximum Likelihood Estimation [Section 5.5 of DLB]

- Neural network training

- Gradient Descent and Stochastic Gradient Descent [Sections 4.3 and 5.9 of DLB]

- Backpropagation algorithm [Sections 6.5 to 6.5.3 of DLB, especially Algorithms 6.1 and 6.2; note that Algorithms 6.5 and 6.6 are used in practice]

- SGD algorithm [Section 8.3.1 and Algorithm 8.1 of DLB]

- SGD with Momentum algorithm [Section 8.3.2 and Algorithm 8.2 of DLB]

- SGD with Nesterov Momentum algorithm [Section 8.3.3 and Algorithm 8.3 of DLB]

- Optimization algorithms with adaptive gradients

- AdaGrad algorithm [Section 8.5.1 and Algorithm 8.4 of DLB]

- RMSProp algorithm [Section 8.5.2 and Algorithm 8.5 of DLB]

- Adam algorithm [Section 8.5.3 and Algorithm 8.7 of DLB]
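To make the update rules above concrete, here is a minimal NumPy sketch of SGD with momentum and of Adam (following the structure of Algorithm 8.7 of DLB: first and second moment estimates with bias correction), applied to the toy objective f(θ) = Σ(θ − 3)². The objective, the hyperparameter values and the function names are assumptions chosen purely for illustration.

```python
import numpy as np


def grad(theta: np.ndarray) -> np.ndarray:
    """Gradient of the toy objective f(theta) = sum((theta - 3)^2)."""
    return 2.0 * (theta - 3.0)


def sgd_momentum(theta, learning_rate=0.1, momentum=0.9, steps=500):
    velocity = np.zeros_like(theta)
    for _ in range(steps):
        # v <- alpha * v - epsilon * g;  theta <- theta + v
        velocity = momentum * velocity - learning_rate * grad(theta)
        theta = theta + velocity
    return theta


def adam(theta, learning_rate=0.1, beta1=0.9, beta2=0.999, delta=1e-8, steps=500):
    s = np.zeros_like(theta)  # first moment estimate
    r = np.zeros_like(theta)  # second (uncentered) moment estimate
    for t in range(1, steps + 1):
        g = grad(theta)
        s = beta1 * s + (1 - beta1) * g
        r = beta2 * r + (1 - beta2) * g * g
        s_hat = s / (1 - beta1 ** t)  # bias correction of both moments
        r_hat = r / (1 - beta2 ** t)
        theta = theta - learning_rate * s_hat / (np.sqrt(r_hat) + delta)
    return theta


start = np.array([0.0])
print(sgd_momentum(start), adam(start))
```

Note that at the first Adam step the bias-corrected ratio s_hat / sqrt(r_hat) has magnitude 1, so the initial step size is roughly the learning rate regardless of the gradient scale.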

3. Training Neural Networks II

Mar 4 Slides PDF Slides CZ Lecture CZ Convergence EN Lecture EN Convergence Questions mnist_regularization mnist_ensemble uppercase

- Softmax with NLL (negative log likelihood) as a loss function [Section 6.2.2.3 of DLB, notably equation (6.30); plus slides 10-12]

- Regularization [Chapter 7 until Section 7.1 of DLB]

- Early stopping [Section 7.8 of DLB, without the "How early stopping acts as a regularizer" part]

- L2 and L1 regularization [Sections 7.1 and 5.6.1 of DLB; plus slides 17-18]

- Dataset augmentation [Section 7.4 of DLB]

- Ensembling [Section 7.11 of DLB]

- Dropout [Section 7.12 of DLB]

- Label smoothing [Section 7.5.1 of DLB]

- Saturating non-linearities [Section 6.3.2 and second half of Section 6.2.2.2 of DLB]

- Parameter initialization strategies [Section 8.4 of DLB]

- Gradient clipping [Section 10.11.1 of DLB]
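The softmax-with-NLL loss listed above has a particularly simple gradient with respect to the logits, softmax(z) − one_hot(y). The following NumPy sketch computes the loss in a numerically stable way (subtracting the maximum logit before exponentiating) together with this gradient averaged over a batch; the helper names are hypothetical.

```python
import numpy as np


def log_softmax(logits: np.ndarray) -> np.ndarray:
    # Subtracting the row maximum does not change the result but avoids overflow in exp.
    shifted = logits - logits.max(axis=-1, keepdims=True)
    return shifted - np.log(np.exp(shifted).sum(axis=-1, keepdims=True))


def nll_loss(logits: np.ndarray, gold: np.ndarray) -> float:
    """Mean negative log-likelihood of the gold classes under softmax(logits)."""
    return float(-log_softmax(logits)[np.arange(len(gold)), gold].mean())


def nll_grad(logits: np.ndarray, gold: np.ndarray) -> np.ndarray:
    """Gradient of nll_loss w.r.t. the logits: (softmax(logits) - one_hot(gold)) / batch_size."""
    grad = np.exp(log_softmax(logits))
    grad[np.arange(len(gold)), gold] -= 1.0
    return grad / len(gold)
```

Comparing nll_grad against a central finite-difference approximation of nll_loss is a quick way to verify such a derivation.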

4. Convolutional Neural Networks

Mar 11 Slides PDF Slides CZ Lecture EN Lecture Questions mnist_cnn torch_dataset mnist_multiple cifar_competition

- Introduction to convolutional networks [Chapter 9 and Sections 9.1-9.3 of DLB]

- Convolution as operation on 4D tensors [Section 9.5 of DLB, notably Equations (9.7) and (9.8)]

- Max pooling and average pooling [Section 9.3 of DLB]

- Stride and Padding schemes [Section 9.5 of DLB]

- AlexNet [ImageNet Classification with Deep Convolutional Neural Networks]

- VGG [Very Deep Convolutional Networks for Large-Scale Image Recognition]

- GoogLeNet (aka Inception) [Going Deeper with Convolutions]

- Batch normalization [Section 8.7.1 of DLB, optionally the paper Batch Normalization: Accelerating Deep Network Training by Reducing Internal Covariate Shift]

- Inception v2 and v3 [Rethinking the Inception Architecture for Computer Vision]

- ResNet [Deep Residual Learning for Image Recognition]
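As a sketch of convolution viewed as an operation on 4D tensors, the following naive NumPy implementation computes a strided convolution without padding ("valid" padding), so each spatial output size is floor((H - kH) / stride) + 1. Like deep learning frameworks, it actually computes cross-correlation (the kernel is not flipped). The channels-last layout (N, H, W, C) and the function name are assumptions for illustration; real implementations are far more efficient.

```python
import numpy as np


def conv2d(x: np.ndarray, kernel: np.ndarray, stride: int = 1) -> np.ndarray:
    """Naive 'valid' convolution.

    x: (N, H, W, C_in), kernel: (kH, kW, C_in, C_out) -> (N, H_out, W_out, C_out)
    """
    n, h, w, c_in = x.shape
    kh, kw, k_in, c_out = kernel.shape
    assert c_in == k_in, "input channels must match the kernel"
    h_out = (h - kh) // stride + 1
    w_out = (w - kw) // stride + 1
    out = np.zeros((n, h_out, w_out, c_out))
    for i in range(h_out):
        for j in range(w_out):
            # Patch of shape (N, kH, kW, C_in) covered by the kernel at this position.
            patch = x[:, i * stride:i * stride + kh, j * stride:j * stride + kw, :]
            # Sum over kH, kW and C_in, leaving (N, C_out).
            out[:, i, j, :] = np.tensordot(patch, kernel, axes=([1, 2, 3], [0, 1, 2]))
    return out


images = np.random.default_rng(0).normal(size=(1, 28, 28, 1))
kernel = np.random.default_rng(1).normal(size=(3, 3, 1, 8))
print(conv2d(images, kernel, stride=2).shape)  # (1, 13, 13, 8)
```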

5. Convolutional Neural Networks II

Mar 18 Slides PDF Slides CZ Lecture EN Lecture EN Transposed Convolution Questions cnn_manual cags_classification cags_segmentation

- Residual CNN Networks

- ResNet [Deep Residual Learning for Image Recognition]

- WideNet [Wide Residual Networks]

- DenseNet [Densely Connected Convolutional Networks]

- PyramidNet [Deep Pyramidal Residual Networks]

- ResNeXt [Aggregated Residual Transformations for Deep Neural Networks]

- Regularizing CNN Networks

- SENet [Squeeze-and-Excitation Networks]

- EfficientNet [EfficientNet: Rethinking Model Scaling for Convolutional Neural Networks]

- EfficientNetV2 [EfficientNetV2: Smaller Models and Faster Training]

- Transposed convolution

- U-Net [U-Net: Convolutional Networks for Biomedical Image Segmentation]
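One way to understand transposed convolution is as the transpose (adjoint) of a strided convolution: each input element scatters a scaled copy of the kernel into the output, giving an output length of (L − 1)·stride + k. The 1D NumPy sketch below assumes a single channel and no padding; the function names are hypothetical.

```python
import numpy as np


def conv1d(x: np.ndarray, w: np.ndarray, stride: int = 1) -> np.ndarray:
    """Valid 1D convolution (cross-correlation) with the given stride."""
    k = len(w)
    out_len = (len(x) - k) // stride + 1
    return np.array([x[i * stride:i * stride + k] @ w for i in range(out_len)])


def conv_transpose1d(y: np.ndarray, w: np.ndarray, stride: int = 1) -> np.ndarray:
    """Transposed convolution: every input value adds value * w into the output."""
    k = len(w)
    out = np.zeros((len(y) - 1) * stride + k)
    for i, value in enumerate(y):
        out[i * stride:i * stride + k] += value * w  # overlapping copies are summed
    return out


print(conv_transpose1d(np.array([1.0, 2.0]), np.array([1.0, 1.0, 1.0]), stride=2))
# [1. 1. 3. 2. 2.]
```

Because the two functions are transposes of each other, the inner product of conv1d(x) with y equals the inner product of x with conv_transpose1d(y) whenever the shapes match, which is an easy property to check.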

6. Object Detection

Mar 25 Slides PDF Slides CZ Lecture EN Lecture Questions bboxes_utils svhn_competition

- R-CNN [R-CNN]

- Fast R-CNN [Fast R-CNN]

- Proposing RoIs using Faster R-CNN [Faster R-CNN: Towards Real-Time Object Detection with Region Proposal Networks]

- Mask R-CNN [Mask R-CNN]

- Feature Pyramid Networks [Feature Pyramid Networks for Object Detection]

- Focal Loss, RetinaNet [Focal Loss for Dense Object Detection]

- EfficientDet [EfficientDet: Scalable and Efficient Object Detection]

- Group Normalization [Group Normalization]
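The detection methods above commonly rely on the intersection over union (IoU) of two bounding boxes, for example to match proposals or anchors with gold boxes. A minimal Python sketch follows; the (top, left, bottom, right) coordinate order is an assumption of this example.

```python
def iou(box_a, box_b):
    """Intersection over union of two boxes given as (top, left, bottom, right)."""
    top, left = max(box_a[0], box_b[0]), max(box_a[1], box_b[1])
    bottom, right = min(box_a[2], box_b[2]), min(box_a[3], box_b[3])
    # Non-overlapping boxes give a negative height or width; clamp it to zero.
    intersection = max(0.0, bottom - top) * max(0.0, right - left)
    area_a = (box_a[2] - box_a[0]) * (box_a[3] - box_a[1])
    area_b = (box_b[2] - box_b[0]) * (box_b[3] - box_b[1])
    return intersection / (area_a + area_b - intersection)


print(iou((0, 0, 2, 2), (1, 1, 3, 3)))  # 1 / (4 + 4 - 1) = 1/7
```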

7. Easter Monday

Apr 1 3d_recognition

8. Recurrent Neural Networks

Apr 8 Slides PDF Slides CZ Lecture EN Lecture Questions sequence_classification tagger_we tagger_cle tagger_competition

- Sequence modelling using Recurrent Neural Networks (RNN) [Chapter 10 until Section 10.2.1 (excluding) of DLB]

- The challenge of long-term dependencies [Section 10.7 of DLB]

- Long Short-Term Memory (LSTM) [Section 10.10.1 of DLB, Sepp Hochreiter, Jürgen Schmidhuber (1997): Long short-term memory, Felix A. Gers, Jürgen Schmidhuber, Fred Cummins (2000): Learning to Forget: Continual Prediction with LSTM]

- Gated Recurrent Unit (GRU) [Section 10.10.2 of DLB, Kyunghyun Cho, Bart van Merrienboer, Caglar Gulcehre, Dzmitry Bahdanau, Fethi Bougares, Holger Schwenk, Yoshua Bengio: Learning Phrase Representations using RNN Encoder-Decoder for Statistical Machine Translation]

- Highway Networks [Training Very Deep Networks]

- RNN Regularization

- Variational Dropout [A Theoretically Grounded Application of Dropout in Recurrent Neural Networks]

- Layer Normalization [Layer Normalization]

- Bidirectional RNN [Section 10.3 of DLB]

- Word Embeddings [Section 14.2.4 of DLB]

- Character-level embeddings using Recurrent neural networks [C2W model from Finding Function in Form: Compositional Character Models for Open Vocabulary Word Representation]

- Character-level embeddings using Convolutional neural networks [CharCNN from Character-Aware Neural Language Models]
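The LSTM cell listed above can be written as a single step function. This NumPy sketch follows the common formulation with input, forget and output gates and a tanh candidate (the forget-gate variant of Gers et al.); packing the four gates into one weight matrix, and their order, are arbitrary choices made here.

```python
import numpy as np


def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))


def lstm_step(x, h, c, W, U, b):
    """One LSTM step.

    x: (D,), h and c: (H,), W: (D, 4H), U: (H, 4H), b: (4H,).
    Gate order assumed here: [input, forget, candidate, output].
    """
    z = x @ W + h @ U + b
    i, f, g, o = np.split(z, 4)
    i, f, o = sigmoid(i), sigmoid(f), sigmoid(o)
    g = np.tanh(g)
    c_new = f * c + i * g           # forget part of the old memory, write new content
    h_new = o * np.tanh(c_new)      # expose a gated view of the memory
    return h_new, c_new
```

With the forget gate saturated open and the input gate closed, the memory cell passes through unchanged, which is the mechanism that lets gradients flow across many time steps.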

9. Structured Prediction, CTC, Word2Vec

Apr 15 Slides PDF Slides CZ Lecture EN Lecture Questions tensorboard_projector tagger_ner ctc_loss speech_recognition

- Structured prediction

- Connectionist Temporal Classification (CTC) loss [Connectionist Temporal Classification: Labelling Unsegmented Sequence Data with Recurrent Neural Networks]

- Word2vec word embeddings, notably the CBOW and Skip-gram architectures [Efficient Estimation of Word Representations in Vector Space]

- Hierarchical softmax [Section 12.4.3.2 of DLB or Distributed Representations of Words and Phrases and their Compositionality]

- Negative sampling [Distributed Representations of Words and Phrases and their Compositionality]

- Character-level embeddings using character n-grams [Described simultaneously in several papers as Charagram (Charagram: Embedding Words and Sentences via Character n-grams), Subword Information (Enriching Word Vectors with Subword Information) or SubGram (SubGram: Extending Skip-Gram Word Representation with Substrings)]

- ELMo [Matthew E. Peters, Mark Neumann, Mohit Iyyer, Matt Gardner, Christopher Clark, Kenton Lee, Luke Zettlemoyer: Deep contextualized word representations]
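To illustrate the CTC loss listed above, the sketch below implements the forward (alpha) recursion over the target sequence extended with blanks, plus a brute-force check that sums the probabilities of all frame-level paths collapsing to the target (merge repeated symbols, then drop blanks). It uses plain probabilities for readability; practical implementations work in log space to avoid underflow. Blank index 0 and all names are assumptions.

```python
import itertools

import numpy as np


def ctc_collapse(path, blank=0):
    """Merge repeated symbols, then remove blanks: e.g. [1, 1, 0, 1, 2, 2] -> [1, 1, 2]."""
    result, previous = [], None
    for symbol in path:
        if symbol != previous and symbol != blank:
            result.append(symbol)
        previous = symbol
    return result


def ctc_probability(probs, target, blank=0):
    """p(target | input) via the CTC forward recursion; probs has shape (T, vocabulary)."""
    extended = [blank]
    for label in target:
        extended += [label, blank]
    T, S = probs.shape[0], len(extended)
    alpha = np.zeros((T, S))
    alpha[0, 0] = probs[0, extended[0]]
    if S > 1:
        alpha[0, 1] = probs[0, extended[1]]
    for t in range(1, T):
        for s in range(S):
            total = alpha[t - 1, s]
            if s >= 1:
                total += alpha[t - 1, s - 1]
            # Skipping the blank in between is allowed only between different labels.
            if s >= 2 and extended[s] != blank and extended[s] != extended[s - 2]:
                total += alpha[t - 1, s - 2]
            alpha[t, s] = total * probs[t, extended[s]]
    # A valid path ends either in the last label or in the trailing blank.
    return alpha[T - 1, S - 1] + (alpha[T - 1, S - 2] if S > 1 else 0.0)


def brute_force_probability(probs, target, blank=0):
    """Sum over all frame-level paths collapsing to target (exponential; tiny inputs only)."""
    T, V = probs.shape
    return sum(
        np.prod([probs[t, symbol] for t, symbol in enumerate(path)])
        for path in itertools.product(range(V), repeat=T)
        if ctc_collapse(path, blank) == list(target)
    )


rng = np.random.default_rng(0)
probs = rng.random((4, 3))
probs /= probs.sum(axis=1, keepdims=True)
print(-np.log(ctc_probability(probs, [1, 2])))  # CTC loss for target [1, 2]
```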

10. Seq2seq, NMT, Transformer

Apr 22 Slides PDF Slides CZ Lecture EN Lecture Questions lemmatizer_noattn lemmatizer_attn lemmatizer_competition

- Neural Machine Translation using Encoder-Decoder or Sequence-to-Sequence architecture [Section 12.5.4 of DLB, Ilya Sutskever, Oriol Vinyals, Quoc V. Le: Sequence to Sequence Learning with Neural Networks and Kyunghyun Cho et al.: Learning Phrase Representations using RNN Encoder-Decoder for Statistical Machine Translation]

- Using Attention mechanism in Neural Machine Translation [Section 12.4.5.1 of DLB, Dzmitry Bahdanau, Kyunghyun Cho, Yoshua Bengio: Neural Machine Translation by Jointly Learning to Align and Translate]

- Translating Subword Units [Rico Sennrich, Barry Haddow, Alexandra Birch: Neural Machine Translation of Rare Words with Subword Units]

- Google NMT [Yonghui Wu et al.: Google's Neural Machine Translation System: Bridging the Gap between Human and Machine Translation]

- Transformer architecture [Attention Is All You Need]

11. Transformer, BERT, ViT

Apr 29 Slides PDF Slides CZ Lecture EN Lecture Questions tagger_transformer sentiment_analysis reading_comprehension

- Transformer architecture [Attention Is All You Need]

- BERT [BERT: Pre-training of Deep Bidirectional Transformers for Language Understanding]

- RoBERTa [RoBERTa: A Robustly Optimized BERT Pretraining Approach]

- ViT [An Image is Worth 16x16 Words: Transformers for Image Recognition at Scale]

- MAE [Masked Autoencoders Are Scalable Vision Learners]

- DETR [End-to-End Object Detection with Transformers]
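The core operation of the Transformer is scaled dot-product attention, softmax(QKᵀ/√d)V. A minimal single-head NumPy sketch with an optional boolean mask (True meaning a position may be attended to) follows; multi-head projections and batching are omitted, and the names are hypothetical.

```python
import numpy as np


def scaled_dot_product_attention(queries, keys, values, mask=None):
    """Single-head attention: softmax(Q K^T / sqrt(d)) V.

    queries: (T_q, d), keys: (T_k, d), values: (T_k, d_v);
    mask: optional boolean (T_q, T_k) array, True where attending is allowed.
    """
    scores = queries @ keys.T / np.sqrt(queries.shape[-1])
    if mask is not None:
        scores = np.where(mask, scores, -np.inf)
    scores = scores - scores.max(axis=-1, keepdims=True)  # numerical stability
    weights = np.exp(scores)
    weights /= weights.sum(axis=-1, keepdims=True)
    return weights @ values, weights
```

A row whose positions are all masked would produce NaNs in this sketch; a causal mask (np.tril of ones) always keeps the diagonal, so it is safe to use here.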

12. Deep Reinforcement Learning, VAE

May 6 Slides PDF Slides CZ Lecture EN Lecture Questions homr_competition reinforce reinforce_baseline reinforce_pixels vae

The study material for Reinforcement Learning is Reinforcement Learning: An Introduction, second edition, by Richard S. Sutton and Andrew G. Barto (referred to as RLB), available online.

- Multi-armed bandits [Sections 2-2.4 of RLB]

- Markov Decision Process [Sections 3-3.3 of RLB]

- Policies and Value Functions [Section 3.5 of RLB]

- Policy Gradient Methods [Sections 13-13.1 of RLB]

- Policy Gradient Theorem [Section 13.2 of RLB]

- REINFORCE algorithm [Section 13.3 of RLB]

- REINFORCE with baseline algorithm [Section 13.4 of RLB]

- Autoencoders (undercomplete, sparse, denoising) [Chapter 14, Sections 14-14.2.3 of DLB]

- Deep Generative Models using Differentiable Generator Nets [Section 20.10.2 of DLB]

- Variational Autoencoders [Section 20.10.3 plus Reparametrization trick from Section 20.9 (but not Section 20.9.1) of DLB, Auto-Encoding Variational Bayes]
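For the variational autoencoder, two pieces are easy to get wrong: the reparametrization trick and the KL term against the standard normal prior, KL = ½ Σ (μ² + σ² − 1 − log σ²). The NumPy sketch below assumes a diagonal Gaussian posterior parametrized by its mean and log-variance (a common but not mandatory choice); the function names are hypothetical.

```python
import numpy as np


def reparameterize(mean, log_variance, rng):
    """Sample z ~ N(mean, exp(log_variance)) as a deterministic function of mean,
    log_variance and independent noise, so gradients can flow to both parameters."""
    epsilon = rng.standard_normal(np.shape(mean))
    return mean + np.exp(0.5 * log_variance) * epsilon


def kl_to_standard_normal(mean, log_variance):
    """KL(N(mean, diag(exp(log_variance))) || N(0, I)), summed over the last axis."""
    return 0.5 * np.sum(mean**2 + np.exp(log_variance) - 1.0 - log_variance, axis=-1)
```

The closed-form KL term can be sanity-checked against a Monte Carlo average of log q(z) − log p(z) over reparametrized samples.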

13. Generative Adversarial Networks, Diffusion Models

May 13 Slides PDF Slides CZ Lecture CZ Stable Diffusion, Score-based Models EN Lecture Questions gan dcgan ddim ddim_attention ddim_conditional

- Generative Adversarial Networks

- GAN [Section 20.10.4 of DLB, Generative Adversarial Networks]

- CGAN [Conditional Generative Adversarial Nets]

- DCGAN [Unsupervised Representation Learning with Deep Convolutional Generative Adversarial Networks]

- Diffusion Models

14. Speech Synthesis, External Memory, Meta-Learning

May 20 Slides PDF Slides CZ Lecture EN Lecture Questions learning_to_learn

- WaveNet [WaveNet: A Generative Model for Raw Audio]

- Parallel WaveNet [Parallel WaveNet: Fast High-Fidelity Speech Synthesis]

- Full speech synthesis pipeline Tacotron 2 [Natural TTS Synthesis by Conditioning WaveNet on Mel Spectrogram Predictions]

- Neural Turing Machine [Neural Turing Machines]

- Memory Augmented Neural Networks [One-shot learning with Memory-Augmented Neural Networks]

- Differentiable Neural Computer [Hybrid computing using a neural network with dynamic external memory]

- Token Turing Machine [Token Turing Machines]

Requirements

To pass the practicals, you need to obtain at least 80 points, excluding the bonus points. Note that all surplus points (both bonus and non-bonus) will be transferred to the exam. In total, assignments for at least 120 points (not including the bonus points) will be available, and if you solve all the assignments (any non-zero amount of points counts as solved), you automatically pass the exam with grade 1.

Environment

The tasks are evaluated automatically using the ReCodEx Code Examiner.

The evaluation is performed using Python 3.11, Keras 3.0.5, PyTorch 2.2.0, HF Transformers 4.37.2, and Gymnasium 1.0.0a. You should install the exact versions of these packages yourselves.

Teamwork

Solving assignments in teams (of size at most 3) is encouraged, but everyone has to participate (it is forbidden not to work on an assignment and then submit a solution created by other team members). All members of the team must submit in ReCodEx individually, but can have exactly the same sources/models/results. Each such solution must explicitly list all members of the team to allow plagiarism detection using this template.

No Cheating

Cheating is strictly prohibited and any student found cheating will be punished. The punishment can involve failing the whole course, or, in grave cases, being expelled from the faculty. While discussing assignments with any classmate is fine, each team must complete the assignments themselves, without using code they did not write (unless explicitly allowed). Of course, inside a team you are allowed to share code and submit identical solutions. Note that all students involved in cheating will be punished, so if you share your source code with a friend, both you and your friend will be punished. That also means that you should never publish your solutions.

numpy_entropy

Deadline: Mar 05, 22:00 3 points

The goal of this exercise is to familiarize yourself with Python, NumPy and the ReCodEx submission system. Start with the numpy_entropy.py template.

Load a file specified in args.data_path, whose lines consist of data points of our dataset, and load a file specified in args.model_path, which describes a model probability distribution, with each line being a tab-separated pair of (data point, probability).

Then compute the following quantities using NumPy, and print each of them on a separate line rounded to two decimal places (or inf for positive infinity, which happens when an element of the data distribution has zero probability under the model distribution):

- entropy H(data distribution)

- cross-entropy H(data distribution, model distribution)

- KL-divergence D_KL(data distribution, model distribution)

Use natural logarithms to compute the entropies and the divergence.

python3 numpy_entropy.py --data_path numpy_entropy_data_1.txt --model_path numpy_entropy_model_1.txt

Entropy: 0.96 nats

Crossentropy: 0.99 nats

KL divergence: 0.03 nats

python3 numpy_entropy.py --data_path numpy_entropy_data_2.txt --model_path numpy_entropy_model_2.txt

Entropy: 0.96 nats

Crossentropy: inf nats

KL divergence: inf nats

- The last three tests use data available only in ReCodEx. They are analogous to numpy_entropy_data_3.txt/numpy_entropy_model_3.txt and numpy_entropy_data_4.txt/numpy_entropy_model_4.txt, but are generated with different random seeds.

Note that your results may be slightly different, depending on your CPU type and whether you use a GPU.

python3 numpy_entropy.py --data_path numpy_entropy_data_1.txt --model_path numpy_entropy_model_1.txt

Entropy: 0.96 nats

Crossentropy: 0.99 nats

KL divergence: 0.03 nats

python3 numpy_entropy.py --data_path numpy_entropy_data_2.txt --model_path numpy_entropy_model_2.txt

Entropy: 0.96 nats

Crossentropy: inf nats

KL divergence: inf nats

python3 numpy_entropy.py --data_path numpy_entropy_data_3.txt --model_path numpy_entropy_model_3.txt

Entropy: 4.15 nats

Crossentropy: 4.23 nats

KL divergence: 0.08 nats

python3 numpy_entropy.py --data_path numpy_entropy_data_4.txt --model_path numpy_entropy_model_4.txt

Entropy: 4.99 nats

Crossentropy: 5.03 nats

KL divergence: 0.04 nats

pca_first

Deadline: Mar 05, 22:00 2 points

The goal of this exercise is to familiarize yourself with PyTorch torch.Tensors, shapes and basic tensor manipulation methods. Start with the pca_first.py template (you will also need the mnist.py module).

Alternatively, you can instead use the pca_first.keras.py template, which uses backend-agnostic keras.ops operations instead of PyTorch operations – both templates can be used to solve the assignment.

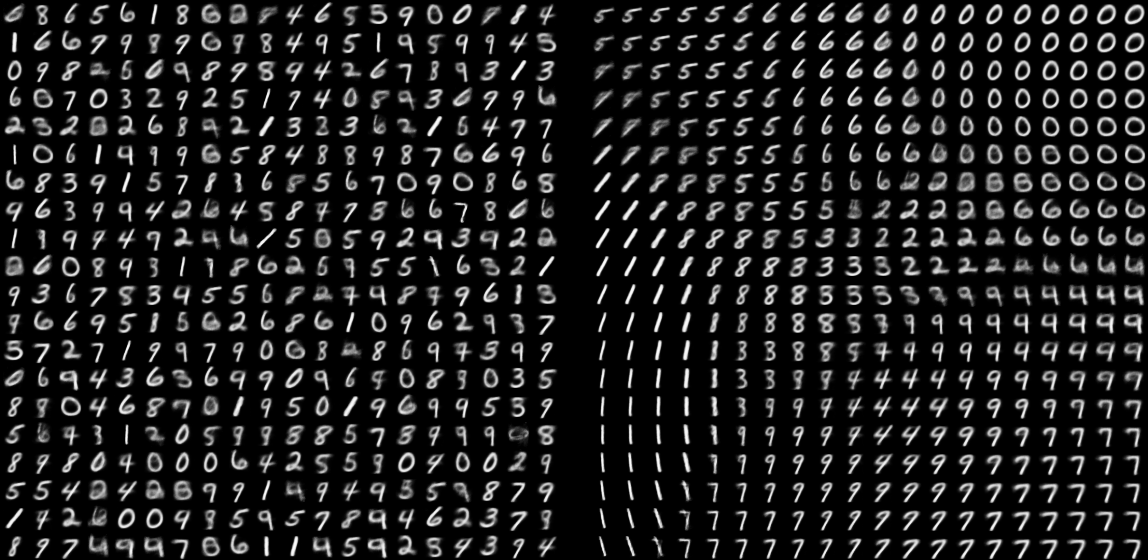

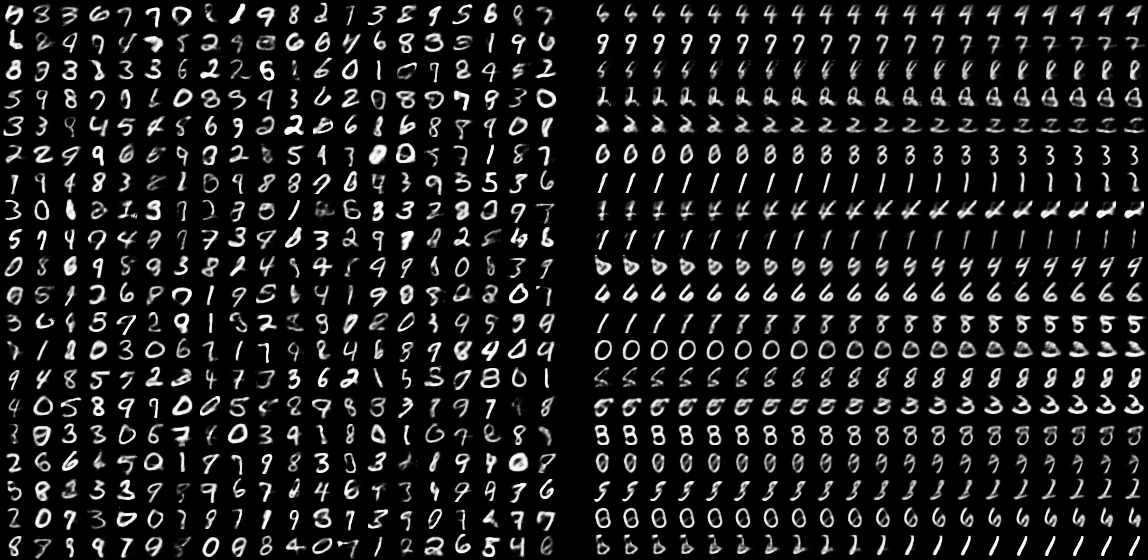

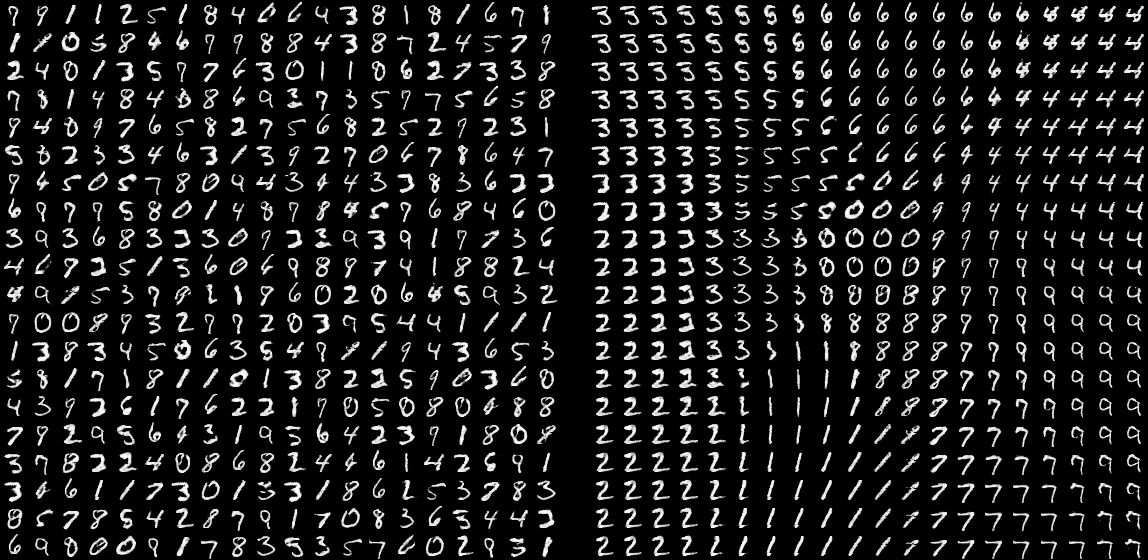

In this assignment, you should compute the covariance matrix of several examples from the MNIST dataset, then compute the first principal component, and quantify how much of the total variance it explains. It is fine if you are not familiar with terms like covariance matrix or principal component – the template contains a detailed description of what you have to do.
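For orientation only, the sketch below shows the general idea of power iteration for the first principal component, using NumPy rather than the template's PyTorch or keras.ops operations. It is not the procedure prescribed by the template: the covariance normalization (dividing by N), the all-ones initial vector and the function name are assumptions. The explained variance is the variance along the component (its eigenvalue) divided by the total variance (the trace of the covariance matrix).

```python
import numpy as np


def first_principal_component(data: np.ndarray, iterations: int = 64):
    """Power iteration on the covariance matrix of data with shape (N, D)."""
    centered = data - data.mean(axis=0)
    covariance = centered.T @ centered / data.shape[0]
    component = np.ones(covariance.shape[0]) / np.sqrt(covariance.shape[0])
    for _ in range(iterations):
        # Repeated multiplication amplifies the direction of the largest eigenvalue.
        component = covariance @ component
        component /= np.linalg.norm(component)
    eigenvalue = component @ covariance @ component  # variance along the component
    total_variance = np.trace(covariance)
    return component, eigenvalue, eigenvalue / total_variance
```

On synthetic data whose two coordinates have standard deviations 3 and 1, the component should point (up to sign) along the first axis and explain roughly 9 / (9 + 1) = 90% of the variance.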

Finally, you might want to read the Introduction to PyTorch Tensors.

Note that your results may be slightly different, depending on your CPU type and whether you use a GPU.

python3 pca_first.py --examples=1024 --iterations=64

Total variance: 53.12

Explained variance: 9.64%

python3 pca_first.py --examples=8192 --iterations=128

Total variance: 53.05

Explained variance: 9.89%

python3 pca_first.py --examples=55000 --iterations=1024

Total variance: 52.74

Explained variance: 9.71%

mnist_layers_activations

Deadline: Mar 05, 22:00 2 points

Before solving the assignment, start by playing with example_keras_tensorboard.py in order to familiarize yourself with Keras and TensorBoard.

Run it, and when it finishes, run TensorBoard using tensorboard --logdir logs. Then open http://localhost:6006 in a browser and explore the active tabs.

Your goal is to modify the mnist_layers_activations.py template such that a user-specified neural network is constructed:

- A number of hidden layers (including zero) can be specified on the command line using the parameter hidden_layers.

- The activation function of these hidden layers can also be specified as a command line parameter activation, with supported values of none, relu, tanh and sigmoid.

Note that your results may be slightly different, depending on your CPU type and whether you use a GPU.

python3 mnist_layers_activations.py --epochs=1 --hidden_layers=0 --activation=none

accuracy: 0.7801 - loss: 0.8405 - val_accuracy: 0.9300 - val_loss: 0.2716

python3 mnist_layers_activations.py --epochs=1 --hidden_layers=1 --activation=none

accuracy: 0.8483 - loss: 0.5230 - val_accuracy: 0.9352 - val_loss: 0.2422

python3 mnist_layers_activations.py --epochs=1 --hidden_layers=1 --activation=relu

accuracy: 0.8503 - loss: 0.5286 - val_accuracy: 0.9604 - val_loss: 0.1432

python3 mnist_layers_activations.py --epochs=1 --hidden_layers=1 --activation=tanh

accuracy: 0.8529 - loss: 0.5183 - val_accuracy: 0.9564 - val_loss: 0.1632

python3 mnist_layers_activations.py --epochs=1 --hidden_layers=1 --activation=sigmoid

accuracy: 0.7851 - loss: 0.8650 - val_accuracy: 0.9414 - val_loss: 0.2196

python3 mnist_layers_activations.py --epochs=1 --hidden_layers=3 --activation=relu

accuracy: 0.8497 - loss: 0.5011 - val_accuracy: 0.9664 - val_loss: 0.1225

Note that your results may be slightly different, depending on your CPU type and whether you use a GPU.

python3 mnist_layers_activations.py --hidden_layers=0 --activation=none

Epoch 1/10 accuracy: 0.7801 - loss: 0.8405 - val_accuracy: 0.9300 - val_loss: 0.2716

Epoch 5/10 accuracy: 0.9222 - loss: 0.2792 - val_accuracy: 0.9406 - val_loss: 0.2203

Epoch 10/10 accuracy: 0.9304 - loss: 0.2515 - val_accuracy: 0.9432 - val_loss: 0.2159

python3 mnist_layers_activations.py --hidden_layers=1 --activation=none

Epoch 1/10 accuracy: 0.8483 - loss: 0.5230 - val_accuracy: 0.9352 - val_loss: 0.2422

Epoch 5/10 accuracy: 0.9236 - loss: 0.2758 - val_accuracy: 0.9360 - val_loss: 0.2325

Epoch 10/10 accuracy: 0.9298 - loss: 0.2517 - val_accuracy: 0.9354 - val_loss: 0.2439

python3 mnist_layers_activations.py --hidden_layers=1 --activation=relu

Epoch 1/10 accuracy: 0.8503 - loss: 0.5286 - val_accuracy: 0.9604 - val_loss: 0.1432

Epoch 5/10 accuracy: 0.9824 - loss: 0.0613 - val_accuracy: 0.9808 - val_loss: 0.0740

Epoch 10/10 accuracy: 0.9948 - loss: 0.0202 - val_accuracy: 0.9788 - val_loss: 0.0821

python3 mnist_layers_activations.py --hidden_layers=1 --activation=tanh

Epoch 1/10 accuracy: 0.8529 - loss: 0.5183 - val_accuracy: 0.9564 - val_loss: 0.1632

Epoch 5/10 accuracy: 0.9800 - loss: 0.0728 - val_accuracy: 0.9740 - val_loss: 0.0853

Epoch 10/10 accuracy: 0.9948 - loss: 0.0244 - val_accuracy: 0.9782 - val_loss: 0.0772

python3 mnist_layers_activations.py --hidden_layers=1 --activation=sigmoid

Epoch 1/10 accuracy: 0.7851 - loss: 0.8650 - val_accuracy: 0.9414 - val_loss: 0.2196

Epoch 5/10 accuracy: 0.9647 - loss: 0.1270 - val_accuracy: 0.9704 - val_loss: 0.1079

Epoch 10/10 accuracy: 0.9852 - loss: 0.0583 - val_accuracy: 0.9756 - val_loss: 0.0837

python3 mnist_layers_activations.py --hidden_layers=3 --activation=relu

Epoch 1/10 accuracy: 0.8497 - loss: 0.5011 - val_accuracy: 0.9664 - val_loss: 0.1225

Epoch 5/10 accuracy: 0.9862 - loss: 0.0438 - val_accuracy: 0.9734 - val_loss: 0.1026

Epoch 10/10 accuracy: 0.9932 - loss: 0.0202 - val_accuracy: 0.9818 - val_loss: 0.0865

python3 mnist_layers_activations.py --hidden_layers=10 --activation=relu

Epoch 1/10 accuracy: 0.7710 - loss: 0.6793 - val_accuracy: 0.9570 - val_loss: 0.1479

Epoch 5/10 accuracy: 0.9780 - loss: 0.0783 - val_accuracy: 0.9786 - val_loss: 0.0808

Epoch 10/10 accuracy: 0.9869 - loss: 0.0481 - val_accuracy: 0.9724 - val_loss: 0.1163

python3 mnist_layers_activations.py --hidden_layers=10 --activation=sigmoid

Epoch 1/10 accuracy: 0.1072 - loss: 2.3068 - val_accuracy: 0.1784 - val_loss: 2.1247

Epoch 5/10 accuracy: 0.8825 - loss: 0.4776 - val_accuracy: 0.9164 - val_loss: 0.3686

Epoch 10/10 accuracy: 0.9294 - loss: 0.2994 - val_accuracy: 0.9386 - val_loss: 0.2671

sgd_backpropagation

Deadline: Mar 12, 22:00 3 points

In this exercise you will learn how to compute gradients using so-called automatic differentiation, which allows running the backpropagation algorithm automatically for a given computation. You can read the Automatic Differentiation with torch.autograd tutorial if interested. After computing the gradient, you should then perform training by running manually implemented minibatch stochastic gradient descent.

Starting with the sgd_backpropagation.py template, you should:

- implement a neural network with a single tanh hidden layer and categorical output layer;

- compute the crossentropy loss;

- use .backward() to automatically compute the gradient of the loss with respect to all variables;

- perform the SGD update.

Note that your results may be slightly different, depending on your CPU type and whether you use a GPU.

python3 sgd_backpropagation.py --epochs=2 --batch_size=64 --hidden_layer=20 --learning_rate=0.1

Dev accuracy after epoch 1 is 93.30

Dev accuracy after epoch 2 is 94.38

Test accuracy after epoch 2 is 93.15

python3 sgd_backpropagation.py --epochs=2 --batch_size=100 --hidden_layer=32 --learning_rate=0.2

Dev accuracy after epoch 1 is 93.64

Dev accuracy after epoch 2 is 94.80

Test accuracy after epoch 2 is 93.54

Note that your results may be slightly different, depending on your CPU type and whether you use a GPU.

python3 sgd_backpropagation.py --batch_size=64 --hidden_layer=20 --learning_rate=0.1

Dev accuracy after epoch 1 is 93.30

Dev accuracy after epoch 2 is 94.38

Dev accuracy after epoch 3 is 95.16

Dev accuracy after epoch 4 is 95.50

Dev accuracy after epoch 5 is 95.96

Dev accuracy after epoch 6 is 96.04

Dev accuracy after epoch 7 is 95.82

Dev accuracy after epoch 8 is 95.92

Dev accuracy after epoch 9 is 95.96

Dev accuracy after epoch 10 is 96.16

Test accuracy after epoch 10 is 95.26

python3 sgd_backpropagation.py --batch_size=100 --hidden_layer=32 --learning_rate=0.2

Dev accuracy after epoch 1 is 93.64

Dev accuracy after epoch 2 is 94.80

Dev accuracy after epoch 3 is 95.56

Dev accuracy after epoch 4 is 95.98

Dev accuracy after epoch 5 is 96.24

Dev accuracy after epoch 6 is 96.74

Dev accuracy after epoch 7 is 96.52

Dev accuracy after epoch 8 is 96.54

Dev accuracy after epoch 9 is 97.04

Dev accuracy after epoch 10 is 97.02

Test accuracy after epoch 10 is 96.16

sgd_manual

Deadline: Mar 12, 22:00 2 points

The goal in this exercise is to extend your solution to the sgd_backpropagation assignment by manually computing the gradient.

While in this assignment we compute the gradient manually, we will nearly always use the automatic differentiation. Therefore, the assignment is more of a mathematical exercise than a real-world application. Furthermore, we will compute the derivatives together on the Mar 06 practicals.

Start with the sgd_manual.py template, which is based on the sgd_backpropagation.py one.

Note that ReCodEx disables the PyTorch automatic differentiation during evaluation.

Note that your results may be slightly different, depending on your CPU type and whether you use a GPU.

python3 sgd_manual.py --epochs=2 --batch_size=64 --hidden_layer=20 --learning_rate=0.1

Dev accuracy after epoch 1 is 93.30

Dev accuracy after epoch 2 is 94.38

Test accuracy after epoch 2 is 93.15

python3 sgd_manual.py --epochs=2 --batch_size=100 --hidden_layer=32 --learning_rate=0.2

Dev accuracy after epoch 1 is 93.64

Dev accuracy after epoch 2 is 94.80

Test accuracy after epoch 2 is 93.54

Note that your results may be slightly different, depending on your CPU type and whether you use a GPU.

python3 sgd_manual.py --batch_size=64 --hidden_layer=20 --learning_rate=0.1

Dev accuracy after epoch 1 is 93.30

Dev accuracy after epoch 2 is 94.38

Dev accuracy after epoch 3 is 95.16

Dev accuracy after epoch 4 is 95.50

Dev accuracy after epoch 5 is 95.96

Dev accuracy after epoch 6 is 96.04

Dev accuracy after epoch 7 is 95.82

Dev accuracy after epoch 8 is 95.92

Dev accuracy after epoch 9 is 95.96

Dev accuracy after epoch 10 is 96.16

Test accuracy after epoch 10 is 95.26

python3 sgd_manual.py --batch_size=100 --hidden_layer=32 --learning_rate=0.2

Dev accuracy after epoch 1 is 93.64

Dev accuracy after epoch 2 is 94.80

Dev accuracy after epoch 3 is 95.56

Dev accuracy after epoch 4 is 95.98

Dev accuracy after epoch 5 is 96.24

Dev accuracy after epoch 6 is 96.74

Dev accuracy after epoch 7 is 96.52

Dev accuracy after epoch 8 is 96.54

Dev accuracy after epoch 9 is 97.04

Dev accuracy after epoch 10 is 97.02

Test accuracy after epoch 10 is 96.16

mnist_training

Deadline: Mar 12, 22:00 2 points

This exercise should teach you how to use different optimizers, learning rates, and learning rate decays. Your goal is to modify the mnist_training.py template and implement the following:

- Using the specified optimizer (either SGD or Adam).

- Optionally using momentum for the SGD optimizer.

- Using the specified learning rate for the optimizer.

- Optionally using a given learning rate schedule. The schedule can be either linear, exponential, or cosine. If a schedule is specified, you also get a final learning rate, and the learning rate should be gradually decreased during training to reach the final learning rate just after the training (i.e., the first update after the training would use exactly the final learning rate).
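One possible way to express the three schedules as a function of the number of optimizer updates performed so far is sketched below; it satisfies the requirement that the first update after training (step == total_steps) uses exactly the final learning rate. The function and its arguments are hypothetical and framework-independent, and how such a schedule is attached to the SGD or Adam optimizer in the template is not shown.

```python
import math


def learning_rate(step: int, total_steps: int, initial: float, final: float, decay: str) -> float:
    """Learning rate for the update with index `step` (0-based)."""
    progress = step / total_steps  # 0 for the first update, 1 for the first update after training
    if decay == "linear":
        return initial + (final - initial) * progress
    if decay == "exponential":
        # Geometric interpolation: multiply by the same factor at every step.
        return initial * (final / initial) ** progress
    if decay == "cosine":
        # Half a cosine period, from `initial` down to `final`.
        return final + (initial - final) * 0.5 * (1 + math.cos(math.pi * progress))
    raise ValueError(f"unknown decay {decay!r}")


print(learning_rate(100, 100, 0.01, 0.0001, "cosine"))  # 0.0001
```

Under this sketch, total_steps would be the number of optimizer updates during the whole training, for example the number of epochs times the number of batches per epoch.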

Note that your results may be slightly different, depending on your CPU type and whether you use a GPU.

python3 mnist_training.py --epochs=1 --optimizer=SGD --learning_rate=0.01

accuracy: 0.6537 - loss: 1.2786 - val_accuracy: 0.9098 - val_loss: 0.3743

python3 mnist_training.py --epochs=1 --optimizer=SGD --learning_rate=0.01 --momentum=0.9

accuracy: 0.8221 - loss: 0.6138 - val_accuracy: 0.9492 - val_loss: 0.1873

python3 mnist_training.py --epochs=1 --optimizer=SGD --learning_rate=0.1

accuracy: 0.8400 - loss: 0.5742 - val_accuracy: 0.9528 - val_loss: 0.1800

python3 mnist_training.py --epochs=1 --optimizer=Adam --learning_rate=0.001

accuracy: 0.8548 - loss: 0.5121 - val_accuracy: 0.9640 - val_loss: 0.1327

python3 mnist_training.py --epochs=1 --optimizer=Adam --learning_rate=0.01

accuracy: 0.8858 - loss: 0.3598 - val_accuracy: 0.9564 - val_loss: 0.1393

python3 mnist_training.py --epochs=2 --optimizer=Adam --learning_rate=0.01 --decay=linear --learning_rate_final=0.0001

Epoch 1/2 accuracy: 0.8889 - loss: 0.3520 - val_accuracy: 0.9682 - val_loss: 0.1107

Epoch 2/2 accuracy: 0.9715 - loss: 0.0956 - val_accuracy: 0.9792 - val_loss: 0.0688

Next learning rate to be used: 0.0001

python3 mnist_training.py --epochs=2 --optimizer=Adam --learning_rate=0.01 --decay=exponential --learning_rate_final=0.001

Epoch 1/2 accuracy: 0.8912 - loss: 0.3447 - val_accuracy: 0.9702 - val_loss: 0.0997

Epoch 2/2 accuracy: 0.9746 - loss: 0.0824 - val_accuracy: 0.9778 - val_loss: 0.0776

Next learning rate to be used: 0.001

python3 mnist_training.py --epochs=2 --optimizer=Adam --learning_rate=0.01 --decay=cosine --learning_rate_final=0.0001

Epoch 1/2 accuracy: 0.8875 - loss: 0.3548 - val_accuracy: 0.9726 - val_loss: 0.0976

Epoch 2/2 accuracy: 0.9742 - loss: 0.0851 - val_accuracy: 0.9764 - val_loss: 0.0740

Next learning rate to be used: 0.0001

Note that your results may be slightly different, depending on your CPU type and whether you use a GPU.

python3 mnist_training.py --optimizer=SGD --learning_rate=0.01

Epoch 1/10 accuracy: 0.6537 - loss: 1.2786 - val_accuracy: 0.9098 - val_loss: 0.3743

Epoch 2/10 accuracy: 0.8848 - loss: 0.4316 - val_accuracy: 0.9222 - val_loss: 0.2895

Epoch 3/10 accuracy: 0.9057 - loss: 0.3450 - val_accuracy: 0.9308 - val_loss: 0.2539

Epoch 4/10 accuracy: 0.9118 - loss: 0.3131 - val_accuracy: 0.9372 - val_loss: 0.2368

Epoch 5/10 accuracy: 0.9188 - loss: 0.2924 - val_accuracy: 0.9406 - val_loss: 0.2202

Epoch 6/10 accuracy: 0.9235 - loss: 0.2750 - val_accuracy: 0.9426 - val_loss: 0.2076

Epoch 7/10 accuracy: 0.9291 - loss: 0.2572 - val_accuracy: 0.9464 - val_loss: 0.1997

Epoch 8/10 accuracy: 0.9304 - loss: 0.2456 - val_accuracy: 0.9494 - val_loss: 0.1909

Epoch 9/10 accuracy: 0.9339 - loss: 0.2340 - val_accuracy: 0.9536 - val_loss: 0.1813

Epoch 10/10 accuracy: 0.9377 - loss: 0.2199 - val_accuracy: 0.9534 - val_loss: 0.1756

python3 mnist_training.py --optimizer=SGD --learning_rate=0.01 --momentum=0.9

Epoch 1/10 accuracy: 0.8221 - loss: 0.6138 - val_accuracy: 0.9492 - val_loss: 0.1873

Epoch 2/10 accuracy: 0.9370 - loss: 0.2173 - val_accuracy: 0.9646 - val_loss: 0.1385

Epoch 3/10 accuracy: 0.9599 - loss: 0.1453 - val_accuracy: 0.9716 - val_loss: 0.1076

Epoch 4/10 accuracy: 0.9673 - loss: 0.1127 - val_accuracy: 0.9746 - val_loss: 0.0961

Epoch 5/10 accuracy: 0.9740 - loss: 0.0933 - val_accuracy: 0.9774 - val_loss: 0.0875

Epoch 6/10 accuracy: 0.9778 - loss: 0.0811 - val_accuracy: 0.9746 - val_loss: 0.0856

Epoch 7/10 accuracy: 0.9821 - loss: 0.0680 - val_accuracy: 0.9774 - val_loss: 0.0803

Epoch 8/10 accuracy: 0.9825 - loss: 0.0632 - val_accuracy: 0.9776 - val_loss: 0.0780

Epoch 9/10 accuracy: 0.9849 - loss: 0.0552 - val_accuracy: 0.9804 - val_loss: 0.0725

Epoch 10/10 accuracy: 0.9877 - loss: 0.0463 - val_accuracy: 0.9780 - val_loss: 0.0735

python3 mnist_training.py --optimizer=SGD --learning_rate=0.1

Epoch 1/10 accuracy: 0.8400 - loss: 0.5742 - val_accuracy: 0.9528 - val_loss: 0.1800

Epoch 2/10 accuracy: 0.9389 - loss: 0.2123 - val_accuracy: 0.9670 - val_loss: 0.1335

Epoch 3/10 accuracy: 0.9602 - loss: 0.1431 - val_accuracy: 0.9728 - val_loss: 0.1052

Epoch 4/10 accuracy: 0.9685 - loss: 0.1115 - val_accuracy: 0.9770 - val_loss: 0.0946

Epoch 5/10 accuracy: 0.9747 - loss: 0.0927 - val_accuracy: 0.9754 - val_loss: 0.0878

Epoch 6/10 accuracy: 0.9775 - loss: 0.0798 - val_accuracy: 0.9754 - val_loss: 0.0852

Epoch 7/10 accuracy: 0.9813 - loss: 0.0680 - val_accuracy: 0.9780 - val_loss: 0.0797

Epoch 8/10 accuracy: 0.9828 - loss: 0.0621 - val_accuracy: 0.9796 - val_loss: 0.0757

Epoch 9/10 accuracy: 0.9847 - loss: 0.0550 - val_accuracy: 0.9804 - val_loss: 0.0731

Epoch 10/10 accuracy: 0.9875 - loss: 0.0464 - val_accuracy: 0.9782 - val_loss: 0.0731

python3 mnist_training.py --optimizer=Adam --learning_rate=0.001

Epoch 1/10 accuracy: 0.8548 - loss: 0.5121 - val_accuracy: 0.9640 - val_loss: 0.1327

Epoch 2/10 accuracy: 0.9552 - loss: 0.1505 - val_accuracy: 0.9706 - val_loss: 0.1118

Epoch 3/10 accuracy: 0.9744 - loss: 0.0900 - val_accuracy: 0.9770 - val_loss: 0.0833

Epoch 4/10 accuracy: 0.9808 - loss: 0.0658 - val_accuracy: 0.9778 - val_loss: 0.0786

Epoch 5/10 accuracy: 0.9836 - loss: 0.0533 - val_accuracy: 0.9804 - val_loss: 0.0735

Epoch 6/10 accuracy: 0.9890 - loss: 0.0403 - val_accuracy: 0.9782 - val_loss: 0.0772

Epoch 7/10 accuracy: 0.9911 - loss: 0.0311 - val_accuracy: 0.9792 - val_loss: 0.0756

Epoch 8/10 accuracy: 0.9922 - loss: 0.0257 - val_accuracy: 0.9818 - val_loss: 0.0717

Epoch 9/10 accuracy: 0.9947 - loss: 0.0202 - val_accuracy: 0.9806 - val_loss: 0.0734

Epoch 10/10 accuracy: 0.9953 - loss: 0.0167 - val_accuracy: 0.9802 - val_loss: 0.0779

python3 mnist_training.py --optimizer=Adam --learning_rate=0.01

Epoch 1/10 accuracy: 0.8858 - loss: 0.3598 - val_accuracy: 0.9564 - val_loss: 0.1393

Epoch 2/10 accuracy: 0.9565 - loss: 0.1478 - val_accuracy: 0.9622 - val_loss: 0.1445

Epoch 3/10 accuracy: 0.9688 - loss: 0.1041 - val_accuracy: 0.9686 - val_loss: 0.1184

Epoch 4/10 accuracy: 0.9717 - loss: 0.1016 - val_accuracy: 0.9644 - val_loss: 0.1538

Epoch 5/10 accuracy: 0.9749 - loss: 0.0914 - val_accuracy: 0.9642 - val_loss: 0.1477

Epoch 6/10 accuracy: 0.9754 - loss: 0.0878 - val_accuracy: 0.9714 - val_loss: 0.1375

Epoch 7/10 accuracy: 0.9779 - loss: 0.0804 - val_accuracy: 0.9684 - val_loss: 0.1510

Epoch 8/10 accuracy: 0.9793 - loss: 0.0764 - val_accuracy: 0.9696 - val_loss: 0.1803

Epoch 9/10 accuracy: 0.9808 - loss: 0.0747 - val_accuracy: 0.9708 - val_loss: 0.1576

Epoch 10/10 accuracy: 0.9812 - loss: 0.0750 - val_accuracy: 0.9716 - val_loss: 0.1556

python3 mnist_training.py --optimizer=Adam --learning_rate=0.01 --decay=linear --learning_rate_final=0.0001

Epoch 1/10 accuracy: 0.8862 - loss: 0.3582 - val_accuracy: 0.9636 - val_loss: 0.1395

Epoch 2/10 accuracy: 0.9603 - loss: 0.1313 - val_accuracy: 0.9684 - val_loss: 0.1056

Epoch 3/10 accuracy: 0.9730 - loss: 0.0899 - val_accuracy: 0.9718 - val_loss: 0.1089

Epoch 4/10 accuracy: 0.9780 - loss: 0.0701 - val_accuracy: 0.9676 - val_loss: 0.1250

Epoch 5/10 accuracy: 0.9818 - loss: 0.0528 - val_accuracy: 0.9744 - val_loss: 0.1001

Epoch 6/10 accuracy: 0.9876 - loss: 0.0389 - val_accuracy: 0.9738 - val_loss: 0.1233

Epoch 7/10 accuracy: 0.9907 - loss: 0.0255 - val_accuracy: 0.9780 - val_loss: 0.0989

Epoch 8/10 accuracy: 0.9954 - loss: 0.0141 - val_accuracy: 0.9802 - val_loss: 0.0909

Epoch 9/10 accuracy: 0.9976 - loss: 0.0079 - val_accuracy: 0.9814 - val_loss: 0.0923

Epoch 10/10 accuracy: 0.9995 - loss: 0.0033 - val_accuracy: 0.9818 - val_loss: 0.0946

Next learning rate to be used: 0.0001

python3 mnist_training.py --optimizer=Adam --learning_rate=0.01 --decay=exponential --learning_rate_final=0.001

Epoch 1/10 accuracy: 0.8877 - loss: 0.3564 - val_accuracy: 0.9616 - val_loss: 0.1278

Epoch 2/10 accuracy: 0.9642 - loss: 0.1228 - val_accuracy: 0.9624 - val_loss: 0.1149

Epoch 3/10 accuracy: 0.9778 - loss: 0.0720 - val_accuracy: 0.9748 - val_loss: 0.0781

Epoch 4/10 accuracy: 0.9844 - loss: 0.0500 - val_accuracy: 0.9750 - val_loss: 0.0973

Epoch 5/10 accuracy: 0.9884 - loss: 0.0356 - val_accuracy: 0.9800 - val_loss: 0.0709

Epoch 6/10 accuracy: 0.9933 - loss: 0.0228 - val_accuracy: 0.9792 - val_loss: 0.0810

Epoch 7/10 accuracy: 0.9956 - loss: 0.0150 - val_accuracy: 0.9806 - val_loss: 0.0785

Epoch 8/10 accuracy: 0.9969 - loss: 0.0095 - val_accuracy: 0.9826 - val_loss: 0.0746

Epoch 9/10 accuracy: 0.9985 - loss: 0.0069 - val_accuracy: 0.9808 - val_loss: 0.0783

Epoch 10/10 accuracy: 0.9994 - loss: 0.0036 - val_accuracy: 0.9818 - val_loss: 0.0783

Next learning rate to be used: 0.001

python3 mnist_training.py --optimizer=Adam --learning_rate=0.01 --decay=cosine --learning_rate_final=0.0001

Epoch 1/10 accuracy: 0.8858 - loss: 0.3601 - val_accuracy: 0.9624 - val_loss: 0.1311

Epoch 2/10 accuracy: 0.9566 - loss: 0.1461 - val_accuracy: 0.9654 - val_loss: 0.1270

Epoch 3/10 accuracy: 0.9695 - loss: 0.1023 - val_accuracy: 0.9740 - val_loss: 0.0965

Epoch 4/10 accuracy: 0.9755 - loss: 0.0790 - val_accuracy: 0.9710 - val_loss: 0.1152

Epoch 5/10 accuracy: 0.9831 - loss: 0.0562 - val_accuracy: 0.9748 - val_loss: 0.1004

Epoch 6/10 accuracy: 0.9889 - loss: 0.0353 - val_accuracy: 0.9758 - val_loss: 0.1003

Epoch 7/10 accuracy: 0.9930 - loss: 0.0206 - val_accuracy: 0.9786 - val_loss: 0.0864

Epoch 8/10 accuracy: 0.9972 - loss: 0.0096 - val_accuracy: 0.9790 - val_loss: 0.0958

Epoch 9/10 accuracy: 0.9985 - loss: 0.0068 - val_accuracy: 0.9802 - val_loss: 0.0880

Epoch 10/10 accuracy: 0.9992 - loss: 0.0042 - val_accuracy: 0.9802 - val_loss: 0.0891

Next learning rate to be used: 0.0001

gym_cartpole

Deadline: Mar 12, 22:00 3 points

Solve the CartPole-v1 environment from the Gymnasium library, using only the provided supervised training data. The data is available in the gym_cartpole_data.txt file, each line containing one observation (four space-separated floats) and the corresponding action (the last space-separated integer). Start with the gym_cartpole.py template.
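The data format described above can be parsed with a few lines of plain Python. This is only a sketch: the helper name load_cartpole_data is hypothetical, the template may already load the data for you, and the sample values below are made up for illustration.

```python
import io


def load_cartpole_data(lines):
    """Parse lines of four space-separated floats followed by an integer action."""
    observations, actions = [], []
    for line in lines:
        fields = line.split()
        if not fields:
            continue  # skip empty lines
        observations.append([float(value) for value in fields[:-1]])
        actions.append(int(fields[-1]))
    return observations, actions


# Illustrative in-memory sample; for the real data use
#   with open("gym_cartpole_data.txt") as file:
#       observations, actions = load_cartpole_data(file)
sample = io.StringIO("0.01 -0.02 0.03 0.04 1\n-0.05 0.1 0.02 -0.3 0\n")
observations, actions = load_cartpole_data(sample)
```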

The solution to this task should be a model which passes evaluation on random inputs. This evaluation can be performed by running gym_cartpole.py with the --evaluate argument (optionally rendering if the --render option is provided), or by directly calling the evaluate_model method. In order to pass, you must achieve an average reward of at least 475 on 100 episodes. Your model should have either one or two outputs (i.e., using either a sigmoid or a softmax output function).

When designing the model, you should consider that the size of the training data is very small and the data is quite noisy.

When submitting to ReCodEx, do not forget to also submit the trained model.

mnist_regularization

Deadline: Mar 19, 22:00 3 points

You will learn how to implement three regularization methods in this assignment. Start with the mnist_regularization.py template and implement the following:

- Allow using dropout with rate args.dropout. Add a dropout layer after the first Flatten and also after all Dense hidden layers (but not after the output layer).

- Allow using AdamW with weight decay of strength args.weight_decay, making sure the weight decay is not applied on biases.

- Allow using label smoothing with weight args.label_smoothing. Instead of SparseCategoricalCrossentropy, you will need to use CategoricalCrossentropy, which offers the label_smoothing argument.
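Label smoothing replaces the one-hot gold distribution by a mixture with the uniform distribution; Keras' CategoricalCrossentropy computes y_true * (1 - label_smoothing) + label_smoothing / num_classes. The following plain-Python sketch shows the resulting targets and the loss; the function names are hypothetical and the code is only meant to illustrate the formula.

```python
import math


def smooth_one_hot(label: int, num_classes: int, smoothing: float) -> list[float]:
    """One-hot target with label smoothing: y * (1 - smoothing) + smoothing / num_classes."""
    return [(1.0 if i == label else 0.0) * (1 - smoothing) + smoothing / num_classes
            for i in range(num_classes)]


def categorical_crossentropy(target: list[float], probabilities: list[float]) -> float:
    """Cross-entropy -sum_i target_i * log(probabilities_i); probabilities must be positive."""
    return -sum(t * math.log(p) for t, p in zip(target, probabilities))


# With smoothing 0.1 and 10 classes, the gold class gets 0.91 and every other class 0.01
# (up to floating-point rounding).
target = smooth_one_hot(label=3, num_classes=10, smoothing=0.1)
```

Because the smoothed target is never one-hot, even a prediction matching it exactly has a positive loss (the entropy of the target), which is consistent with the higher losses reported in the label smoothing runs below.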

In addition to submitting the task in ReCodEx, also run the following variations and observe the results in TensorBoard, notably the training, development and test set accuracy and loss:

- dropout rate 0, 0.3, 0.5, 0.6, 0.8;

- weight decay 0, 0.1, 0.3, 0.5, 1.0;

- label smoothing 0, 0.1, 0.3, 0.5.

Note that your results may be slightly different, depending on your CPU type and whether you use a GPU.

python3 mnist_regularization.py --epochs=1 --dropout=0.3

accuracy: 0.5981 - loss: 1.2688 - val_accuracy: 0.9174 - val_loss: 0.3051

python3 mnist_regularization.py --epochs=1 --dropout=0.5 --hidden_layers 300 300

accuracy: 0.3429 - loss: 1.9163 - val_accuracy: 0.8826 - val_loss: 0.4937

python3 mnist_regularization.py --epochs=1 --weight_decay=0.1

accuracy: 0.7014 - loss: 1.0412 - val_accuracy: 0.9236 - val_loss: 0.2776

python3 mnist_regularization.py --epochs=1 --weight_decay=0.3

accuracy: 0.7006 - loss: 1.0429 - val_accuracy: 0.9232 - val_loss: 0.2801

python3 mnist_regularization.py --epochs=1 --label_smoothing=0.1

accuracy: 0.7102 - loss: 1.3015 - val_accuracy: 0.9276 - val_loss: 0.7656

python3 mnist_regularization.py --epochs=1 --label_smoothing=0.3

accuracy: 0.7113 - loss: 1.6854 - val_accuracy: 0.9332 - val_loss: 1.3709

mnist_ensemble

Deadline: Mar 19, 22:00 2 points

Your goal in this assignment is to implement model ensembling.

The mnist_ensemble.py template trains args.models individual models, and your goal is to perform an ensemble of the first model, first two models, first three models, …, all models, and evaluate their accuracy on the development set.
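A common way to ensemble classifiers is to average their predicted distributions and take the argmax. Below is a plain-Python sketch of the incremental evaluation, assuming each model's predictions are available as per-example probability lists; the function name and data layout are hypothetical. Since dividing by the number of models does not change the argmax, a running sum is sufficient.

```python
def ensemble_accuracies(model_probabilities, gold_labels):
    """Accuracies of ensembles of the first 1, 2, ..., all models.

    model_probabilities[m][i] is the predicted distribution of model m on example i.
    """
    summed = [[0.0] * len(dist) for dist in model_probabilities[0]]
    accuracies = []
    for probabilities in model_probabilities:
        # Add this model's distributions to the running sum.
        for row, dist in zip(summed, probabilities):
            for c, p in enumerate(dist):
                row[c] += p
        # The argmax of the sum equals the argmax of the average.
        predictions = [max(range(len(row)), key=row.__getitem__) for row in summed]
        correct = sum(pred == gold for pred, gold in zip(predictions, gold_labels))
        accuracies.append(correct / len(gold_labels))
    return accuracies
```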

Note that your results may be slightly different, depending on your CPU type and whether you use a GPU.

python3 mnist_ensemble.py --epochs=1 --models=5

Model 1, individual accuracy 96.04, ensemble accuracy 96.04

Model 2, individual accuracy 96.28, ensemble accuracy 96.56

Model 3, individual accuracy 96.12, ensemble accuracy 96.58

Model 4, individual accuracy 95.92, ensemble accuracy 96.70

Model 5, individual accuracy 96.38, ensemble accuracy 96.72

python3 mnist_ensemble.py --epochs=1 --models=5 --hidden_layers=200

Model 1, individual accuracy 96.46, ensemble accuracy 96.46

Model 2, individual accuracy 96.86, ensemble accuracy 96.88

Model 3, individual accuracy 96.54, ensemble accuracy 97.04

Model 4, individual accuracy 96.54, ensemble accuracy 97.06

Model 5, individual accuracy 96.82, ensemble accuracy 97.20

Note that your results may be slightly different, depending on your CPU type and whether you use a GPU.

python3 mnist_ensemble.py --models=5

Model 1, individual accuracy 97.82, ensemble accuracy 97.82

Model 2, individual accuracy 97.80, ensemble accuracy 98.08

Model 3, individual accuracy 98.02, ensemble accuracy 98.20

Model 4, individual accuracy 98.20, ensemble accuracy 98.28

Model 5, individual accuracy 97.64, ensemble accuracy 98.28

python3 mnist_ensemble.py --models=5 --hidden_layers=200

Model 1, individual accuracy 98.12, ensemble accuracy 98.12

Model 2, individual accuracy 98.22, ensemble accuracy 98.42

Model 3, individual accuracy 98.26, ensemble accuracy 98.52

Model 4, individual accuracy 98.32, ensemble accuracy 98.62

Model 5, individual accuracy 97.98, ensemble accuracy 98.70

uppercase

Deadline: Mar 19, 22:00 4 points+5 bonus

This assignment introduces the first NLP task. Your goal is to implement a model which is given a lowercased Czech text and tries to uppercase the appropriate letters. To load the dataset, use the uppercase_data.py module, which loads (and if required also downloads) the data. While the training and the development sets are in correct case, the test set is lowercased.

This is an open-data task, where you submit only the uppercased test set together with the training script (which will not be executed, it will be only used to understand the approach you took, and to indicate teams). Explicitly, submit exactly one .txt file and at least one .py/ipynb file.

The task is also a competition. Everyone who submits a solution achieving at least 98.5% accuracy gets 4 basic points; the remaining 5 bonus points are distributed depending on the relative ordering of your solutions. The accuracy is computed per character and can be evaluated by running uppercase_data.py with the --evaluate argument, or by using its evaluate_file method.

You may want to start with the uppercase.py template, which uses the uppercase_data.py module to load the data, generate an alphabet of a given size containing the most frequent characters, and generate a sliding window view of the data. The template also comments on the possibilities of character representation.
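To make the sliding window view concrete, the sketch below maps every character to the indices of the window characters centred on it, and then applies predicted uppercase decisions to the lowercased text. It is plain Python with a hypothetical encoding (0 for padding, 1 for characters outside the alphabet, alphabet characters from 2); the template's own representation may differ.

```python
def sliding_windows(text: str, window: int, alphabet: list[str]) -> list[list[int]]:
    """For every character, return indices of the 2*window+1 characters centred on it."""
    char_to_id = {char: i + 2 for i, char in enumerate(alphabet)}
    ids = [char_to_id.get(char, 1) for char in text]  # 1 = unknown character
    padded = [0] * window + ids + [0] * window  # 0 = padding outside the text
    return [padded[i:i + 2 * window + 1] for i in range(len(text))]


def apply_uppercase(text: str, is_upper: list[int]) -> str:
    """Uppercase the characters for which the model predicted 1."""
    return "".join(char.upper() if upper else char for char, upper in zip(text, is_upper))


windows = sliding_windows("ahoj", window=1, alphabet=["a", "h", "o"])
# windows == [[0, 2, 3], [2, 3, 4], [3, 4, 1], [4, 1, 0]]
```

Each window is then a fixed-size input for a fully connected model predicting whether its central character should be uppercased.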

Do not use RNNs, CNNs, or Transformers in this task (if you have doubts, contact me); fully connected layers (and therefore also embedding layers), any activations, residual connections, and any regularization layers are fine.

mnist_cnn

Deadline: Mar 26, 22:00 3 points

To pass this assignment, you will learn to construct basic convolutional neural network layers. Start with the mnist_cnn.py template and assume the requested architecture is described by the cnn argument, which contains comma-separated specifications of the following layers:

- C-filters-kernel_size-stride-padding: Add a convolutional layer with ReLU activation and the specified number of filters, kernel size, stride and padding. Example: C-10-3-1-same

- CB-filters-kernel_size-stride-padding: Same as C-filters-kernel_size-stride-padding, but use batch normalization. In detail, start with a convolutional layer without bias and activation, then add a batch normalization layer, and finally a ReLU activation. Example: CB-10-3-1-same

- M-pool_size-stride: Add max pooling with the specified size and stride, using the default "valid" padding. Example: M-3-2

- R-[layers]: Add a residual connection. The layers contain a specification of at least one convolutional layer (but not a recursive residual connection R). The input to the R layer should be processed sequentially by layers, and the produced output (after the ReLU nonlinearity of the last layer) should be added to the input (of this R layer). Example: R-[C-16-3-1-same,C-16-3-1-same]

- F: Flatten inputs. Must appear exactly once in the architecture.

- H-hidden_layer_size: Add a dense layer with ReLU activation and the specified size. Example: H-100

- D-dropout_rate: Apply dropout with the given dropout rate. Example: D-0.5

An example architecture might be --cnn=CB-16-5-2-same,M-3-2,F,H-100,D-0.5.
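When designing architectures, it helps to track the spatial size of the feature maps. The sketch below assumes the Keras/TensorFlow padding convention ("same" gives ceil(size / stride), "valid" gives floor((size - kernel_size) / stride) + 1) and walks through the example architecture above on 28×28 MNIST images; the helper name is hypothetical.

```python
import math


def conv_output_size(size: int, kernel_size: int, stride: int, padding: str) -> int:
    """Spatial output size of a convolution or pooling along one dimension."""
    if padding == "same":
        return math.ceil(size / stride)
    if padding == "valid":
        return (size - kernel_size) // stride + 1
    raise ValueError(f"Unknown padding {padding!r}")


size = 28  # MNIST images are 28x28
size = conv_output_size(size, kernel_size=5, stride=2, padding="same")   # CB-16-5-2-same -> 14
size = conv_output_size(size, kernel_size=3, stride=2, padding="valid")  # M-3-2 -> 6
flattened = 16 * size * size  # F: 16 channels * 6 * 6 = 576 features fed to H-100
```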

You can assume the resulting network is valid; it is fine to crash if it is not.
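The only tricky part of parsing the cnn argument is that the layers inside R-[...] are themselves comma-separated. One possible approach, sketched in plain Python with hypothetical function names, splits only on commas outside brackets and turns every specification into a tuple that model-building code could then consume.

```python
def split_top_level(spec: str) -> list[str]:
    """Split on commas that are not inside R-[...] brackets."""
    parts, depth, current = [], 0, ""
    for char in spec:
        if char == "," and depth == 0:
            parts.append(current)
            current = ""
            continue
        depth += {"[": 1, "]": -1}.get(char, 0)
        current += char
    parts.append(current)
    return parts


def parse_cnn(spec: str) -> list[tuple]:
    """Turn e.g. 'CB-8-3-5-valid,R-[CB-8-3-1-same,CB-8-3-1-same],F,H-50' into tuples."""
    layers = []
    for part in split_top_level(spec):
        kind, _, rest = part.partition("-")
        if kind == "R":
            layers.append(("R", parse_cnn(rest[1:-1])))  # strip the surrounding brackets
        elif kind in ("C", "CB"):
            filters, kernel_size, stride, padding = rest.split("-")
            layers.append((kind, int(filters), int(kernel_size), int(stride), padding))
        elif kind == "M":
            pool_size, stride = rest.split("-")
            layers.append(("M", int(pool_size), int(stride)))
        elif kind == "H":
            layers.append(("H", int(rest)))
        elif kind == "D":
            layers.append(("D", float(rest)))
        elif kind == "F":
            layers.append(("F",))
        else:
            raise ValueError(f"Unknown layer {part!r}")  # crashing on invalid input is fine
    return layers
```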

After a successful ReCodEx submission, you can try obtaining the best accuracy on MNIST and then advance to cifar_competition.

Note that your results may be slightly different, depending on your CPU type and whether you use a GPU.

python3 mnist_cnn.py --epochs=1 --cnn=F,H-100

accuracy: 0.8503 - loss: 0.5286 - val_accuracy: 0.9604 - val_loss: 0.1432

python3 mnist_cnn.py --epochs=1 --cnn=F,H-100,D-0.5

accuracy: 0.7706 - loss: 0.7444 - val_accuracy: 0.9572 - val_loss: 0.1606

python3 mnist_cnn.py --epochs=1 --cnn=M-5-2,F,H-50

accuracy: 0.6630 - loss: 1.0703 - val_accuracy: 0.8798 - val_loss: 0.3894

python3 mnist_cnn.py --epochs=1 --cnn=C-8-3-5-same,C-8-3-2-valid,F,H-50

accuracy: 0.5898 - loss: 1.2535 - val_accuracy: 0.8774 - val_loss: 0.4079

python3 mnist_cnn.py --epochs=1 --cnn=CB-6-3-5-valid,F,H-32

accuracy: 0.6822 - loss: 1.0011 - val_accuracy: 0.9284 - val_loss: 0.2537

python3 mnist_cnn.py --epochs=1 --cnn=CB-8-3-5-valid,R-[CB-8-3-1-same,CB-8-3-1-same],F,H-50

accuracy: 0.7562 - loss: 0.7717 - val_accuracy: 0.9486 - val_loss: 0.1734

Note that your results may be slightly different, depending on your CPU type and whether you use a GPU.

python3 mnist_cnn.py --cnn=F,H-100

Epoch 1/10 accuracy: 0.8503 - loss: 0.5286 - val_accuracy: 0.9604 - val_loss: 0.1432

Epoch 2/10 accuracy: 0.9508 - loss: 0.1654 - val_accuracy: 0.9650 - val_loss: 0.1245

Epoch 3/10 accuracy: 0.9710 - loss: 0.1034 - val_accuracy: 0.9738 - val_loss: 0.0916

Epoch 4/10 accuracy: 0.9773 - loss: 0.0774 - val_accuracy: 0.9762 - val_loss: 0.0848

Epoch 5/10 accuracy: 0.9824 - loss: 0.0613 - val_accuracy: 0.9808 - val_loss: 0.0740

Epoch 6/10 accuracy: 0.9857 - loss: 0.0485 - val_accuracy: 0.9760 - val_loss: 0.0761

Epoch 7/10 accuracy: 0.9893 - loss: 0.0373 - val_accuracy: 0.9770 - val_loss: 0.0774

Epoch 8/10 accuracy: 0.9911 - loss: 0.0323 - val_accuracy: 0.9774 - val_loss: 0.0813

Epoch 9/10 accuracy: 0.9922 - loss: 0.0271 - val_accuracy: 0.9794 - val_loss: 0.0819

Epoch 10/10 accuracy: 0.9948 - loss: 0.0202 - val_accuracy: 0.9788 - val_loss: 0.0821

python3 mnist_cnn.py --cnn=F,H-100,D-0.5

Epoch 1/10 accuracy: 0.7706 - loss: 0.7444 - val_accuracy: 0.9572 - val_loss: 0.1606

Epoch 2/10 accuracy: 0.9177 - loss: 0.2808 - val_accuracy: 0.9646 - val_loss: 0.1286

Epoch 3/10 accuracy: 0.9313 - loss: 0.2340 - val_accuracy: 0.9732 - val_loss: 0.1038

Epoch 4/10 accuracy: 0.9389 - loss: 0.2025 - val_accuracy: 0.9730 - val_loss: 0.0951

Epoch 5/10 accuracy: 0.9409 - loss: 0.1919 - val_accuracy: 0.9752 - val_loss: 0.0927

Epoch 6/10 accuracy: 0.9448 - loss: 0.1784 - val_accuracy: 0.9768 - val_loss: 0.0864

Epoch 7/10 accuracy: 0.9495 - loss: 0.1649 - val_accuracy: 0.9758 - val_loss: 0.0833

Epoch 8/10 accuracy: 0.9506 - loss: 0.1577 - val_accuracy: 0.9768 - val_loss: 0.0826

Epoch 9/10 accuracy: 0.9544 - loss: 0.1496 - val_accuracy: 0.9778 - val_loss: 0.0806

Epoch 10/10 accuracy: 0.9560 - loss: 0.1413 - val_accuracy: 0.9754 - val_loss: 0.0792

python3 mnist_cnn.py --cnn=F,H-200,D-0.5

Epoch 1/10 accuracy: 0.8109 - loss: 0.6191 - val_accuracy: 0.9654 - val_loss: 0.1286

Epoch 2/10 accuracy: 0.9382 - loss: 0.2101 - val_accuracy: 0.9718 - val_loss: 0.0995

Epoch 3/10 accuracy: 0.9530 - loss: 0.1598 - val_accuracy: 0.9752 - val_loss: 0.0820

Epoch 4/10 accuracy: 0.9586 - loss: 0.1377 - val_accuracy: 0.9792 - val_loss: 0.0758

Epoch 5/10 accuracy: 0.9635 - loss: 0.1233 - val_accuracy: 0.9792 - val_loss: 0.0684

Epoch 6/10 accuracy: 0.9639 - loss: 0.1133 - val_accuracy: 0.9800 - val_loss: 0.0709

Epoch 7/10 accuracy: 0.9698 - loss: 0.1003 - val_accuracy: 0.9822 - val_loss: 0.0647

Epoch 8/10 accuracy: 0.9701 - loss: 0.0945 - val_accuracy: 0.9814 - val_loss: 0.0626

Epoch 9/10 accuracy: 0.9720 - loss: 0.0886 - val_accuracy: 0.9810 - val_loss: 0.0658

Epoch 10/10 accuracy: 0.9727 - loss: 0.0843 - val_accuracy: 0.9816 - val_loss: 0.0643

python3 mnist_cnn.py --cnn=C-8-3-1-same,C-8-3-1-same,M-3-2,C-16-3-1-same,C-16-3-1-same,M-3-2,F,H-200

Epoch 1/10 accuracy: 0.8549 - loss: 0.4564 - val_accuracy: 0.9836 - val_loss: 0.0529

Epoch 2/10 accuracy: 0.9809 - loss: 0.0610 - val_accuracy: 0.9830 - val_loss: 0.0527

Epoch 3/10 accuracy: 0.9878 - loss: 0.0406 - val_accuracy: 0.9902 - val_loss: 0.0303

Epoch 4/10 accuracy: 0.9905 - loss: 0.0309 - val_accuracy: 0.9872 - val_loss: 0.0444

Epoch 5/10 accuracy: 0.9916 - loss: 0.0247 - val_accuracy: 0.9918 - val_loss: 0.0286

Epoch 6/10 accuracy: 0.9930 - loss: 0.0214 - val_accuracy: 0.9924 - val_loss: 0.0286

Epoch 7/10 accuracy: 0.9941 - loss: 0.0184 - val_accuracy: 0.9910 - val_loss: 0.0318

Epoch 8/10 accuracy: 0.9955 - loss: 0.0135 - val_accuracy: 0.9944 - val_loss: 0.0236

Epoch 9/10 accuracy: 0.9963 - loss: 0.0116 - val_accuracy: 0.9928 - val_loss: 0.0262

Epoch 10/10 accuracy: 0.9953 - loss: 0.0126 - val_accuracy: 0.9916 - val_loss: 0.0309

python3 mnist_cnn.py --cnn=CB-8-3-1-same,CB-8-3-1-same,M-3-2,CB-16-3-1-same,CB-16-3-1-same,M-3-2,F,H-200

Epoch 1/10 accuracy: 0.8951 - loss: 0.3258 - val_accuracy: 0.9868 - val_loss: 0.0435

Epoch 2/10 accuracy: 0.9834 - loss: 0.0514 - val_accuracy: 0.9866 - val_loss: 0.0479

Epoch 3/10 accuracy: 0.9879 - loss: 0.0401 - val_accuracy: 0.9898 - val_loss: 0.0351

Epoch 4/10 accuracy: 0.9904 - loss: 0.0297 - val_accuracy: 0.9886 - val_loss: 0.0441

Epoch 5/10 accuracy: 0.9918 - loss: 0.0245 - val_accuracy: 0.9940 - val_loss: 0.0233

Epoch 6/10 accuracy: 0.9937 - loss: 0.0195 - val_accuracy: 0.9898 - val_loss: 0.0336

Epoch 7/10 accuracy: 0.9934 - loss: 0.0203 - val_accuracy: 0.9934 - val_loss: 0.0229

Epoch 8/10 accuracy: 0.9951 - loss: 0.0139 - val_accuracy: 0.9938 - val_loss: 0.0260

Epoch 9/10 accuracy: 0.9958 - loss: 0.0127 - val_accuracy: 0.9938 - val_loss: 0.0248

Epoch 10/10 accuracy: 0.9954 - loss: 0.0132 - val_accuracy: 0.9934 - val_loss: 0.0217

torch_dataset

Deadline: Mar 26, 22:00 2 points

In this assignment you will familiarize yourselves with torch.utils.data, which is the PyTorch way of constructing training datasets. If you want, you can read the Dataset and DataLoaders tutorial.

The goal of this assignment is to start with the torch_dataset.py template and implement a simple image augmentation preprocessing.
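A map-style dataset only needs __len__ and __getitem__, and augmentation is typically applied inside __getitem__, so that every epoch sees a freshly augmented variant of each image. The sketch below mimics that protocol in plain Python without importing torch; in PyTorch you would subclass torch.utils.data.Dataset and wrap it in a DataLoader. The class name, the list-of-rows image layout, and the choice of a random horizontal flip are all illustrative assumptions; the augmentations requested by the template may differ.

```python
import random


class AugmentedDataset:
    """Map-style dataset (the __len__/__getitem__ protocol of torch.utils.data.Dataset)
    applying an optional transform to every image on access."""

    def __init__(self, images, labels, transform=None):
        self.images, self.labels, self.transform = images, labels, transform

    def __len__(self):
        return len(self.images)

    def __getitem__(self, index):
        image = self.images[index]
        if self.transform is not None:
            image = self.transform(image)  # re-run on every access, so results vary per epoch
        return image, self.labels[index]


def random_horizontal_flip(image, probability=0.5, rng=random):
    """Flip an image stored as a list of rows (each row a list of pixels) left-to-right."""
    if rng.random() < probability:
        return [row[::-1] for row in image]
    return image


dataset = AugmentedDataset([[[1, 2], [3, 4]]], [7], transform=random_horizontal_flip)
```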

Note that your results may be slightly different, depending on your CPU type and whether you use a GPU.

python3 torch_dataset.py --epochs=1 --batch_size=100

accuracy: 0.1297 - loss: 2.2519 - val_accuracy: 0.2710 - val_loss: 1.9796

python3 torch_dataset.py --epochs=1 --batch_size=50 --augment

accuracy: 0.1354 - loss: 2.2565 - val_accuracy: 0.2690 - val_loss: 1.9889

mnist_multiple

Deadline: Mar 26, 22:00 3 points

In this assignment you will implement a model with multiple inputs and outputs. Start with the mnist_multiple.py template and:

- The goal is to create a model which, given two input MNIST images, predicts whether the digit on the first one is greater than the digit on the second one.

- We perform this comparison in two different ways:

  - first by directly predicting the comparison by the network (direct comparison),

  - then by first classifying the images into digits and then comparing these predictions (indirect comparison).

- The model has four outputs:

  - direct comparison whether the first digit is greater than the second one,

  - digit classification for the first image,

  - digit classification for the second image,

  - indirect comparison comparing the digits predicted by the above two outputs.

- You need to implement:

  - the model, using multiple inputs, outputs, losses and metrics;

  - construction of two-image dataset examples using regular MNIST data via the PyTorch datasets.
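The targets of a two-image example and the indirect comparison can be sketched in plain Python as follows. Pairing consecutive examples (0 with 1, 2 with 3, and so on) is only an assumption made for illustration, as are the function names; the template may construct the pairs differently.

```python
def make_pairs(images, labels):
    """Pair consecutive examples into two-image examples.

    Returns ((image1, image2), (direct_target, label1, label2)) tuples, where the
    direct target is 1 when the first digit is greater than the second one.
    A trailing unpaired example is dropped.
    """
    examples = []
    for i in range(0, len(images) - 1, 2):
        label1, label2 = labels[i], labels[i + 1]
        examples.append(((images[i], images[i + 1]), (int(label1 > label2), label1, label2)))
    return examples


def argmax(distribution):
    return max(range(len(distribution)), key=distribution.__getitem__)


def indirect_comparison(digit1_probs, digit2_probs):
    """Compare the digits predicted by the two classification outputs."""
    return int(argmax(digit1_probs) > argmax(digit2_probs))
```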

Note that your results may be slightly different, depending on your CPU type and whether you use a GPU.

python3 mnist_multiple.py --epochs=1 --batch_size=50

direct_comparison_accuracy: 0.7993 - indirect_comparison_accuracy: 0.8930 - loss: 1.6710 - val_direct_comparison_accuracy: 0.9508 - val_indirect_comparison_accuracy: 0.9836 - val_loss: 0.2984

python3 mnist_multiple.py --epochs=1 --batch_size=100

direct_comparison_accuracy: 0.7680 - indirect_comparison_accuracy: 0.8637 - loss: 2.1429 - val_direct_comparison_accuracy: 0.9288 - val_indirect_comparison_accuracy: 0.9772 - val_loss: 0.4157

cifar_competition

Deadline: Mar 26, 22:00 4 points+5 bonus

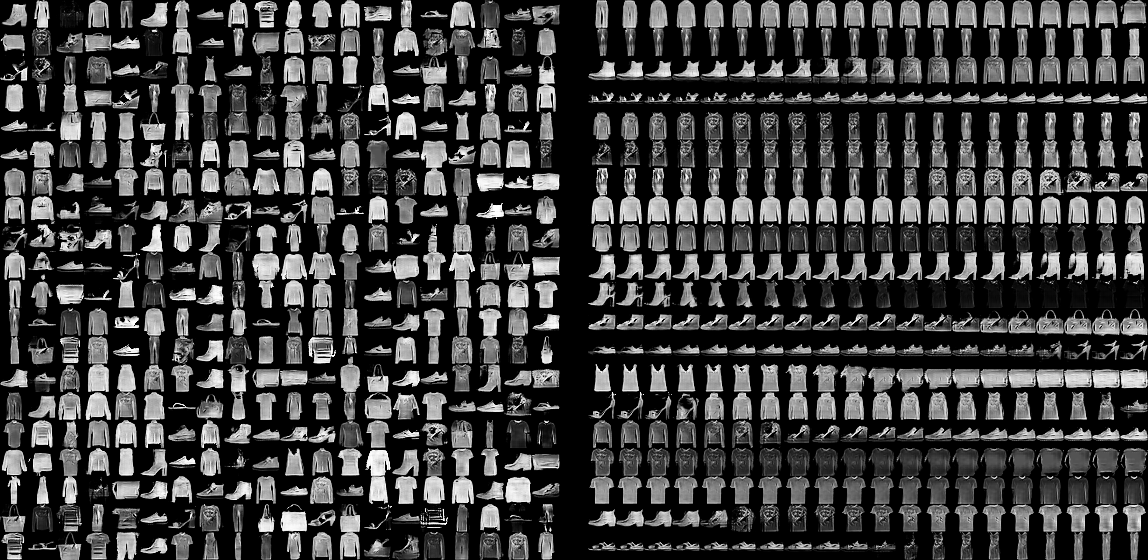

The goal of this assignment is to devise the best possible model for CIFAR-10. You can load the data using the cifar10.py module. Note that the test set is different from that of the official CIFAR-10.

The task is a competition. Everyone who submits a solution achieving at least 70% test set accuracy gets 4 points; the remaining 5 bonus points are distributed depending on the relative ordering of your solutions.

Note that my solutions usually need to achieve around 85% on the development set to score 70% on the test set.

You may want to start with the cifar_competition.py template which generates the test set annotation in the required format.

Note that in this assignment, you cannot use the keras.applications module.

cnn_manual

Deadline: Apr 02, 22:00 3 points Slides

To pass this assignment, you need to manually implement the forward and backward pass through a 2D convolutional layer. Start with the cnn_manual.py template, which constructs a series of 2D convolutional layers with ReLU activation and valid padding, specified in the args.cnn option.

The args.cnn contains comma-separated layer specifications in the format filters-kernel_size-stride.

Of course, you cannot use any PyTorch convolutional operation (instead, implement the forward and backward pass using matrix multiplication and other operations), nor .backward() for gradient computation.

To make debugging easier, the template supports a --verify option, which allows comparing the forward pass and the three gradients you compute in the backward pass to the correct values.
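For intuition about what the forward pass computes, here is a deliberately naive version in plain Python for a single image, assuming a channels-last input of shape [H][W][C] and a kernel of shape [K][K][C][F]; these layouts and the function name are assumptions, not the template's. A real solution would replace the innermost loops by multiplying strided input slices with kernel[dy][dx] as a matrix for every kernel offset (dy, dx); the backward pass is not shown.

```python
def conv2d_forward(inputs, kernel, bias, stride):
    """Valid-padding 2D convolution followed by ReLU for one image.

    inputs: [H][W][C] nested lists; kernel: [K][K][C][F]; bias: [F].
    Returns [H'][W'][F] with H' = (H - K) // stride + 1 (likewise W').
    """
    height, width, channels = len(inputs), len(inputs[0]), len(inputs[0][0])
    k, filters = len(kernel), len(bias)
    out_h, out_w = (height - k) // stride + 1, (width - k) // stride + 1
    output = [[[0.0] * filters for _ in range(out_w)] for _ in range(out_h)]
    for y in range(out_h):
        for x in range(out_w):
            for f in range(filters):
                total = bias[f]
                # Sum over the K x K window and all input channels.
                for dy in range(k):
                    for dx in range(k):
                        for c in range(channels):
                            total += inputs[y * stride + dy][x * stride + dx][c] * kernel[dy][dx][c][f]
                output[y][x][f] = max(total, 0.0)  # ReLU
    return output
```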

Note that your results may be slightly different, depending on your CPU type and whether you use a GPU.

python3 cnn_manual.py --epochs=1 --cnn=5-1-1

Dev accuracy after epoch 1 is 90.42

Test accuracy after epoch 1 is 88.55

python3 cnn_manual.py --epochs=1 --cnn=5-3-1

Dev accuracy after epoch 1 is 92.26

Test accuracy after epoch 1 is 90.59

python3 cnn_manual.py --epochs=1 --cnn=5-3-2

Dev accuracy after epoch 1 is 90.82

Test accuracy after epoch 1 is 88.78

python3 cnn_manual.py --epochs=1 --cnn=5-3-2,10-3-2

Dev accuracy after epoch 1 is 92.92

Test accuracy after epoch 1 is 90.97

cags_classification

Deadline: Apr 02, 22:00 4 points+5 bonus

The goal of this assignment is to use a pretrained model, for example the EfficientNetV2-B0, to achieve the best accuracy in CAGS classification.

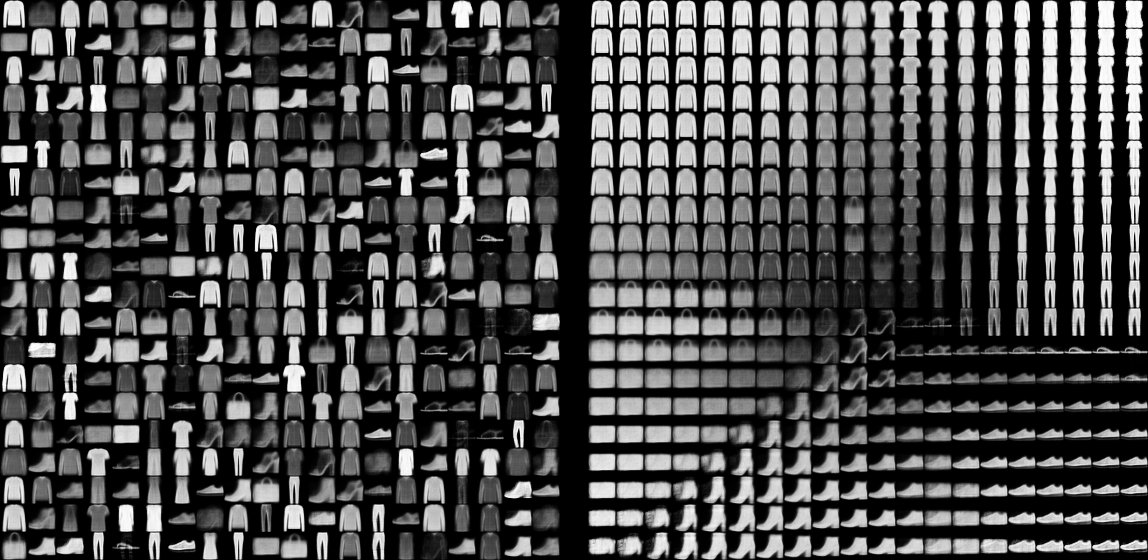

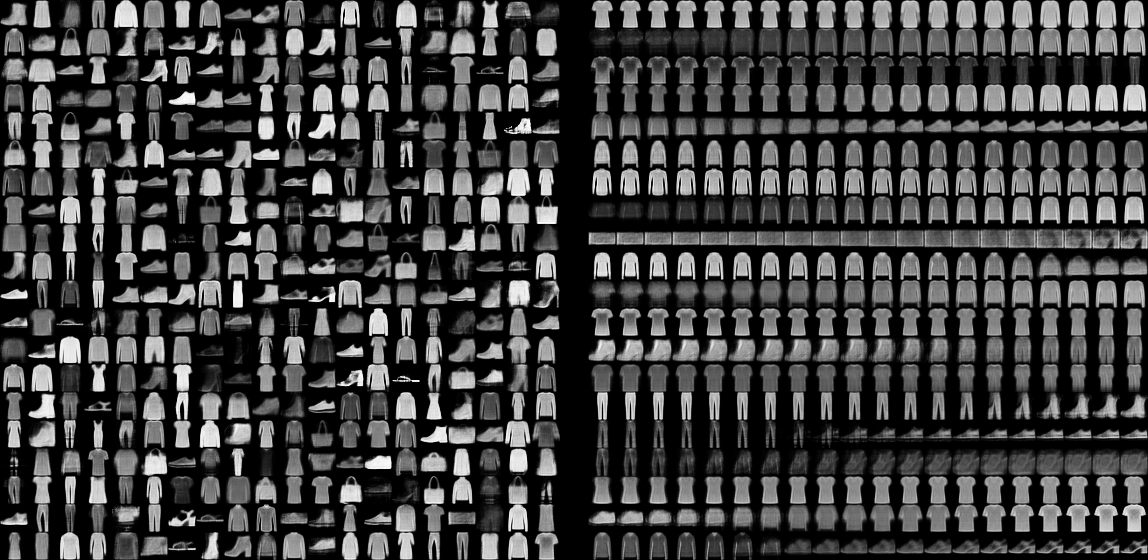

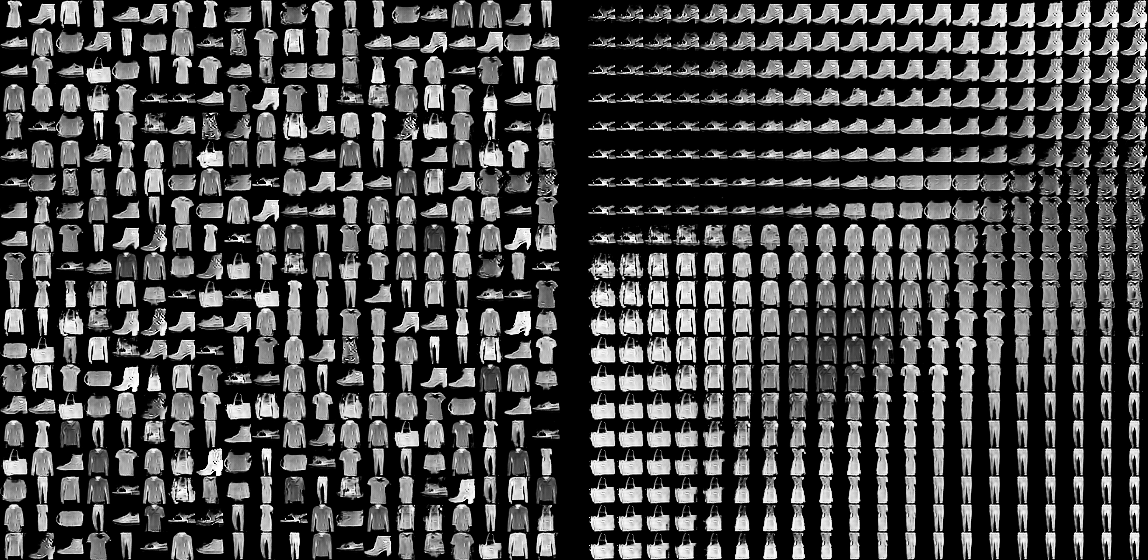

The CAGS dataset consists of images of cats and dogs of size , each classified in one of the 34 breeds and each containing a mask indicating the presence of the animal. To load the dataset, use the cags_dataset.py module.

To load the EfficientNetV2-B0, use the keras.applications.EfficientNetV2B0 function, which constructs a Keras model, downloading the weights automatically. However, you can use any model from keras.applications in this assignment.

An example performing classification of given images is available in image_classification.py.

A note on finetuning: each keras.layers.Layer has a mutable trainable property indicating whether its variables should be updated; however, after changing it, you need to call .compile again (or otherwise make sure the list of trainable variables for the optimizer is updated). Furthermore, the training argument passed when calling a layer decides whether the layer is executed in the training regime (neurons get dropped in dropout, batch normalization computes estimates on the batch) or in the inference regime. There is one exception, though: if trainable == False on a batch normalization layer, it runs in the inference regime even when training == True.

The task is a competition. Everyone who submits a solution achieving at least 93% test set accuracy gets 4 points; the remaining 5 bonus points are distributed depending on the relative ordering of your solutions.

You may want to start with the cags_classification.py template which generates the test set annotation in the required format.

cags_segmentation

Deadline: Apr 02, 22:00 4 points+5 bonus

The goal of this assignment is to use a pretrained model, for example the EfficientNetV2-B0, to achieve the best image segmentation IoU score on the CAGS dataset. The dataset and the EfficientNetV2-B0 are described in the cags_classification assignment. Nevertheless, you can again use any model from keras.applications in this assignment.

A mask is evaluated using the intersection over union (IoU) metric, which is the intersection of the gold and predicted mask divided by their union, and the whole test set score is the average of its masks' IoU. A Keras-compatible metric is implemented by the class MaskIoUMetric of the cags_dataset.py module, which can also evaluate your predictions (either by running with the --task=segmentation --evaluate=path arguments, or by using its evaluate_segmentation_file method).
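The metric described above amounts to the following plain-Python computation on binary masks flattened to 0/1 sequences. This is only an illustration of the definition, not the MaskIoUMetric implementation; in particular, scoring two empty masks as 1.0 is an assumption made here to avoid division by zero.

```python
def mask_iou(gold, predicted):
    """IoU of two binary masks given as equally long flat sequences of 0/1."""
    intersection = sum(1 for g, p in zip(gold, predicted) if g and p)
    union = sum(1 for g, p in zip(gold, predicted) if g or p)
    return intersection / union if union else 1.0  # assumption: two empty masks match


def average_iou(gold_masks, predicted_masks):
    """Test set score: the mean of the per-mask IoUs."""
    return sum(map(mask_iou, gold_masks, predicted_masks)) / len(gold_masks)
```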

The task is a competition. Everyone who submits a solution achieving at least 87% test set IoU gets 4 points; the remaining 5 bonus points are distributed depending on the relative ordering of your solutions.

You may want to start with the cags_segmentation.py template, which generates the test set annotation in the required format – each mask should be encoded on a single line as a space separated sequence of integers indicating the length of alternating runs of zeros and ones.
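The run-length encoding can be illustrated in plain Python as below. The template already produces the required format; this sketch assumes the runs start with zeros (so a mask starting with a one gets a leading run of length 0) and that the mask has been flattened beforehand, both of which should be checked against the template.

```python
def encode_mask(mask):
    """Lengths of alternating runs of zeros and ones, starting with zeros."""
    runs, current, length = [], 0, 0
    for value in mask:
        if value == current:
            length += 1
        else:
            runs.append(length)  # close the current run (possibly of length 0)
            current, length = value, 1
    runs.append(length)
    return " ".join(map(str, runs))


def decode_mask(line):
    """Inverse of encode_mask."""
    mask, value = [], 0
    for run in map(int, line.split()):
        mask.extend([value] * run)
        value = 1 - value
    return mask
```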

bboxes_utils

Deadline: Apr 09, 22:00 2 points

This is a preparatory assignment for svhn_competition. The goal is to implement several bounding box manipulation routines in the bboxes_utils.py module. Notably, you need to implement the following methods:

- bboxes_to_rcnn: convert given bounding boxes to an R-CNN-like representation relative to the given anchors;

- bboxes_from_rcnn: convert R-CNN-like representations relative to given anchors back to bounding boxes;

- bboxes_training: given a list of anchors and gold objects, assign gold objects to anchors and generate suitable training data (the exact algorithm is described in the template).
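A frequently used R-CNN parametrization encodes a box relative to an anchor by the offset of their centers (divided by the anchor size) and the logarithm of their size ratios. The following single-box sketch in plain Python assumes that parametrization and the [TOP, LEFT, BOTTOM, RIGHT] format; the helper names are hypothetical, and the exact representation required by the template is the one described in bboxes_utils.py.

```python
import math


def _center_size(box):
    """Convert [TOP, LEFT, BOTTOM, RIGHT] to (y_center, x_center, height, width)."""
    top, left, bottom, right = box
    return (top + bottom) / 2, (left + right) / 2, bottom - top, right - left


def bbox_to_rcnn(anchor, bbox):
    """Encode one box relative to an anchor."""
    ay, ax, ah, aw = _center_size(anchor)
    by, bx, bh, bw = _center_size(bbox)
    return ((by - ay) / ah, (bx - ax) / aw, math.log(bh / ah), math.log(bw / aw))


def bbox_from_rcnn(anchor, rcnn):
    """Decode the representation produced by bbox_to_rcnn back to a box."""
    ay, ax, ah, aw = _center_size(anchor)
    dy, dx, dh, dw = rcnn
    y, x = ay + dy * ah, ax + dx * aw
    h, w = ah * math.exp(dh), aw * math.exp(dw)
    return (y - h / 2, x - w / 2, y + h / 2, x + w / 2)
```

The round trip bbox_from_rcnn(anchor, bbox_to_rcnn(anchor, bbox)) recovers the original box up to floating-point rounding, which is a handy sanity check.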

The bboxes_utils.py module contains simple unit tests, which are run when the module is executed; you can use them to check the validity of your implementation. Note that the template does not contain type annotations, because the Python typing system is not flexible enough to describe the tensor shape changes.

When submitting to ReCodEx, the method main is executed, returning the implemented bboxes_to_rcnn, bboxes_from_rcnn and bboxes_training methods. These methods are then executed and compared to the reference implementation.

svhn_competition

Deadline: Apr 09, 22:00 5 points+5 bonus

The goal of this assignment is to implement a system performing object recognition, optionally utilizing the pretrained EfficientNetV2-B0 backbone (or any other model from keras.applications).

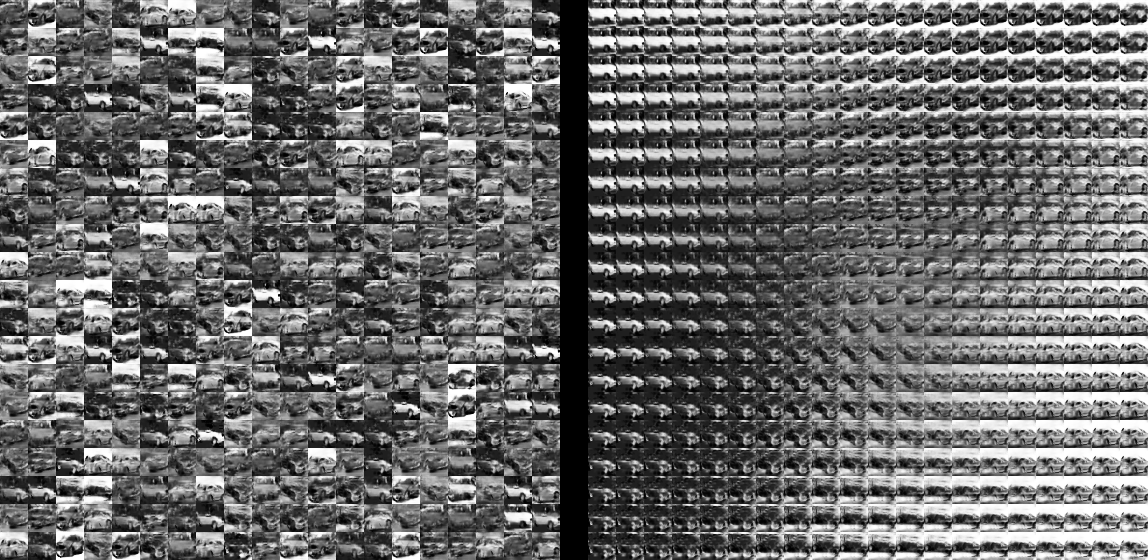

The Street View House Numbers (SVHN) dataset annotates for every photo all digits appearing on it, including their bounding boxes. The dataset can be loaded using the svhn_dataset.py module. Similar to the CAGS dataset, the train/dev/test sets are PyTorch torch.utils.data.Datasets, and every element is a dictionary with the following keys:

- "image": a square 3-channel image stored using a PyTorch tensor of type torch.uint8,

- "classes": a 1D np.ndarray with all digit labels appearing in the image,

- "bboxes": a [num_digits, 4] 2D np.ndarray with bounding boxes of every digit in the image, each represented as [TOP, LEFT, BOTTOM, RIGHT].

Each test set image annotation consists of a sequence of space-separated five-tuples label top left bottom right, and the annotation is considered correct if exactly the gold digits are predicted, each with an IoU of at least 0.5.

The whole test set score is then the prediction accuracy of individual images.
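A minimal sketch of the two pieces this evaluation relies on: IoU for boxes in the [TOP, LEFT, BOTTOM, RIGHT] layout used by the dataset, and serializing predictions into the five-tuple line format. The function names are hypothetical, and whether coordinates should be written as integers or floats is not specified here; the evaluation in svhn_dataset.py is authoritative.

```python
def iou(box_a, box_b):
    """Intersection over union of two boxes given as [top, left, bottom, right]."""
    top, left = max(box_a[0], box_b[0]), max(box_a[1], box_b[1])
    bottom, right = min(box_a[2], box_b[2]), min(box_a[3], box_b[3])
    intersection = max(0, bottom - top) * max(0, right - left)
    area_a = (box_a[2] - box_a[0]) * (box_a[3] - box_a[1])
    area_b = (box_b[2] - box_b[0]) * (box_b[3] - box_b[1])
    union = area_a + area_b - intersection
    return intersection / union if union > 0 else 0.0


def format_annotation(labels, boxes):
    """Serialize predictions as space-separated 'label top left bottom right' tuples."""
    return " ".join(
        " ".join(str(value) for value in (label, *box))
        for label, box in zip(labels, boxes))
```

For instance, two 10×10 boxes shifted horizontally by half their width have an IoU of 50 / 150 = 1/3, well below the 0.5 threshold.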

You can again evaluate your predictions using the svhn_dataset.py module, either by running it with the --evaluate=path argument, or using its evaluate_file method.

The task is a competition. Everyone who submits a solution achieving at least 20% test set accuracy gets 5 points; the remaining 5 bonus points are distributed depending on the relative ordering of your solutions. Note that I usually need at least 35% development set accuracy to achieve the required test set performance.

You should start with the svhn_competition.py template, which generates the test set annotation in the required format.

A baseline solution can use a RetinaNet-like single-stage detector, using only a single level of convolutional features (no FPN) with single-scale and single-aspect anchors. Focal loss is available as keras.losses.BinaryFocalCrossentropy and non-maximum suppression as torchvision.ops.nms or torchvision.ops.batched_nms.
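For orientation, the sketch below generates one square anchor per cell of a single feature map (single scale, single aspect ratio) and implements greedy non-maximum suppression in NumPy, mirroring what torchvision.ops.nms computes: keep the highest-scoring box, discard the remaining boxes whose IoU with it exceeds the threshold, and repeat. The parameters feature_size, stride and anchor_size are hypothetical illustration values, not settings from the template.

```python
import numpy as np


def make_anchors(feature_size, stride, anchor_size):
    """One square anchor per feature-map cell, as [top, left, bottom, right]."""
    centers = (np.arange(feature_size) + 0.5) * stride  # cell centers in pixels
    cy, cx = np.meshgrid(centers, centers, indexing="ij")
    cy, cx = cy.ravel(), cx.ravel()
    half = anchor_size / 2
    return np.stack([cy - half, cx - half, cy + half, cx + half], axis=-1)


def nms(boxes, scores, iou_threshold):
    """Greedy NMS; returns kept indices in decreasing order of score."""
    boxes, scores = np.asarray(boxes, float), np.asarray(scores, float)
    areas = (boxes[:, 2] - boxes[:, 0]) * (boxes[:, 3] - boxes[:, 1])
    order = np.argsort(-scores, kind="stable")
    keep = []
    while order.size > 0:
        best, rest = order[0], order[1:]
        keep.append(int(best))
        # Intersection of the best box with every remaining box.
        top = np.maximum(boxes[best, 0], boxes[rest, 0])
        left = np.maximum(boxes[best, 1], boxes[rest, 1])
        bottom = np.minimum(boxes[best, 2], boxes[rest, 2])
        right = np.minimum(boxes[best, 3], boxes[rest, 3])
        inter = np.clip(bottom - top, 0, None) * np.clip(right - left, 0, None)
        ious = inter / (areas[best] + areas[rest] - inter)
        order = rest[ious <= iou_threshold]  # drop boxes overlapping too much
    return keep
```

torchvision.ops.batched_nms additionally takes per-box class indices and performs the suppression independently for each class, so that overlapping boxes of different digits do not suppress each other.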

3d_recognition

Deadline: Apr 16, 22:00 3 points+4 bonus

Your goal in this assignment is to perform 3D object recognition. The input is a voxelized representation of an object, stored as a 3D grid of either empty or occupied voxels, and your goal is to classify the object into one of 10 classes. The data is available in two resolutions, either as 20×20×20 data or 32×32×32 data. To load the dataset, use the modelnet.py module.

The official dataset offers only train and test sets, with the test set having a different distribution of labels. Our dataset also contains a development set, which has nearly the same label distribution as the test set.

If you want, it is possible to use any model from keras.applications in this assignment; however, the only way I know how to utilize such a pre-trained model is to render the objects to a set of 2D images and classify them instead.
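As a rough illustration of the rendering idea, the sketch below turns a voxel grid into 2D images with simple orthographic projections: binary silhouettes along each axis, plus a depth map recording the first occupied voxel along a viewing axis. It assumes a [D, H, W] occupancy array (squeeze any trailing channel dimension first), and the function names are hypothetical; a real pipeline would still need to resize the images and replicate them to three channels before passing them to a keras.applications model.

```python
import numpy as np


def render_silhouettes(voxels):
    """Project a [D, H, W] occupancy grid along each axis into three binary images."""
    grid = np.asarray(voxels).astype(bool)
    # A pixel is set if any voxel along the projection ray is occupied.
    return [grid.any(axis=axis).astype(np.uint8) for axis in range(3)]


def render_depth(voxels, axis=0):
    """Depth map along `axis`: index of the first occupied voxel, or -1 for empty rays."""
    grid = np.asarray(voxels).astype(bool)
    first = grid.argmax(axis=axis)  # argmax returns the first True along the axis
    return np.where(grid.any(axis=axis), first, -1)
```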

The task is a competition. Everyone who submits a solution achieving at least 88% test set accuracy gets 3 points; the remaining 4 bonus points are distributed depending on the relative ordering of your solutions.

You can start with the 3d_recognition.py template, which, among other things, generates test set annotations in the required format.

sequence_classification

Deadline: Apr 23, 22:00 2 points

The goal of this assignment is to introduce recurrent neural networks, show their convergence speed, and illustrate the exploding gradient issue. The network should process sequences of 50 small integers and compute the parity for each prefix of the sequence. The inputs are either 0/1, or vectors with a one-hot representation of a small integer.
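The targets can be illustrated with a few lines of Python. This sketch assumes that the parity is that of the running sum of the elements (for 0/1 inputs this is exactly the parity of the number of ones seen so far), and that the one-hot variant encodes integers from range(dim); the function names are hypothetical.

```python
from itertools import accumulate


def prefix_parity(sequence):
    """Parity (0 = even, 1 = odd) of the running sum for every prefix."""
    return [total % 2 for total in accumulate(sequence)]


def one_hot(sequence, dim):
    """One-hot vectors for integers assumed to lie in range(dim)."""
    return [[1 if i == value else 0 for i in range(dim)] for value in sequence]
```

Since every target depends on the whole prefix, the RNN has to carry a single bit of state across all 50 steps, which is what makes the task a good probe of convergence speed.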

Your goal is to modify the sequence_classification.py template and implement the following:

- Use the specified RNN type (SimpleRNN, GRU, and LSTM) and dimensionality.

- Process the sequence using the required RNN.

- Use an additional hidden layer on the RNN outputs if requested.

- Implement gradient clipping if requested.
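Gradient clipping by norm can be sketched framework-independently: if the combined L2 norm of all gradients exceeds a threshold, every gradient is rescaled by the same factor. The sketch below follows the same formula as PyTorch's torch.nn.utils.clip_grad_norm_ (including its 1e-6 epsilon); whether the template clips each variable separately (Keras clipnorm) or globally (Keras global_clipnorm) is not stated here, so treat global clipping as an assumption.

```python
import numpy as np


def clip_by_global_norm(gradients, max_norm):
    """Rescale all gradients jointly so their combined L2 norm is at most max_norm."""
    total_norm = np.sqrt(sum(float(np.sum(np.square(g))) for g in gradients))
    # Gradients with a small norm are left untouched (scale capped at 1).
    scale = min(1.0, max_norm / (total_norm + 1e-6))
    return [g * scale for g in gradients], total_norm
```

Rescaling preserves the direction of the update and only limits its length, which is why clipping helps with exploding gradients without biasing the descent direction.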

In addition to submitting the task in ReCodEx, please also run the following variations and observe the results in TensorBoard. Concentrate on how the RNNs converge, their convergence speed, exploding gradient issues, and how gradient clipping helps:

- --rnn=SimpleRNN --sequence_dim=1, --rnn=GRU --sequence_dim=1, --rnn=LSTM --sequence_dim=1

- the same as above but with --sequence_dim=3

- the same as above but with --sequence_dim=10

- --rnn=SimpleRNN --hidden_layer=85 --rnn_dim=30 --sequence_dim=30 and the same with --clip_gradient=1

- the same as above but with --rnn=GRU with and without --clip_gradient=1

- the same as above but with --rnn=LSTM with and without --clip_gradient=1

Note that your results may be slightly different, depending on your CPU type and whether you use a GPU.

python3 sequence_classification.py --train_sequences=1000 --sequence_length=20 --rnn=SimpleRNN --epochs=5

Epoch 1/5 accuracy: 0.4854 - loss: 0.7253 - val_accuracy: 0.5092 - val_loss: 0.6971

Epoch 2/5 accuracy: 0.5101 - loss: 0.6944 - val_accuracy: 0.4990 - val_loss: 0.6914

Epoch 3/5 accuracy: 0.5000 - loss: 0.6904 - val_accuracy: 0.5198 - val_loss: 0.6892

Epoch 4/5 accuracy: 0.5200 - loss: 0.6887 - val_accuracy: 0.5328 - val_loss: 0.6875

Epoch 5/5 accuracy: 0.5326 - loss: 0.6869 - val_accuracy: 0.5362 - val_loss: 0.6857

python3 sequence_classification.py --train_sequences=1000 --sequence_length=20 --rnn=GRU --epochs=5

Epoch 1/5 accuracy: 0.5277 - loss: 0.6925 - val_accuracy: 0.5217 - val_loss: 0.6921

Epoch 2/5 accuracy: 0.5183 - loss: 0.6921 - val_accuracy: 0.5217 - val_loss: 0.6918

Epoch 3/5 accuracy: 0.5185 - loss: 0.6919 - val_accuracy: 0.5217 - val_loss: 0.6914

Epoch 4/5 accuracy: 0.5212 - loss: 0.6914 - val_accuracy: 0.5282 - val_loss: 0.6910

Epoch 5/5 accuracy: 0.5320 - loss: 0.6904 - val_accuracy: 0.5355 - val_loss: 0.6905

python3 sequence_classification.py --train_sequences=1000 --sequence_length=20 --rnn=LSTM --epochs=5

Epoch 1/5 accuracy: 0.5359 - loss: 0.6926 - val_accuracy: 0.5361 - val_loss: 0.6925

Epoch 2/5 accuracy: 0.5358 - loss: 0.6925 - val_accuracy: 0.5333 - val_loss: 0.6923

Epoch 3/5 accuracy: 0.5370 - loss: 0.6923 - val_accuracy: 0.5369 - val_loss: 0.6920

Epoch 4/5 accuracy: 0.5342 - loss: 0.6919 - val_accuracy: 0.5366 - val_loss: 0.6917

Epoch 5/5 accuracy: 0.5378 - loss: 0.6915 - val_accuracy: 0.5444 - val_loss: 0.6914

python3 sequence_classification.py --train_sequences=1000 --sequence_length=20 --rnn=LSTM --epochs=5 --hidden_layer=50

Epoch 1/5 accuracy: 0.5377 - loss: 0.6923 - val_accuracy: 0.5414 - val_loss: 0.6911

Epoch 2/5 accuracy: 0.5465 - loss: 0.6902 - val_accuracy: 0.5577 - val_loss: 0.6878

Epoch 3/5 accuracy: 0.5600 - loss: 0.6862 - val_accuracy: 0.5450 - val_loss: 0.6811

Epoch 4/5 accuracy: 0.5491 - loss: 0.6783 - val_accuracy: 0.5590 - val_loss: 0.6707

Epoch 5/5 accuracy: 0.5539 - loss: 0.6678 - val_accuracy: 0.5433 - val_loss: 0.6591

python3 sequence_classification.py --train_sequences=1000 --sequence_length=20 --rnn=LSTM --epochs=5 --hidden_layer=50 --clip_gradient=0.01

Epoch 1/5 accuracy: 0.5421 - loss: 0.6923 - val_accuracy: 0.5409 - val_loss: 0.6910

Epoch 2/5 accuracy: 0.5504 - loss: 0.6900 - val_accuracy: 0.5511 - val_loss: 0.6875

Epoch 3/5 accuracy: 0.5566 - loss: 0.6860 - val_accuracy: 0.5494 - val_loss: 0.6816

Epoch 4/5 accuracy: 0.5504 - loss: 0.6788 - val_accuracy: 0.5398 - val_loss: 0.6721

Epoch 5/5 accuracy: 0.5539 - loss: 0.6699 - val_accuracy: 0.5494 - val_loss: 0.6624

Note that your results may be slightly different, depending on your CPU type and whether you use a GPU.

python3 sequence_classification.py --rnn=SimpleRNN --epochs=5

Epoch 1/5 accuracy: 0.4984 - loss: 0.7004 - val_accuracy: 0.5223 - val_loss: 0.6884

Epoch 2/5 accuracy: 0.5198 - loss: 0.6862 - val_accuracy: 0.5117 - val_loss: 0.6794

Epoch 3/5 accuracy: 0.5132 - loss: 0.6784 - val_accuracy: 0.5121 - val_loss: 0.6732

Epoch 4/5 accuracy: 0.5160 - loss: 0.6723 - val_accuracy: 0.5191 - val_loss: 0.6683

Epoch 5/5 accuracy: 0.5235 - loss: 0.6680 - val_accuracy: 0.5276 - val_loss: 0.6639

python3 sequence_classification.py --rnn=GRU --epochs=5

Epoch 1/5 accuracy: 0.5109 - loss: 0.6929 - val_accuracy: 0.5128 - val_loss: 0.6915

Epoch 2/5 accuracy: 0.5174 - loss: 0.6894 - val_accuracy: 0.5155 - val_loss: 0.6785

Epoch 3/5 accuracy: 0.5446 - loss: 0.6630 - val_accuracy: 0.9538 - val_loss: 0.2142

Epoch 4/5 accuracy: 0.9812 - loss: 0.1270 - val_accuracy: 0.9987 - val_loss: 0.0304

Epoch 5/5 accuracy: 0.9985 - loss: 0.0270 - val_accuracy: 0.9995 - val_loss: 0.0135

python3 sequence_classification.py --rnn=LSTM --epochs=5

Epoch 1/5 accuracy: 0.5131 - loss: 0.6930 - val_accuracy: 0.5187 - val_loss: 0.6918

Epoch 2/5 accuracy: 0.5187 - loss: 0.6892 - val_accuracy: 0.5340 - val_loss: 0.6760

Epoch 3/5 accuracy: 0.6401 - loss: 0.5744 - val_accuracy: 1.0000 - val_loss: 0.0845

Epoch 4/5 accuracy: 1.0000 - loss: 0.0585 - val_accuracy: 1.0000 - val_loss: 0.0194

Epoch 5/5 accuracy: 1.0000 - loss: 0.0154 - val_accuracy: 1.0000 - val_loss: 0.0082

python3 sequence_classification.py --rnn=LSTM --epochs=5 --hidden_layer=85

Epoch 1/5 accuracy: 0.5151 - loss: 0.6888 - val_accuracy: 0.5323 - val_loss: 0.6571

Epoch 2/5 accuracy: 0.5387 - loss: 0.6497 - val_accuracy: 0.5575 - val_loss: 0.6321

Epoch 3/5 accuracy: 0.5570 - loss: 0.6242 - val_accuracy: 0.6199 - val_loss: 0.5854

Epoch 4/5 accuracy: 0.8367 - loss: 0.2854 - val_accuracy: 0.9897 - val_loss: 0.0503

Epoch 5/5 accuracy: 0.9995 - loss: 0.0058 - val_accuracy: 0.9999 - val_loss: 0.0014

python3 sequence_classification.py --rnn=LSTM --epochs=5 --hidden_layer=85 --clip_gradient=1

Epoch 1/5 accuracy: 0.5151 - loss: 0.6888 - val_accuracy: 0.5323 - val_loss: 0.6571

Epoch 2/5 accuracy: 0.5387 - loss: 0.6497 - val_accuracy: 0.5582 - val_loss: 0.6321

Epoch 3/5 accuracy: 0.5576 - loss: 0.6237 - val_accuracy: 0.6542 - val_loss: 0.5625

Epoch 4/5 accuracy: 0.9033 - loss: 0.1909 - val_accuracy: 0.9999 - val_loss: 0.0014

Epoch 5/5 accuracy: 0.9997 - loss: 0.0029 - val_accuracy: 1.0000 - val_loss: 4.4711e-04

tagger_we

Deadline: Apr 23, 22:00 3 points

In this assignment you will create a simple part-of-speech tagger. For training and evaluation, we will use a Czech dataset containing tokenized sentences, each word annotated with its gold lemma and part-of-speech tag. The morpho_dataset.py module (down)loads the dataset and provides mappings between strings and integers.

Your goal is to modify the tagger_we.py template and implement the following:

- Use the specified RNN layer type (GRU and LSTM) and dimensionality.

- Create word embeddings for the training vocabulary.

- Process the sentences using a bidirectional RNN.

- Predict part-of-speech tags. Note that you need to properly handle sentences of different lengths in one batch.
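One way to handle sentences of different lengths is to pad each batch to its longest sentence and carry a mask of the real positions, so that padding is ignored by the loss and the accuracy. The sketch below assumes padding id 0 and builds the mask from sentence lengths; the function names are hypothetical. In PyTorch, torch.nn.utils.rnn.pack_padded_sequence can additionally let the (bidirectional) RNN skip the padded positions, given the same lengths.

```python
import numpy as np


def pad_batch(sentences, pad_id=0):
    """Pad word-id lists to [batch, max_len]; return ids and a mask of real positions."""
    lengths = np.array([len(s) for s in sentences])
    ids = np.full((len(sentences), lengths.max()), pad_id, dtype=np.int64)
    for i, sentence in enumerate(sentences):
        ids[i, :len(sentence)] = sentence
    # Build the mask from lengths, so a real word with id pad_id is still counted.
    mask = np.arange(lengths.max())[None, :] < lengths[:, None]
    return ids, mask


def masked_accuracy(gold_tags, predicted_tags, mask):
    """Tagging accuracy computed only over real (non-padding) positions."""
    correct = (gold_tags == predicted_tags) & mask
    return correct.sum() / mask.sum()
```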

Note that your results may be slightly different, depending on your CPU type and whether you use a GPU.

python3 tagger_we.py --epochs=1 --max_sentences=1000 --rnn=LSTM --rnn_dim=16

Epoch=1/1 3.1s loss=2.3541 accuracy=0.3138 dev_loss=2.0320 dev_accuracy=0.3611

python3 tagger_we.py --epochs=1 --max_sentences=1000 --rnn=GRU --rnn_dim=16

Epoch=1/1 3.2s loss=2.1970 accuracy=0.4233 dev_loss=1.5569 dev_accuracy=0.5121

Note that your results may be slightly different, depending on your CPU type and whether you use a GPU.

python3 tagger_we.py --epochs=5 --max_sentences=5000 --rnn=LSTM --rnn_dim=64

Epoch=1/5 21.1s loss=0.9776 accuracy=0.7080 dev_loss=0.3744 dev_accuracy=0.8814

Epoch=2/5 19.2s loss=0.1060 accuracy=0.9736 dev_loss=0.2947 dev_accuracy=0.9013

Epoch=3/5 19.4s loss=0.0291 accuracy=0.9921 dev_loss=0.2794 dev_accuracy=0.9057

Epoch=4/5 19.7s loss=0.0166 accuracy=0.9960 dev_loss=0.2976 dev_accuracy=0.9015