Institute of Formal and Applied Linguistics

Charles University, Czech Republic

Faculty of Mathematics and Physics

NPFL123 – Dialogue Systems

About

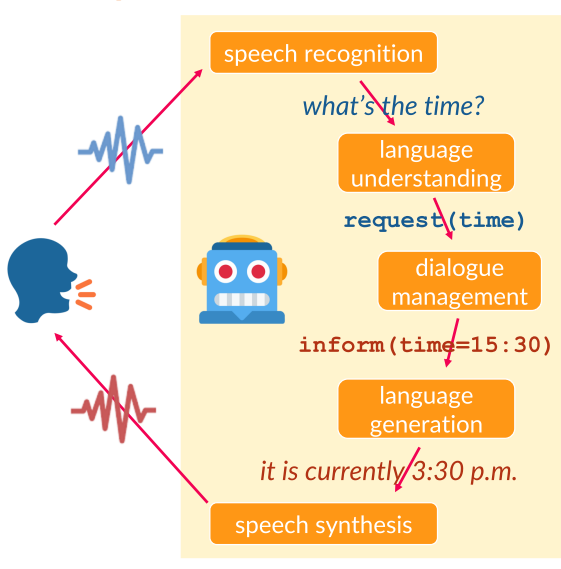

This course is a detailed introduction to the architecture of spoken dialogue systems, voice assistants and conversational systems (chatbots). We will introduce the main components of dialogue systems (speech recognition, language understanding, dialogue management, language generation and speech synthesis) and show alternative approaches to their implementation.

The lab sessions will be dedicated to implementing a simple dialogue system and selected components (via weekly homework assignments). We will use Python and a version of our Dialmonkey framework for this.

Logistics (spring 2026)

News

Language

The course will be taught in English, but we're happy to explain in Czech, too.

Time & Place

In-person lectures and labs take place in the Malá Strana building.

- Lectures: Mon 10:40, room S9 (1st floor)

- Labs: Mon 12:20, room S9

In addition, we plan to stream both lectures and lab instruction over Zoom and make the recordings available on YouTube (under a private link, on request, sent out to enrolled students at the start of the semester). We'll do our best to provide a useful experience.

- Zoom meeting ID: 981 1966 7454

- Password is the SIS code of this course (capitalized)

There's also a Discord you can use to discuss assignments and get news about the course. Invite links will be sent out to all enrolled students by the start of the semester. Please contact us by email if you want to join and haven't got an invite yet.

Passing the course

To pass this course, you will need to take an exam and do lab homeworks. There's a 50% points minimum for the exam and 50% for the homeworks to pass the course. See more details here.

Topics covered

- Dialogue system types & formats (open/closed domain, task/chat-oriented)

- Data for dialogue systems

- Dialogue systems evaluation

- What happens in a dialogue (linguistic background)

- Dialogue system components

  - speech recognition

  - language understanding, dialogue state tracking

  - dialogue management

  - language generation

  - speech synthesis

- Voice assistants & question answering

- Dialogue authoring tools (IBM Watson Assistant/Google Assistant/Amazon Alexa, RAG & LLM)

- Open-domain dialogue (chitchat chatbots, LLMs)

Lectures

PDFs with lecture slides will appear here shortly before each lecture (more details on each lecture are on a separate tab). You can also check out last year's lecture slides.

1. Introduction: Slides, Domain selection, Questions

Literature

A list of recommended literature is on a separate tab.

Lectures

1. Introduction

16 February: Slides, Domain selection, Questions

- What are dialogue systems

- Common usage areas

- Task-oriented vs. non-task-oriented systems

- Closed domain, multi-domain, open domain

- System vs. user initiative in dialogue

- Standard dialogue system components

Homework Assignments

There will be 12 homework assignments, each for a maximum of 10 points. Please see details on grading and deadlines on a separate tab. Note that there's a 50% minimum requirement to pass the course.

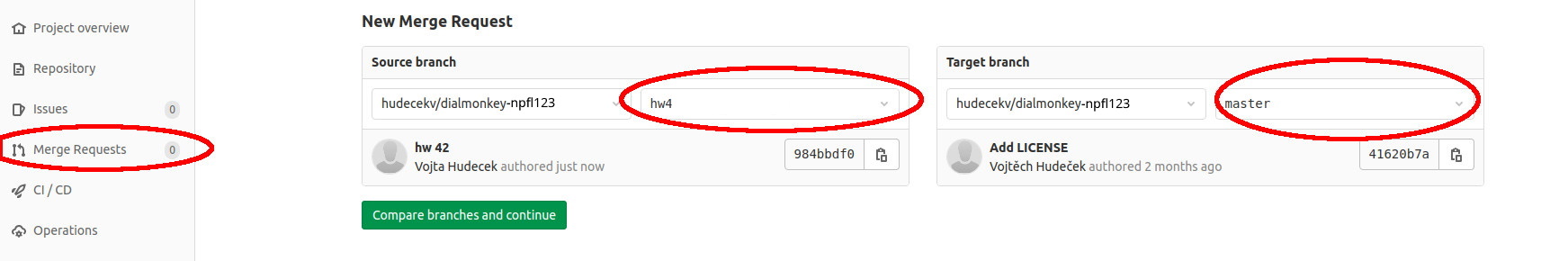

Assignments should be submitted via Git – see instructions on a separate tab. Please take special care to name your Git branches and files the way they're given in the assignments. If our automatic checks don't find your files, you'll lose points!

You should run automatic checks before submitting -- have a look at TESTS.md. Code that crashes during the automatic checks will not get any points. You may fail the checks and still get full points, or ace the checks and get no points (especially if your code games the checks). Note that you should update your checkout since the code for the assignments might be changed during the semester.

All deadlines are 23:59:59 CET/CEST.

Index

1. Domain selection

Presented: 16 February, Deadline: 5 March

You will be building a task-oriented dialogue system in (some of) the homeworks for this course. Your first task is to choose a domain and imagine what your system will look like and how it will work. Since you might later find that you don't like the domain, you are now required to pick two, so that you have more/better ideas later and can choose just one of them for building the system.

Requirements

The required steps for this homework are:

- Pick two domains of your liking that are suitable for building a task-oriented dialogue system. Think of a reasonable backend (see below).

- Write 5 example system-user dialogues for both domains, each at least 5+5 turns long (5 sentences for both user and system). This will make sure that your domain is interesting enough. You do not necessarily have to use English here (but it's easier if we understand the language you're using -- ask us if unsure; Czech & Slovak are perfectly fine).

- Create a flowchart for each of your two domains, with labels such as “ask about phone number”, “reply with phone number”, “something else” etc. It should cover all of your example dialogues. You can use e.g. Mermaid to do this, but the format is not important. Feel free to draw this by hand and take a photo, as long as it's legible.

  - It's OK (even better) if your example dialogues don't all go in a straight line (e.g. some of them might loop or go back to the start).

Files to commit

Please stick to the file naming conventions -- you will lose points if you don't!

- hw1/README.md with short commentary on both domains (ca. 10-15 sentences) -- what they are, what features you'd like to include, and what the backend will be (see examples below).

- hw1/examples-<domain1>.txt, hw1/examples-<domain2>.txt -- 5 example dialogues for each of the domains (as described above). Use a short domain name, ideally with just letters and underscores.

  - Separate different dialogues by empty lines.

  - Distinguish user and system somehow.

  - Please use UTF-8 encoding for these files.

- hw1/flowchart-<domain1>.{pdf,jpg,png}, hw1/flowchart-<domain2>.{pdf,jpg,png} -- the flowcharts for each of the domains, as described above.
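Before committing, you may want to check the example files against the requirements above (5 dialogues per domain, at least 5+5 turns each, empty lines between dialogues, UTF-8). The following is a minimal sketch in Python, assuming a hypothetical convention where every turn is one line prefixed with `U:` (user) or `S:` (system); the assignment only asks you to distinguish the speakers "somehow", and the helper names `parse_dialogues` and `find_problems` are illustrative, not part of the course's test suite.

```python
from pathlib import Path

# Hypothetical convention: one turn per line, prefixed "U:" (user) or "S:" (system).
# The assignment only asks you to distinguish the speakers "somehow".
SPEAKERS = {"U:": "user", "S:": "system"}


def parse_dialogues(text: str) -> list[list[tuple[str, str]]]:
    """Split text into dialogues (separated by empty lines) of (speaker, utterance) turns."""
    dialogues: list[list[tuple[str, str]]] = []
    current: list[tuple[str, str]] = []
    for raw in text.splitlines():
        line = raw.strip()
        if not line:  # an empty line closes the current dialogue
            if current:
                dialogues.append(current)
                current = []
            continue
        speaker = SPEAKERS.get(line[:2])
        if speaker is None:
            raise ValueError(f"line without a speaker prefix: {line!r}")
        current.append((speaker, line[2:].strip()))
    if current:  # the last dialogue need not be followed by an empty line
        dialogues.append(current)
    return dialogues


def find_problems(dialogues, n_dialogues: int = 5, min_turns: int = 5) -> list[str]:
    """Check the '5 dialogues, at least 5+5 turns each' requirement."""
    problems = []
    if len(dialogues) < n_dialogues:
        problems.append(f"{len(dialogues)} dialogues, expected {n_dialogues}")
    for i, dialogue in enumerate(dialogues, start=1):
        for speaker in ("user", "system"):
            turns = sum(1 for who, _ in dialogue if who == speaker)
            if turns < min_turns:
                problems.append(f"dialogue {i}: {turns} {speaker} turns, expected at least {min_turns}")
    return problems


if __name__ == "__main__":
    # Check every hw1/examples-<domain>.txt file; reading as UTF-8 fails on other encodings.
    for path in sorted(Path("hw1").glob("examples-*.txt")):
        dialogues = parse_dialogues(path.read_text(encoding="utf-8"))
        print(path.name, find_problems(dialogues) or "OK")
```

Counting one line as one turn is a simplification; if you put several sentences into one turn, the sentence count from the assignment is what matters.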

See the instructions on submission via Git -- create a branch and a merge request with your changes. Make sure to name your branch hw1 so we can find it easily.

Inspiration

You may choose any domain you like, be it tourist information, information about cultural events/traffic, news, scheduling/agenda, task completion etc. You can take inspiration from stuff presented in the first lecture, or you may choose your own topic.

Since your domain will likely need to be connected to some backend database, you might want to make use of some external public APIs -- feel free to choose from one of these links:

You can of course choose anything else you like as your backend, e.g. portions of Wikidata/DBPedia or other world knowledge DBs, or even a handwritten “toy” database of a meaningful size, which you'll need to write to be able to test your system.
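To give an idea of the handwritten "toy" database option, here is a minimal sketch in Python of a backend with a slot-based lookup. The restaurant domain, the `Restaurant` fields, the made-up entries and the `query` helper are all hypothetical illustrations, not part of Dialmonkey or of the assignment.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Restaurant:
    name: str
    area: str
    food: str
    price: str


# Hypothetical hand-written toy database; all entries are made up.
# A real one should be of a meaningful size so that your dialogues can be tested on it.
RESTAURANTS = [
    Restaurant("Bistro Alpha", "centre", "czech", "cheap"),
    Restaurant("Trattoria Beta", "centre", "italian", "moderate"),
    Restaurant("Pho Gamma", "north", "vietnamese", "cheap"),
    Restaurant("Osteria Delta", "south", "italian", "expensive"),
]


def query(db: list[Restaurant], **constraints: str) -> list[Restaurant]:
    """Return the entries matching all given slot values (slot name -> required value)."""
    return [entry for entry in db
            if all(getattr(entry, slot) == value for slot, value in constraints.items())]


if __name__ == "__main__":
    # e.g. the user asked for Italian food in the centre
    print([r.name for r in query(RESTAURANTS, food="italian", area="centre")])  # ['Trattoria Beta']
```

Keying the lookup on slot names keeps the backend close to how the dialogue system will talk about the domain: whatever slots the user fills in can be passed straight to `query`. An unknown slot name raises `AttributeError`, which is useful for catching typos early.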

Homework Submission Instructions

All homework assignments will be submitted using a Git repository on MFF GitLab.

We provide an easy recipe to set up your repository below:

Creating the repository

- Log into your MFF GitLab account. Your username and password should be the same as in the CAS, see this.

- You'll have a project repository created for you under the teaching/NPFL123/2025 group. The project name will be the same as your CAS username. If you don't see any repository, it might be the first time you've ever logged on to Gitlab. In that case, Ondřej first needs to run a script that creates the repository for you (please let him know on Slack). In any case, you can explore everything in the base repository. Your own repo will be derived from this one.

- Clone your repository.

- Change into the cloned directory and run

git remote show origin

You should see these lines (among others):

* remote origin

Fetch URL: git@gitlab.mff.cuni.cz:teaching/NPFL123/2025/your_username.git

Push URL: git@gitlab.mff.cuni.cz:teaching/NPFL123/2025/your_username.git

- Add the base repository (with our code, for everyone) as your upstream:

git remote add upstream https://gitlab.mff.cuni.cz/teaching/NPFL123/base.git

- You're all set!

Submitting the homework assignment

- Make sure you're on your master branch

git checkout master

- Checkout a new branch -- make sure to name it hwX (e.g. “hw4” or “hw11”) so our automatic checks can find it later!

git checkout -b hwX

- Solve the assignment :)

- Run the automatic tests. Your code doesn't have to pass all the tests, but it shouldn't crash them. If it crashes the tests, you won't get any points or feedback. If you think the problem is the tests and not your code, let us know.

python run_tests.py hwX

- Add new files and commit your changes -- make sure to name your files as required, or you won't pass our automatic checks!

git add hwX/solution.py

git commit -am "commit message"

- Push to your origin remote repository:

git push origin hwX

- Create a Merge request in the web interface. Make sure you create the merge request into the master branch in your own forked repository (not into the upstream).

Merge requests -> New merge request

- Wait a bit till we check your solution, then enjoy your points :)! Please do not delete your hwX branch at this point so we can find it. You can merge the hwX branch into your master branch if you need the code for the following assignments, but please do not delete it completely.

- Once you get your points, you can safely merge your changes into your master branch, if you haven't done it before, and delete the hwX branch.

Updating from the base repository

You might need to update from the upstream base repository every once in a while (most probably before you start implementing each assignment). We'll let you know when we make changes to the base repo.

To update from upstream, do the following:

- Make sure you're on your master branch

git checkout master

- Fetch the changes

git fetch upstream master

- Apply the diff

git merge upstream/master

Exam Question Pool

The exam will have 10 questions, mostly from this pool. Each counts for 10 points. We reserve the right to make slight alterations or use variants of the same questions. Note that all of them are covered by the lectures, and they cover most of the lecture content. In general, none of them requires you to memorize formulas, but you should know the main ideas and principles. See the Grading tab for details on grading.

Introduction

- What's the difference between task-oriented and non-task-oriented systems?

- Describe the difference between closed-domain, multi-domain, and open-domain systems.

- Describe the difference between user-initiative, mixed-initiative, and system-initiative systems.

Linguistics of Dialogue

- What are turn-taking cues/hints in a dialogue? Name a few examples.

- Explain the main idea of the speech acts theory.

- What is grounding in dialogue?

- Give some examples of grounding signals in dialogue.

- What is deixis? Give some examples of deictic expressions.

- What is coreference and how is it used in dialogue?

- What do Shannon entropy and conditional entropy measure? No need to give the formula, just the principle.

- What is entrainment/adaptation/alignment in dialogue?

Data & Evaluation

- What are the typical options for collecting dialogue data?

- How does Wizard-of-Oz data collection work?

- What is corpus annotation, what is inter-annotator agreement?

- What is the difference between intrinsic and extrinsic evaluation?

- What is the difference between subjective and objective evaluation?

- What are the main extrinsic evaluation techniques for task-oriented dialogue systems?

- What are some evaluation metrics for non-task-oriented systems (chatbots)?

- What's the main metric for evaluating ASR systems?

- What's the main metric for NLU (both slots and intents)?

- Explain an NLG evaluation metric of your choice.

- Why do you need to check for statistical significance (when evaluating an NLP experiment and comparing systems)?

- Why do you need to evaluate on a separate test set?

Natural Language Understanding

- What are some alternative semantic representations of utterances, in addition to dialogue acts?

- Describe language understanding as classification and language understanding as sequence tagging.

- How do you deal with conflicting slots or intents in classification-based NLU?

- What is delexicalization and why is it helpful in NLU?

- Describe one of the approaches to slot tagging as sequence tagging.

- What is the IOB/BIO format for slot tagging?

- What is the label bias problem?

- How can an NLU system deal with noisy ASR output? Propose an example solution.

Neural NLU & Dialogue State Tracking

- Describe an example of a neural architecture for NLU.

- How can you use pretrained language models in NLU?

- What is the dialogue state and what does it contain?

- What is an ontology in task-oriented dialogue systems?

- Describe the task of a dialogue state tracker.

- What's a partially observable Markov decision process?

- Describe a viable architecture for a belief state tracker.

- What is the difference between dialogue state and belief state?

- What's the difference between a static and a dynamic state tracker?

- How can you use pretrained language models or large language models for state tracking?

Dialogue Policies

- What are the non-statistical approaches to dialogue management/action selection?

- Why is reinforcement learning preferred over supervised learning for training dialogue managers?

- Describe the main idea of reinforcement learning (agent, environment, states, rewards).

- What are deterministic and stochastic policies in dialogue management?

- What's a value function in a reinforcement learning scenario?

- What's the difference between actor and critic methods in reinforcement learning?

- What's the difference between model-based and model-free approaches in RL?

- What are the main optimization approaches in reinforcement learning (what measures can you optimize and how)?

- Why do you typically need a user simulator to train a reinforcement learning dialogue policy?

Neural Policies & Natural Language Generation

- How do you involve neural networks in reinforcement learning (describe a Q network or a policy network)?

- What are the main steps of a traditional NLG pipeline – describe at least 2.

- Describe one approach to NLG of your choice.

- Describe how template-based NLG works.

- What are some problems you need to deal with in template-based NLG?

- Describe a possible neural networks based NLG architecture.

- How can you use pretrained language models or large language models in NLG?

Voice assistants & Question Answering

- What is a smart speaker made of and how does it work?

- Briefly describe a viable approach to question answering.

- What is document retrieval and how is it used in question answering?

- What is dense retrieval (in the context of question answering)?

- How can you use neural models in answer extraction (for question answering)?

- How can you use retrieval-augmented generation in question answering?

- What is a knowledge graph?

Dialogue Tooling

- What is a dialogue flow/tree?

- What are intents and entities/slots?

- How can you improve a chatbot in production?

- What is the containment rate (in the context of using dialogue systems in call centers)?

- What is retrieval-augmented generation?

Automatic Speech Recognition

- What is a speech activity detector?

- Describe the main components of an ASR pipeline system.

- What do input features for an ASR model look like?

- What is the function of the acoustic model in a pipeline ASR system?

- What's the function of a decoder/language model in a pipeline ASR system?

- Describe an (example) architecture of an end-to-end neural ASR system.

Text-to-speech Synthesis

- How do humans produce sounds of speech?

- What's the difference between a vowel and a consonant?

- What is F0 and what are formants?

- What is a spectrogram?

- What are the main distinguishing characteristics of consonants?

- What is a phoneme?

- What are the main distinguishing characteristics of different vowel phonemes (both how they're produced and perceived)?

- What are the main approaches to grapheme-to-phoneme conversion in TTS?

- Describe the main idea of concatenative speech synthesis.

- Describe the main ideas of statistical parametric speech synthesis.

- How can you use neural networks in speech synthesis?

Chatbots

- What are the three main approaches to building chitchat/non-task-oriented open-domain chatbots?

- How does the Turing test work? Does it have any weaknesses?

- What are some techniques rule-based chitchat chatbots use to convince their users that they're human-like?

- Describe how a retrieval-based chitchat chatbot works.

- How can you use neural networks for chatbots (non-task-oriented, open-domain systems)? Does that have any problems?

- Describe a possible architecture of an ensemble non-task-oriented chatbot.

- What do you need to train a large language model?

- What are some issues you may encounter when chatting to LLMs?

Course Grading

To pass this course, you will need to:

- Take an exam (a written test covering important lecture content).

- Do lab homeworks (various dialogue system implementation tasks).

Exam test

- There will be a written exam test at the end of the semester.

- There will be 10 questions; we expect 2-3 sentences per answer, with a maximum of 10 points per question.

- To pass the course, you need to get at least 50% of the total points from the test.

- We plan to publish a list of possible questions beforehand.

In case the pandemic does not get better by the exam period, there will be a remote alternative for the exam (an essay with a discussion).

Homework assignments

- There will be 12 homework assignments, introduced every week, starting in the 2nd week of the semester.

- You will submit the homework assignments into a private GitLab repository (to which we will be given access).

- For each assignment, you will get a maximum of 10 points.

- All assignments will have a fixed deadline.

- If you submit the assignment after the deadline, you will get:

- up to 50% of the maximum points if it is less than 2 weeks after the deadline;

- 0 points if it is more than 2 weeks after the deadline.

- Once we check the submitted assignments, you will see the points you got and the comments from us as comments on your merge requests on Gitlab.

- You need to get at least 50% of the total assignments points to pass the course.

- You can take the exam even if you don't have 50% yet (esp. due to potential delays in grading), but you'll need to get the required points eventually.
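The late-submission rules above interact with the deadline time (23:59:59 CET/CEST). The following is a minimal sketch in Python of the resulting upper bound on points; the function name `point_cap` is hypothetical, and treating a delay of exactly 2 weeks as "more than 2 weeks" is an assumption, since the rules don't say. It also assumes a fixed CET offset, which only fits deadlines before the switch to summer time (`zoneinfo.ZoneInfo("Europe/Prague")` would cover CEST too).

```python
from datetime import datetime, timedelta, timezone

# Fixed CET offset (UTC+1); after the switch to summer time, deadlines are in CEST (UTC+2).
CET = timezone(timedelta(hours=1), "CET")


def point_cap(deadline: datetime, submitted: datetime, max_points: float = 10.0) -> float:
    """Upper bound on the points a submission can get under the late policy (a sketch).

    Both arguments must be timezone-aware datetimes.
    """
    # Compare in UTC so that differing time zones (or a DST change) are handled correctly.
    late_by = submitted.astimezone(timezone.utc) - deadline.astimezone(timezone.utc)
    if late_by <= timedelta(0):
        return max_points            # on time: full points available
    if late_by < timedelta(weeks=2):
        return 0.5 * max_points      # less than 2 weeks late: up to 50%
    return 0.0                       # 2 weeks or more late: no points


if __name__ == "__main__":
    # Assuming the hw1 deadline is 5 March 2026, 23:59:59 CET; submitted about 4.5 days late:
    hw1_deadline = datetime(2026, 3, 5, 23, 59, 59, tzinfo=CET)
    print(point_cap(hw1_deadline, datetime(2026, 3, 10, 12, 0, tzinfo=CET)))  # 5.0
```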

Grading

The final grade for the course will be a combination of your exam score and your homework assignment score, weighted 3:1 (i.e. the exam accounts for 75% of the grade, the assignments for 25%).

Grading:

- Grade 1: >=87% of the weighted combination

- Grade 2: >=74% of the weighted combination

- Grade 3: >=60% of the weighted combination

- An overall score of less than 60% means you did not pass.

In any case, you need at least 50% of points from the test and at least 50% of points from the homeworks to pass. If you get less than 50% from either, even if you get more than 60% overall, you will not pass.
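The weighting and thresholds above boil down to a small calculation. Here is a sketch in Python; the function name `final_grade` is hypothetical. It takes the exam and homework results as percentages of their maximum (100 exam points from 10 questions, 120 homework points from 12 assignments) and applies the per-component 50% minimum before the 3:1 weighting.

```python
def final_grade(exam_pct: float, hw_pct: float) -> str:
    """Final grade from exam and homework percentages (0-100), as described above."""
    # Both parts must reach 50%, no matter how good the overall score is.
    if exam_pct < 50 or hw_pct < 50:
        return "fail"
    # Exam and homework weighted 3:1 (75% exam, 25% homework).
    overall = (3 * exam_pct + hw_pct) / 4
    if overall >= 87:
        return "1"
    if overall >= 74:
        return "2"
    if overall >= 60:
        return "3"
    return "fail"


if __name__ == "__main__":
    print(final_grade(80, 100))  # prints 2 (weighted score 85)
    print(final_grade(48, 100))  # prints fail (weighted score 61, but exam below 50%)
```

The second example shows why the per-component minimum matters: a strong homework score can lift the weighted score above 60% while the exam alone still fails the course.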

No cheating

- Cheating is strictly prohibited and any student found cheating will be punished. The punishment can involve failing the whole course, or, in grave cases, being expelled from the faculty.

- Discussing homework assignments with your classmates is OK. Sharing code is not OK (unless explicitly allowed); by default, you must complete the assignments yourself.

- All students involved in cheating will be punished. E.g. if you share your assignment with a friend, both you and your friend will be punished.

Recommended Reading

You should pass the course just by following the lectures, but here are some hints on further reading. There's nothing ideal on the topic as this is a very active research area, but some of these should give you a broader overview.

Basic (good and up-to-date, but very brief, available online):

- Jurafsky & Martin: Speech and Language Processing. 3rd ed. draft (chapters 14-16 in the Jan 2025 version; chaps. 11, 14-16, 25 and app. K in the current version).

More detailed (very good but slightly outdated, available as e-book from our library):

- McTear: Conversational AI: Dialogue Systems, Conversational Agents, and Chatbots. Morgan & Claypool 2021.

Further reading (mostly outdated but still informative):

- Yi et al.: A Survey of Recent Advances in LLM-Based Multi-turn Dialogue Systems. ACM Computing Surveys 2025.

  - up-to-date but focuses only on latest models, pretty advanced

- Janarthanam: Hands-On Chatbots and Conversational UI Development. Packt 2017.

  - practical guide on developing dialogue systems for voice bot platforms, virtually no theory

- Gao et al.: Neural Approaches to Conversational AI. arXiv:1809.08267

  - an advanced, good overview of basic neural approaches in dialogue systems

- McTear et al.: The Conversational Interface: Talking to Smart Devices. Springer 2016.

  - practical, more advanced and more theory than Janarthanam

- Jokinen & McTear: Spoken dialogue systems. Morgan & Claypool 2010.

  - good but outdated, some systems very specific to particular research projects

- Rieser & Lemon: Reinforcement learning for adaptive dialogue systems. Springer 2011.

  - advanced, outdated, project-specific

- Lemon & Pietquin: Data-Driven Methods for Adaptive Spoken Dialogue Systems. Springer 2012.

  - ditto

- Skantze: Error Handling in Spoken Dialogue Systems. PhD Thesis 2007, Chap. 2.

  - good introduction into dialogue systems in general, albeit dated

- McTear: Spoken Dialogue Technology. Springer 2004.

  - good but dated

- Psutka et al.: Mluvíme s počítačem česky. Academia 2006.

  - virtually the only book in Czech, good for ASR but dated, not a lot about other parts of dialogue systems