Institute of Formal and Applied Linguistics

Charles University, Czech Republic

Faculty of Mathematics and Physics

Please refer instead to NPFL125 – Introduction to Language Technologies.

NPFL092 – Technology for NLP (Natural Language Processing)

About

The aim of the course is to get students familiar with basic software tools used in natural language processing.

SIS code:

NPFL092

Semester: winter

E-credits: 5

Examination: 1/2 MC (KZ)

Teachers

Whenever you have a question or need some help (and Googling does not work), contact us as soon as possible! Please always e-mail both of us.

Classes

- the classes combine lectures and practicals

- in 2020/2021, the classes are held on Wednesday in SU1, 9:50-12:10

- in the times of coronavirus, the classes are held online through Zoom

- the Zoom link is: https://cesnet.zoom.us/j/92724816555

- the password is: 1921

Covid-19-related update:


Requirements

To pass the course, you will need to submit homework assignments and do a written test. See Grading for more details.

Classes

1. Introduction; Survival in Linux, Bash UNIX (Czech) Bash (English) hw_ssh Questions

2. Encoding Encoding Questions

3. Editors; Intro to Bash Editors Python in Atom Unix for Poets hw_makefile Questions

4. Git A Visual Introduction to Git git-scm: About Version Control tryGit Branching hw_git Questions

5. Python, basic manipulation with strings Python: Introduction for Absolute Beginners hw_python Questions

6. Python: strings cont., I/O basics, regular expressions Strings Python tutorial Regexes hw_string Questions

7. Python: modules, packages, classes Unicode Text files Reading hw_tagger Questions

8. A gentle introduction to XML XML hw_xml Questions

9. XML & JSON XML+ XML&JSON hw_xml2json

10. Spacy, NLTK and other NLP frameworks hw_frameworks Questions

11. REST API hw_rest Building REST APIs Questions

Legend:

- Slides

- Video

- Homework assignment

- Additional reading

- Test questions

1. Introduction; Survival in Linux, Bash

Sep 30, 2020

-

Introduction

- Motivation

- Course requirements: MFF linux lab account

- Course plan, overview of required work, assignment requirements

-

keyboard shortcuts in KDE/GNOME, selected e.g. from here

-

motivation for scripting, command line features (completion, history...), keyboard shortcuts

-

bash in a nutshell

- ls (-l,-a,-1,-R), cd, pwd

- cp (-R), mv, rm (-r, -f), mkdir (-p), rmdir, ln (-s)

- file, cat, less, head, tail

- chmod, wget, ssh (-XY), .bashrc, man...

-

exercise: playing with text files udhr.zip, also available for download at bit.ly/2hQQeTH

-

remote access to a unix machine: SSH (Secure Shell)

-

you can access a lab computer e.g. by opening a unix terminal and typing: `ssh yourlogin@u-pl17.ms.mff.cuni.cz` (replace `yourlogin` with your login into the lab and type your lab password when asked for it; instead of `17` you can use any number between 1 and something like 30; it is the number of the computer in the central lab that you are connecting to)

-

your home is shared across all the lab computers in all the MS labs (SU1, SU2, Rotunda), i.e. you will see your files everywhere

-

you can ssh even from non-unix machines

- on Windows, you can use e.g. the Putty software

- on Windows, you can even use the Windows command line directly: open the Run dialog (Windows+R), type `cmd`, press Enter, and a command line opens up in which you can use ssh directly

- on any computer with the Chrome browser, you can use the Secure Shell extension (and there are similar extensions for other browsers as well), which allows you to open a remote terminal in a browser tab; this is probably the most comfortable way

- on an Android device, you can use e.g. JuiceSSH

-

on Windows, you can try using the Windows Terminal

-

2. Encoding

Oct 07, 2020

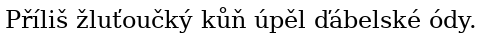

Character encoding

- ascii, 8-bits, unicode, conversions, locales (LC_*)

- Questions: answer the following questions:

- What is ASCII?

- What 8-bit encoding do you know for Czech or for your native language? How do they differ from ASCII?

- What is Unicode?

- What Unicode encodings do you know?

- What is the relation between UTF-8 and ASCII?

- Take a sample of Czech text (containing some diacritics), store it into a plain text file and convert it (by iconv) to at least two different 8-bit encodings, and to utf-8 and utf-16. Explain the differences in file sizes.

- How can you detect file encoding?

- Store any Czech web page into a file, change the file encoding and the encoding specified in the file header, and find out how it is displayed in your browser if the two encodings differ.

- How do you specify file encoding when storing a plain text or a source code in your favourite text editor?
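The file-size question from the `iconv` exercise above can also be explored directly in Python. This is only a minimal sketch: the sample sentence is our own choice (a well-known Czech pangram), not part of the course materials, and the encoding names are the ones Python's codecs use.

```python
# Sample Czech text with diacritics (an illustrative choice, not from the course materials)
text = "Příliš žluťoučký kůň úpěl ďábelské ódy"

# Encode the same text in two 8-bit encodings and in two Unicode encodings
sizes = {enc: len(text.encode(enc))
         for enc in ("iso-8859-2", "cp1250", "utf-8", "utf-16")}

print(f"characters: {len(text)}")
for enc, size in sizes.items():
    print(f"{enc:>10}: {size} bytes")

# The two 8-bit encodings have the same size but differ in some byte values
same_bytes = text.encode("iso-8859-2") == text.encode("cp1250")
print("iso-8859-2 and cp1250 bytes identical:", same_bytes)
```

The 8-bit encodings use one byte per character, UTF-8 uses one byte for ASCII characters and two for the accented Czech letters, and Python's `utf-16` codec uses two bytes per character here plus a two-byte byte order mark.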

3. Editors; Intro to Bash

Oct 14, 2020

Source-code editors (individual preparation before the lecture)

- read the slides Editors

- IMPORTANT: Make sure you can log in to the UNIX lab (e.g. `u-pl13.ms.mff.cuni.cz`) and use an editor to create, edit and store a source-code file.

- Optional: watch the following video if you decided to master Atom: Python in Atom

- Optional: If time remains, do the following exercise using your preferred source-code editor:

- download a badly formatted code: bad.py

- run the code (`python3 bad.py`) to check it works (it should)

- get some basic information about the code with `file`

- open the code in your editor and improve its formatting (but do not change its function), including at least the following improvements:

- convert the code into UTF-8 (and remove the first line specifying the encoding; UTF-8 is the default in Python 3)

- switch it from windows newlines (CRLF) to unix newlines (LF)

- make indentation and spacing consistent

- make quotes consistent

- add a she-bang

- make it executable

- rerun it to check you did not break it

- ideally, you should be able to do all of the above in your editor, without going to Bash

- if you do not know Python yet, then this will also serve as your first introduction to some basic Python constructions

- indentation is important in Python, as it marks the start and end of a block; so in the script, the first two `print`s are part of the first `for` block, while the third `print` is not; any number of spaces can be used for indentation, but it is common to use 4 spaces

- there is no difference between single quotes and double quotes

- save the improved script as `good.py`

- take a screenshot of the code open in your editor, and save it as `good.png` or `good.jpg` (so that we can see the syntax highlighting)

- put the good code and the screenshot into `/afs/ms/doc/vyuka/INCOMING/TechnoNLP/your-surname/hw_editors/` (of course, replace `your-surname` with your surname)

- this cannot be done directly through SSH:

- you can use `scp` (it is similar to `cp` but can copy to and from remote machines; the colon `:` between the machine identifier and the path is what makes it clear that you want to use a remote machine): `scp good.py yourlogin@u-pl13.ms.mff.cuni.cz:/afs/ms/...`

- on Windows, use WinSCP

- or you can simply do that when you are physically in the lab

- or you can wait till next lesson and then use Git for that

Bash and Makefiles

- Bash scripting

-

text processing commands: sort, uniq, cat, cut, [e]grep, sed, head, tail, rev, diff, patch, set, pipelines, man...

-

bash history from today's practicals

-

regular expressions

-

if, while, for

-

xargs: Compare

    sed 's/:/\n/g' <<< "$PATH" | \
      grep $USER | \
      while read path ; do
        ls $path
      done

with

    sed 's/:/\n/g' <<< "$PATH" | \
      grep $USER | \
      xargs ls

-

Shell script, patch to show changes we made, just run `patch -p0 < script.sh`

-

-

Makefiles

-

warm-up exercises:

- construct a bash pipeline that extracts words from an English text read from the input, and sorts them in the "rhyming" order (lexicographical ordering, but from the last letter to the first letter; "retrográdní uspořádání" in Czech) (hint: use the command `rev` for reversing individual lines)

- construct a bash pipeline that reads an English text from the input and finds 3-letter "suffixes" that are most frequent in the words that are contained in the text, irrespective of the words' frequencies (suffixes not in the linguistic sense, simply just the last 3 letters from a word that contains at least 5 letters) (hint: you can use e.g. `sed 's/./&\t/g' | rev | cut -f2,3,4 | rev` for extracting the last three letters)

-

system variables

-

editing `.bashrc` (aliases, paths...)

-

looping, branching, e.g.

    #!/bin/bash
    for file in *; do
        if [ -x $file ]
        then
            echo Executable file: $file
            echo Shebang line: `head -n 1 $file`
            echo
        fi
    done
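The "rhyming" order from the first warm-up exercise above can be illustrated in Python as well, which may help to check what a Bash pipeline should produce. This is just a sketch: `rhyming_order` is a hypothetical helper name, and treating words as lowercase runs of a-z and dropping duplicates are our simplifying assumptions.

```python
import re


def rhyming_order(text):
    """Return the distinct words of `text`, sorted from the last letter to the first."""
    # Assumption: a word is a run of ASCII letters; everything is lowercased
    words = set(re.findall(r"[a-z]+", text.lower()))
    # Sorting by the reversed word corresponds to `rev | sort | rev` in Bash
    return sorted(words, key=lambda word: word[::-1])


print(rhyming_order("The cat sat on the mat near a bat"))
# → ['a', 'the', 'on', 'near', 'bat', 'cat', 'mat', 'sat']
```

Words ending in the same letters end up next to each other, which is why this ordering is useful for looking at suffixes.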

Coding examples shown during class

- some Bash and Makefile stuff from 2019: bash history

4. Git

Oct 21, 2020 A Visual Introduction to Git git-scm: About Version Control tryGit

Before the class

- Faculty GitLab

- Check that you can access and login to the faculty GitLab server at https://gitlab.mff.cuni.cz/

- The username is usually your surname or something derived from it

- it should be the same as for SSH

- find Login in your personal information in SIS/CAS

- The password should be the same as for SIS/CAS and for SSH

- If you can log in, that's fine, that's all for now

- If you cannot log in, try it a few times and then email us; or email directly the faculty administrator of GitLab, Mr Semerád

- You will use the faculty GitLab to submit homework assignments, so this is quite important

- Reading

- In any order, read the following two texts:

- A Visual Introduction to Git (~12 pages with a lot of pictures, rather informal language)

- git-scm: About Version Control (~4 pages with diagrams, more formal language)


- If you have some more time before the class, look at the "Questions to think about" and think about them :-)

Questions to think about

- What approaches for storing and sharing programming code can you think of?

What approach(es) do you personally use (or have used in the past)?

- By storing, we mean saving your script files (and similar stuff) somewhere.

- By sharing, we mean giving access to your code to someone else (or even to yourself on a different computer). This may include read-only sharing, but also read-write sharing, where the other people will modify the shared files and give them back to you.

- What are some advantages and disadvantages of the following approaches of storing and sharing your code?

- No approach is perfect, any approach has both advantages and disadvantages!

- Storing approaches

- Storing everything e.g. in your Documents folder on your computer. (Was that/would that be your first approach?)

- Making a copy of your code once in a while and storing it under a different name or in a different folder on your computer.

- Copying your code once in a while to another computer or to an external storage medium (external hard drive, flash disk, CD, NAS, etc.)

- Storing the files at a cloud storage (DropBox, OneDrive, Google Drive, etc.).

- Storing the files in a Git repository.

- Printing the files on paper and storing them in a cupboard.

- Carving it into the wall of a stone cave.

- Sharing approaches

- Sharing via e-mail or another messaging service. (Was that/would that be your first approach?)

- Storing the files in a shared folder (e.g. `/afs/ms/doc/vyuka/INCOMING/TechnoNLP/`).

- Storing the files at a cloud storage (DropBox, OneDrive, Google Drive, etc.) and sharing via public share links or via shared access for specific users.

- Storing the files in a Git repository, either giving access to the repository to specific users, or making the repository public (so anyone has access).

- Copying your printed code and giving it to another person personally or sending per snail mail (aka the traditional post service using stamps and envelopes and such).

- Telling people where the cave with your carved code is located.

- What approach (and why) would you use for…

- Trying out a few Bash commands to see how they work and to learn to use them.

- Assignments for a programming class (to eventually share with the teacher).

- A collaborative assignment (to work on together with some classmates).

- Your master's thesis.

- An open-source project you are starting (e.g. a web browser add-on that transforms the text on webpages into poems.)

- Showing your code to your grandmother.

- A small old project that you worked on years ago, which is useless now and which you will never get back to.

- An algorithm you invented that converts NP problems into P problems.

First setup on the faculty GitLab

Note: This "first setup" section and the concrete URLs are specific to the faculty GitLab and to our course. However, you would use a similar approach for your own project hosted either at the faculty GitLab or at another similar service which hosts Git repositories, such as GitHub, public GitLab, or BitBucket.

- Log in at https://gitlab.mff.cuni.cz/

- Create a repository for this course

- Click New project

- Fill in a Project name, e.g. `NPFL092`

- This will generate a Project slug, which is the identifier of your repository; e.g. `npfl092`

- Leave Visibility Level at `Private`

- Tick Initialize repository with a README

- Click Create project

- Note the URL of your repository

- Click Clone

- You will see two URLs, an SSH one and an HTTPS one

- We will use the HTTPS one in this class

- This works out-of-the-box

- But requires you to type your password often

- It will look something like `https://gitlab.mff.cuni.cz/yourlogin/yourprojectslug.git`

- e.g. for Rudolf it is `https://gitlab.mff.cuni.cz/rosar7am/npfl092.git`

- If you want, after the class, feel free to set up SSH keys and use the SSH one; this allows you to do less password typing

- Click your user icon in the top right corner

- Choose Settings

- Choose SSH Keys

- Follow the instructions on the page

- Give access to the repository to Rudolf and Zdeněk

- In the left menu, click Members

- In GitLab member or Email address, search for `Rudolf Rosa`

- In Choose a role permission, choose `Reporter` (this is for read-only access)

- Click Invite

- Repeat the previous three steps for `Zdeněk Žabokrtský`

- Put the URL of your repository into SIS

- Go to the Study group roster module in SIS ("Grupíček" in the Czech version)

- Choose the NPFL092 course

- Enter the repository URL into the Git repo field

- Please enter the SSH variant of the URL

Note: the rest of the instructions is generally valid for working with any Git anywhere.

Filling your new repository — VERSION A, recommended (git clone)

- clone your repository

  - `cd`
  - `git clone https://gitlab.mff.cuni.cz/yourlogin/npfl092.git` (or whatever your repo URL is)
    - you will need to enter your username and password
  - `cd npfl092` (or whatever your repo ID is)
  - Note: you can also use SSH instead of HTTPS, which saves you some password typing, but requires you to set up SSH keys.

- add a `goodbye.sh` file

  - `echo 'echo Goodbye cruel world' > goodbye.sh`
  - `git status`
  - `git add goodbye.sh`
  - `git status`

- commit the changes locally

  - `git commit -m'Goodbye, all you people'`
  - `git status`

- push changes to the remote repository (i.e. to GitLab)

  - `git push`
    - you may need to enter your password
  - `git status`

Filling your new repository — VERSION B, an alternative to version A (git add remote)

- create a local repository

  - `cd`
  - `mkdir npfl092`
  - `cd npfl092`
  - `git init`

- add a `goodbye.sh` file

  - `echo 'echo Goodbye cruel world' > goodbye.sh`
  - `git status`
  - `git add goodbye.sh`
  - `git status`

- commit the changes locally

  - `git commit -m'Goodbye, all you people'`
  - `git status`

- push your repository to the remote repository (i.e. to GitLab)

  - `git remote add origin https://gitlab.mff.cuni.cz/yourlogin/npfl092.git` (or whatever your repo URL is)
  - `git push -u origin master`
    - you will need to enter your username and password
    - you can also use SSH instead of HTTPS, which saves you some password typing, but requires you to set up SSH keys
  - `git status`

Checking the repository state through the GitLab website

- Refresh the GitLab website of your project in your browser

- You should see the current state of your repository, all the files, the history, etc.

Synchronizing changes

- make a new clone of the repository at a different place

  - `cd; mkdir new_clone_of_repo; cd new_clone_of_repo`
  - `git clone https://gitlab.mff.cuni.cz/yourlogin/npfl092.git` (or whatever your repo URL is)
    - you may need to enter your username and password
  - `cd npfl092`

- make some changes here, stage them, commit them locally, and push them to the remote repo

  - `echo 'This repo will contain my homework.' >> README`
  - `git add README`
  - `git commit -m'adding more info'`
  - `git push`
    - you may need to enter your password

- go back to your first local repo and get the new changes from the remote repo

  - `cd ~/npfl092`
  - `cat README`
  - `git pull`
    - you may need to enter your password
  - `cat README`

Regular working with your repo

- go to a directory containing a clone of your repository (or make a new one with `git clone` if on a different computer)

- synchronize your local repo with the remote repo with `git pull`

- do any changes to the files, create new files, etc.

- view the changes with `git status` (and with `git diff` to see changes inside files)

- stage new/changed files that you want to become part of the repo with `git add` (untracked files are ignored by git)

- create a new snapshot in your local repo with `git commit`

- synchronize the remote repo with your local repo with `git push`

Going back to previous versions

- to throw away current uncommitted changes:

  - `git checkout filename` to revert to the last committed version of file filename
    - beware, there is no undo, i.e. with this command you immediately lose any uncommitted changes!

- to only show info about commits:

  - `git log` to figure out which commit you are interested in
  - `git show commitid` to show the details about a commit with id commitid

- to temporarily switch to a previous state of the repository:

  - commit all your changes
  - `git checkout commitid` to go to the state after the commit commitid
  - `git checkout master` to return to the current state

Branching

- `git branch branchname` to create a new branch called branchname

- `git checkout branchname` to switch to the branch branchname

- `git checkout master` to switch back to master

- `git merge branchname` to merge branch branchname into the current branch

  - typically you merge into master
  - i.e. you first `git checkout master`
  - and then `git merge branchname`

- `git branch -d branchname` to remove the branch called branchname

5. Python, basic manipulation with strings

Nov 4, 2020 Python: Introduction for Absolute Beginners

Before the class

- Python baby-steps: write your first Python code, it's easy!

-

Go to Google Colab, which is a web service where you can directly write and run Python code

-

Sign in using your Google account

-

Click "New notebook" or "File > New notebook" to create a new interactive Python session

-

You will see an empty text field; this is a code field

-

Hello world

- Type `print("Hello world!")` into the code field

- Press `Shift+Enter`

- Wait for a while

- And you should see the output, i.e. the text `Hello world!`, printed below the code field

- Cool, you have just run a very simple Python program!

- You should also get a new empty code field

- (You can also run the code field by clicking the "play" button next to it, and create a new code field by clicking "+ Code")

-

Basic mathematics

- Try `print(20+3)`

- What about `print(20*3)`

- Try `print(20/3)`, `print(20//3)`, `print(20-3)`

-

Fun with strings (a string is a piece of text)

- You can also add strings: `print("I like " + "apples")`

- And even multiply strings: `print(10 * "apple")`

-

A multiline code

    a = 5
    b = 10
    c = a + b
    print(c)

- A variable is a named place in memory where you can store whatever you like

- Here, we created 3 variables, called a, b and c. Think of them as named boxes for putting things.

- We've put the number 5 into a, the number 10 into b.

- We then added what was stored in a and b (so we added up 5 and 10, which gave us 15) and we've stored the result (so the number 15) into c.

- And we printed out the contents of the variable c (so, 15).

-

Now try to write something yourself

- Store some numbers into some variables, multiply them, and print out the result

- The variable names do not need to be single-letter, so a variable can be called `a` or `mynumber` or `big_fat_elephant`

- Put a few strings into variables (e.g. `"Hello"` and `"world"`), add them together and print out the result

- Strings need to be put into quotes, so `"Hello"` or `'Hello'` is a string

- Variable names are not put into quotes, so `Hello` could be a variable name

- So you can e.g. write `hello = "Hello"` to store the string Hello into a variable called hello

- Feel free to try out more if you like

- The Colab does not run on your computer, so you cannot break anything on your computer. Feel free to experiment, you can always retry on error, kill the code if it fails to stop, or even just close it and load it again :-)

-

- You may also want to read something about Python (but this is voluntary)

- A good source seems to be Python: Introduction for Absolute Beginners

- There is a nice Handout file going nicely and slowly over everything important

- It has 457 pages, so you may just go over e.g. the first 20 pages or so

- But if you like the material, feel free to keep going through it at your own pace over the coming weeks, it covers much more than we can cover in our few Python lessons and everything seems to be quite nicely explained there

- Also feel free to look for help in the file (like using `Ctrl+F` to search for stuff)

Warm-up exercise (in pairs); 10-15 minutes

- Work in pairs, with your microphones turned on

- Go to Google Colab

- Retry some basic stuff from the "before the class" session to check everything works, e.g. computing how much is 63714205+59742584

- Share your screens to help each other

- Create two variables containing your names as strings (e.g. `me = "Rudolf"` and `you` for the other name) and print out a greeting (e.g. `print("Hello " + me + ". Hi " + you)`)

- Create another two variables containing your favourite animals and write out a text saying who has which favourite animal

- Try to print a textual chocolate bar by printing the word "chocolate " e.g. 10 times on one line, and copying the code e.g. 3 times so you have a textual bar of chocolate with 10x3 squares. (Remember that you can multiply strings by numbers. Python also has ways to run a piece of code repeatedly, and we will get to this, but now you can just copy-paste the code 3 times...)

- Show each other your code (e.g. via screen sharing) and discuss any problems you had

- If you still have some time, you can try some other simple things, e.g.:

- Calculate your age as 2020 minus the year you were born (OK, if you were born towards the end of the year, this is not your age yet). Calculate for how many days you have already lived (at least approximately, e.g. as your age times 365), or also for how many hours, minutes, seconds? Write out the word "day" once for each day you have lived already.

- Calculate your BMI to see if your weight is OK (the website contains a lot of information, but you really just need the formula, plus the table at the end to interpret the result)

- If there is still time, try to do something more, just play with Python, in Colab you cannot really break much :-)

Python

-

To solve practical tasks, Google is your friend!

-

By default, we will use Python version 3: `python3`

- A day may come when you will need to use Python 2, so please note that there are some differences between these two. (Also note that you may encounter code snippets in either Python 2 or Python 3…)

-

To create a Python script (needed e.g. to submit homework assignments):

-

Create a PY text file in your favourite editor (e.g. `myscript.py`)

-

Put a correct Python 3 she-bang on the first line (so that Bash knows to run the file as a Python script), and your code on subsequent lines, so the file may look e.g. like this:

    #!/usr/bin/env python3
    print("Hello world")

-

Save the script

-

Make it executable: `chmod u+x myscript.py`

-

Run it (in the terminal): `./myscript.py` or `python3 myscript.py`

-

-

To work interactively with Python, you can use Google Colab

- See guidelines above

- Enter code into the code fields, run using the `>` button or `Ctrl+Enter` or `Shift+Enter` (recommended: runs the code and creates a new code field)

- Your notebooks get saved under your Google account

- You can also save them as Python scripts ("File > Download .py")

- You can use such an approach to do your homework assignments

- But make sure to try running

- Important difference between interactive Python and a Python script:

- Interactive Python prints the result of the last command

- e.g. `5+5` prints out `10` in interactive Python

- A Python script executes the command but only prints stuff if you call the `print()` function

- e.g. `5+5` does "nothing" in a Python script

- but `print(5+5)` prints `10`

- You can also go through "Welcome to Colaboratory" (is typically offered at the start page) to learn more about Colab

-

For offline interactive working with Python in the terminal, you can simply run `python3` and start typing commands

-

A slightly more friendly version is IPython: `ipython3`

-

to save the commands 5-10 from your IPython session to a file named `mysession.py`, run: `%save mysession 5-10`

-

to exit IPython, run: `exit`

-

-

To install missing modules (e.g. ipython might be missing), use pip (in Bash): `pip3 install --user ipython`

-

For non-interactive work, use your favourite text editor.

-

Python types

- int: `a = 1`

- float: `a = 1.0`

- bool: `a = True`

- str: `a = '1 2 3'` or `a = "1 2 3"`

- list: `a = [1, 2, 3]`

- dict: `a = {"a": 1, "b": 2, "c": 3}`

- tuple: `a = (1, 2, 3)` (something like a fixed-length immutable list)

First Python exercises (simple language modelling)

-

Create a string containing the first chapter of Genesis. Print out the first 40 characters.

    str[from:to]  # from is inclusive, to is exclusive

Print out the 4th to 6th character 1-based (= 3rd to 5th 0-based). Check the length of the result using `len()`.

-

Split the string into tokens (use `str.split()`; see `?str.split` for help). Print out the first 10 tokens. (List slice behaves similarly to substring.) Print out the last 10 tokens. Print out the 11th to 18th token. Check the length of the result using `len()`. Just printing a list slice is fine; also see `?str.join`

-

Compute the unigram counts into a dictionary.

    # Built-in dict (need to explicitly initialize keys):
    unigrams = {}

    # The Python way is to use the foreach-style loops;
    # and horizontal formatting matters!
    for token in tokens:
        pass  # do something

    # defaultdict, supports autoinitialization:
    from collections import defaultdict
    # int = values for non-set keys initialized to 0:
    unigrams = defaultdict(int)

    # Even easier:
    from collections import Counter

-

Print out most frequent unigram.

    max(something)
    max(something, key=function_to_get_key)
    # getting value stored under a key in a dict:
    unigrams[key]
    unigrams.get(key)

Or use `Counter.most_common()`
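The exercises above can be tried out end to end roughly as follows. This is only a sketch: the `text` string is a short stand-in we made up in the style of the chapter (use the real text in the exercise), and the `Counter` variant is just one of the options hinted at above.

```python
from collections import Counter

# A short stand-in for the chapter text (an assumption; replace with the real text)
text = "In the beginning God created the heaven and the earth . And the earth was without form"

print(text[:40])        # first 40 characters
print(len(text[3:6]))   # 4th to 6th character (1-based), so 3 characters

tokens = text.split()   # split on whitespace
print(tokens[:10])      # first 10 tokens
print(tokens[-10:])     # last 10 tokens
print(tokens[10:18])    # 11th to 18th token

# Counter builds the unigram counts in one step
unigrams = Counter(tokens)
print(unigrams.most_common(1))   # → [('the', 4)]

# The same answer using max() with a key function
most_frequent = max(unigrams, key=unigrams.get)
print(most_frequent)             # → the
```

With a plain `dict` or a `defaultdict`, the counts would be filled in by a `for token in tokens:` loop instead.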

Script from class

Everything I showed interactively in the class can be found in python_intro.ipynb

Commands from the interactive session from 2019: first_python_exercises.py

6. Python: strings cont., I/O basics, regular expressions

Nov 11, 2020

The string data type in Python

-

Individual preparation before the class (45 minutes at most):

-

Python strings resemble lists in some aspects, for instance we can access individual characters using their positional indices and the bracket notation...

    greeting = "hello"
    print(greeting[0])
    greeting[0] = "H"

-

... wait, the last line causes an error! Why is that?

-

If the distinction mutable vs. immutable is new to you, please read e.g. Mutability & Immutability in Python by Chetan Ambi. Please be ready to explain the distinction at the beginning of the class.

-

If you know the distinction already, explain why a repeated string concatenation like the following one is a bad idea in Python

    s = ''
    for i in range(n):
        s = str(i) + s

-

Ideally explain it in terms of the big O notation.

-

How would you handle similar situations in which repeated string accumulation is needed?
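One possible way to think about the questions above, as a hedged sketch rather than the expected answer: since strings are immutable, each `str(i) + s` generally builds a brand new string and copies everything accumulated so far, so the total work tends to grow quadratically with `n`. A common alternative is to collect the pieces in a list and join them once. The function names `build_slow` and `build_fast` are hypothetical.

```python
def build_slow(n):
    """Accumulate by repeated concatenation (copies the growing string each time)."""
    s = ''
    for i in range(n):
        s = str(i) + s
    return s


def build_fast(n):
    """Collect the pieces in a list and join them once at the end."""
    parts = [str(i) for i in range(n)]
    parts.reverse()          # the loop above prepends, so reverse the order
    return ''.join(parts)


print(build_slow(5))   # → 43210
print(build_fast(5))   # → 43210
```

Both functions return the same string; only the amount of copying differs.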

-

-

`str.*`: useful methods you can invoke on a string

- case changing (`lower`, `upper`, `capitalize`, `title`, `swapcase`)

- `is*` tests (`isupper`, `isalnum`...)

- matching substrings (`find`, `startswith`, `endswith`, `count`, `replace`)

- `split`, `splitlines`, `join`

- other useful methods (not necessarily for strings): `dir`, `sorted`, `set`

- my ipython3 session from the lab (unfiltered and taken from some previous year)

-

list comprehension

-

a very pythonic way of creating lists using functional programming concepts:

    words = [word.capitalize() for word in text if len(word) > 3]

-

equivalent to:

    words = []
    for word in text:
        if len(word) > 3:
            words.append(word.capitalize())

-

-

reading in data

-

opening a file using its name

- open file for reading: `fh = open('file.txt')`

- read whole file: `text = fh.read()`

- read into a list of lines: `lines = fh.readlines()`

- process line by line in a for loop:

      for line in fh:
          print(line.rstrip())

-

read from standard input (`cat file.txt | ./process.py` or `./process.py < file.txt`)

    import sys
    for line in sys.stdin:
        print(line, end='')
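The file-reading calls above can be combined into a small self-contained example. This is a sketch under a few assumptions: the file content is made up for the demo, the file is written to a temporary directory so that nothing in your home gets overwritten, and the `with` statement is used so that the file is closed automatically.

```python
import os
import tempfile

# Create a small sample file (hypothetical content, only for this demo)
path = os.path.join(tempfile.mkdtemp(), "file.txt")
with open(path, "w", encoding="utf-8") as fh:
    fh.write("první řádek\ndruhý řádek\n")

# Read the whole file at once
with open(path, encoding="utf-8") as fh:
    text = fh.read()

# Process the file line by line, stripping the trailing newline
with open(path, encoding="utf-8") as fh:
    lines = [line.rstrip("\n") for line in fh]

print(len(text))
print(lines)
```

Passing `encoding="utf-8"` explicitly avoids depending on the locale settings of the machine.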

-

-

Python has built-in regex support in the `re` module, but the `regex` module seems to be more powerful while using the same API. To be able to use it, you need to:

- install it (in Bash): `pip3 install --user regex`

- import it (in Python): `import regex as re`

- `search`, `findall`, `sub`

- raw strings `r'...'`

- character classes `[[:alnum:]]`, `\w`, ...

- flags `flags=re.I` or `r'(?i)...'`

- subexpressions `r'(.) (...)'` + backreferences `r'\1 \2'`

- revision of regexes `^[abc]*|^[.+-]?[a-f]+[^012[:alpha:]]{3,5}(up|down)c{,5}$`

- good text to play with: the first chapter of genesis again
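A few of the regex features listed above can be tried out as follows. This sketch uses the built-in `re` module so that it runs without installing anything; it therefore avoids POSIX classes such as `[[:alnum:]]`, which the built-in module does not support. The sample sentence and the variable names are our own choices.

```python
import re

text = "In the beginning God created the heaven and the earth."

# search: returns the first match (or None)
first = re.search(r'\bthe\b', text)
print(first.start())    # → 3

# findall with a flag: case-insensitive matching
all_the = re.findall(r'\bthe\b', text, flags=re.I)
print(all_the)          # → ['the', 'the', 'the']

# the same kind of flag written inline: words starting with a vowel
vowel_words = re.findall(r'(?i)\b[aeiou]\w*', text)
print(vowel_words)      # → ['In', 'and', 'earth']

# sub with subexpressions and backreferences: swap the two noun phrases
swapped = re.sub(r'(the heaven) and (the earth)', r'\2 and \1', text)
print(swapped)          # → In the beginning God created the earth and the heaven.
```

Raw strings (`r'...'`) keep backslashes such as `\b` and `\1` from being interpreted by Python before the regex engine sees them.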

Coding examples shown during class:

- unfiltered ipython3 session on strings, I/O and RE from the zoom online class 2020: stringsession.py

- regex ipython3 session from the lab (unfiltered, from a lab taught in year 2016) Regexes hw_string Questions

7. Python: modules, packages, classes

Nov 18, 2020

-

Before the class: think up how we would deal with words

- In the class, we will be creating an object to represent a word with some annotations and some methods

- What annotations would you store with a word? How would you represent

them?

- Probably we want to store the word form, the lemma, the part-of-speech...? Maybe something more?

- Also think about the type/token distinction: a type is a word independent of a sentence, a token is a word in context. So in these two sentences, there are two "train" tokens, but it is just one type because the string is the same: "I like travelling on a train. I train students in programming."

- What methods could we have for a word? How would you implement them?

- How would you implement a (simplified) method e.g. to put a noun into plural or a verb into past tense (in English)?

- How would you implement a method to "truecase" a word? What annotation would you need to know for that? E.g. for "hello", "Hello" or "HELLO", the true casing is "hello" (so lowercase), while e.g. for "Rudolf" or "RUDOLF" it is "Rudolf" (so titlecase)...

- Feel free to try writing some pieces of code to try out your ideas in practice. But you do not have to code everything, the important part is to think it through.

-

Classes in Python

- creating a class to represent a word with some linguistic annotations and methods

- class Word:, w = Word(), w.form = "help", def foo(self, x, y):, self.form, a.foo(x, y)

- def __init__(self, x, y), def __str__(self), from Module import Class, if __name__ == "__main__":

  - a module is typically a .py file; you can just import the module, or even import specific classes from the module

  - beware of name clashes; but you can always import MyModule as SomeOtherName

- inheritance: class B(A); overriding is the default, just redefine the method; use super().foo() to invoke parent's implementation

- static members (without self, belong to class) -- feel free to ignore this and just use non-static members only, mostly this is fine... class A, a = 5, A.a = 10, def b(x, y), A.b(x, y)

- a package is basically a directory containing multiple modules -- packA/modA.py, packA/modB.py, from packA.modB import classC...
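To make the keywords above concrete, here is one possible sketch of a word class with a constructor, a string representation, a simplified truecasing method and a subclass. The attribute names, the tag values PROPN and NOUN, and the pluralisation rule are illustrative assumptions, not the solution developed in the class.

```python
class Word:
    """A word token with a few linguistic annotations."""

    def __init__(self, form, lemma=None, pos=None):
        self.form = form
        self.lemma = lemma if lemma is not None else form.lower()
        self.pos = pos

    def __str__(self):
        return f"{self.form}/{self.lemma}/{self.pos}"

    def truecase(self):
        """Titlecase proper nouns, lowercase everything else (simplified)."""
        if self.pos == 'PROPN':
            return self.form.title()
        return self.form.lower()


class Noun(Word):
    """A noun; inherits everything from Word and adds a plural method."""

    def __init__(self, form, lemma=None):
        # Invoke the parent's implementation, fixing the part of speech.
        super().__init__(form, lemma, pos='NOUN')

    def plural(self):
        """Very rough English pluralisation; many exceptions are ignored."""
        if self.lemma.endswith(('s', 'x', 'z', 'ch', 'sh')):
            return self.lemma + 'es'
        return self.lemma + 's'


if __name__ == "__main__":
    print(Word('RUDOLF', pos='PROPN').truecase())
    print(Noun('bus').plural())
    print(Noun('train'))
```

The truecase method shows why the part-of-speech annotation is needed: the form alone does not tell you whether "RUDOLF" should become "Rudolf" or "rudolf".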

-

Pickle: simple storing of objects into files (and then loading them again)

- Python has a simple mechanism for storing any object (list, dict, dict of lists, any object you defined, or really nearly anything) in special binary files.

- To store an object (e.g. the list my_list), use pickle.dump():

my_list = ['hello', 'world', 'how', 'are', 'you?']
import pickle
# Need to open the file for writing in binary mode
with open('a_list.pickle', 'wb') as pickle_file:
    # Store the my_list object into the 'a_list.pickle' file
    pickle.dump(my_list, pickle_file)

- A file a_list.pickle gets created with some unreadable binary data (next week, we get to ways of storing data in a more readable way).

- However, for Python, the data is perfectly readable, so you can easily load your object like this (i.e. you can put this code into another Python script and run it, say, the next day when you need to get your list back):

import pickle
# This time we need to open the file for reading in binary mode
with open('a_list.pickle', 'rb') as the_file:
    the_list = pickle.load(the_file)
# And now you have the list back!
print(the_list)
print(the_list[3])

-

-

Virtual environments

- Sometimes you need several different "installations" of Python -- you need version 1.2.3 of a package for project A, but version 3.5.6 for project B, etc.

- The answer is to create several separate virtual environments:

- Once for each project, create a venv for the project; specify any path you like to store the environment:

python3 -m venv ~/venv_proj_A

- Every time you start working on project A, switch to the right venv:

source ~/venv_proj_A/bin/activate

- Checking that everything looks fine:

  - Your prompt should now show something like (venv_proj_A)

  - Your python and python3 should now be local just for this venv:

    - Try running which python and which python3

    - This should print out paths within the venv, e.g. /home/rosa/venv_proj_A/bin/python3

  - Your pip should now be a local pip just for this venv (and pip and pip3 should be identical):

    - which pip should say something like /home/rosa/venv_proj_A/bin/pip

    - pip --version should mention python 3

- To install Python packages just for this project:

  - Use pip install package_name (instead of the usual pip3 install --user package_name)

  - The package will be installed locally just for this venv

- To get out of the venv:

  - run deactivate

  - or close the terminal
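Besides which python, you can also check from inside Python whether the running interpreter belongs to a virtual environment. A small sketch, assuming the environment was created with python3 -m venv as above; the helper name in_virtualenv is made up for this example.

```python
import sys


def in_virtualenv():
    """Return True if the running interpreter belongs to a venv."""
    # Inside a venv, sys.prefix points to the venv directory, while
    # sys.base_prefix still points to the underlying Python installation.
    return sys.prefix != sys.base_prefix


print('interpreter:', sys.executable)
print('inside a venv:', in_virtualenv())
```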

-

Exercise: implement a simple Czech POS tagger in Python, choose any approach you want, required precision at least 50%

-

Tagger input format - data encoded in iso-8859-2 in a simple line-oriented plain-text format: empty lines separate sentences, non-empty lines contain word forms in the first column and simplified (one-letter) POS tags in the second column, such as N for nouns or A for adjectives (you can look at the tagset documentation). Columns are separated by tabs.

-

Tagger output format: empty lines not changed, nonempty lines enriched with a third column containing the predicted POS for each line

-

Training data: tagger-devel.tsv

-

Evaluation data: tagger-eval.tsv (to be used only for evaluation!!!)

-

Performance evaluation (precision=correct/total): eval-tagger.sh

cat tagger-eval.tsv | ./my_tagger.py | ./eval-tagger.sh

-

Example baseline solution - everything is a noun, precision 34%:

python -c'import sys;print"".join(s if s<"\r" else s[:-1]+"\tN\n"for s in sys.stdin)'<tagger-eval.tsv|./eval-tagger.sh

prec=897/2618=0.342627960275019
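One simple step beyond the all-nouns baseline is to remember the most frequent tag of each word form seen in the training data and to fall back to N for unseen forms. The sketch below is only one possible approach; the function names and the toy data are invented for this illustration and are not taken from the course files.

```python
from collections import Counter, defaultdict


def train(lines):
    """Count how often each word form occurs with each tag."""
    counts = defaultdict(Counter)
    for line in lines:
        line = line.rstrip('\n')
        if line:
            form, tag = line.split('\t')[:2]
            counts[form][tag] += 1
    # Keep only the most frequent tag of each form.
    return {form: tags.most_common(1)[0][0] for form, tags in counts.items()}


def tag_lines(lines, model, default='N'):
    """Yield the input lines with a predicted tag added as the last column."""
    for line in lines:
        line = line.rstrip('\n')
        if not line:
            yield ''  # sentence boundary stays unchanged
        else:
            form = line.split('\t')[0]
            yield line + '\t' + model.get(form, default)


# Toy data invented for this illustration.
train_data = ['pes\tN\n', 'velký\tA\n', 'pes\tN\n', '\n', 'je\tV\n']
test_data = ['velký\tA\n', 'dům\tN\n', '\n', 'je\tV\n']

model = train(train_data)
for out_line in tag_lines(test_data, model):
    print(out_line)
```

With the real data, the files would have to be opened with encoding='iso-8859-2' (or standard input decoded accordingly), since the data is not in UTF-8.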

-

Left over stuff from previous classes

-

Print out the unigrams sorted by count.

Use sorted(); it behaves similarly to max()

Or use Counter.most_common()

-

Get unigrams with count > 5; can be done with list comprehension:

[token for token in unigrams if unigrams[token] > 5]

-

Count bigrams in the text into a dict of Counters

bigrams = defaultdict(Counter)
bigrams[first][second] += 1

-

For each unigram with count > 5, print it together with its most frequent successor.

[(token, something) for …]

-

Print the successor together with its relative frequency rounded to 2 decimal digits.

max(), sum(), dict.values(), round(number, ndigits)

-

Print a random token. Print a random unigram disregarding their distribution.

import random
?random.choice
list(dict.keys())

-

Pick a random word, generate a string of 20 words by always picking the most frequent follower.

range(10)

-

Put that into a function, with the number of words to be generated as a parameter. Return the result in a list.

list.append(item)
def function_name(parameter_name=default):
    # do something
    return 123

-

Sample the next word according to the bigram distribution

import numpy as np
?np.random.choice
np.random.choice(list, p=list_of_probs)
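Several of the steps above can be put together in a short sketch. To keep it free of external dependencies, it samples with random.choices from the standard library rather than np.random.choice; the toy sentence and the function names are assumptions made for this example.

```python
import random
from collections import Counter, defaultdict

tokens = "the cat sat on the mat and the cat slept".split()

# Unigram counts and bigram counts (a dict of Counters).
unigrams = Counter(tokens)
bigrams = defaultdict(Counter)
for first, second in zip(tokens, tokens[1:]):
    bigrams[first][second] += 1


def most_frequent_successor(word):
    """Return (successor, relative frequency rounded to 2 digits)."""
    successor, count = bigrams[word].most_common(1)[0]
    return successor, round(count / sum(bigrams[word].values()), 2)


def generate(start, length=20):
    """Generate up to `length` words, sampling each next word
    according to the bigram distribution of the previous one."""
    words = [start]
    # Stop early if the last word has no known successor.
    while len(words) < length and bigrams.get(words[-1]):
        followers = bigrams[words[-1]]
        next_word = random.choices(list(followers),
                                   weights=list(followers.values()))[0]
        words.append(next_word)
    return words


print(unigrams.most_common(3))
print(most_frequent_successor('the'))
print(' '.join(generate(random.choice(tokens), 10)))
```

Replacing the random.choices call with the most frequent successor gives the deterministic variant from the earlier task.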

Encoding in Python

-

a simple rule: use Unicode everywhere, and if conversions from other encodings are needed, do them as close to the physical data as possible (i.e., encoding should be handled already in the data reading/writing phase, not internally by decoding the content of variables)

-

example:

import codecs, sys
# decode the input while reading it
f = open(fname, encoding="latin-1")
# encode everything printed to standard output as UTF-8
sys.stdout = codecs.getwriter('utf-8')(sys.stdout.buffer)
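The rule can be illustrated by converting a file from ISO-8859-2 to UTF-8: the decoding happens in open() when reading, the encoding in open() when writing, and the code in between only ever sees str objects. This is a minimal sketch; the file names and the sample sentence are made up for illustration.

```python
import os
import tempfile

czech = 'Příliš žluťoučký kůň\n'

tmp_dir = tempfile.mkdtemp()
latin2_path = os.path.join(tmp_dir, 'latin2.txt')
utf8_path = os.path.join(tmp_dir, 'utf8.txt')

# Prepare an input file in ISO-8859-2 so that the example is self-contained.
with open(latin2_path, 'w', encoding='iso-8859-2') as fh:
    fh.write(czech)

# Decode while reading, encode while writing; in between, work with str only.
with open(latin2_path, encoding='iso-8859-2') as src, \
        open(utf8_path, 'w', encoding='utf-8') as dst:
    for line in src:
        dst.write(line.upper())

# Look at the raw bytes of the result.
with open(utf8_path, 'rb') as fh:
    raw = fh.read()
print(raw)
```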

8. A gentle introduction to XML

Nov 25, 2020 XML

-

Individual preparation before the class (45 minutes at most)

-

Let's have a look at what can go wrong in XML files (in the sense of violating XML syntax).

-

Download the collection of toy examples of correct and incorrect XML files: xml-samples.zip

-

If you are familiar with XML already, then

- without using any library for parsing XML files (but you can use e.g. regular expressions), implement a Python script which automatically recognizes whether a file conforms to the following subset of the XML grammar:

- elements must be properly nested (no crossing elements)

- there is exactly one root element

- elements can have attributes; single quotes are used for values

- Your script does not have to handle: empty elements, comments, declaration, CDATA, processing instructions.

- Your script input/output:

- Input: a file name

- Output: print 'CORRECT' or 'INCORRECT' (possibly followed by error identification - not required)

- Apply your script on all files in the above-mentioned collection. Ideally, the output of your checker should perfectly correspond to the names of the files (but it might be hard to reach the perfect agreement within the given time).

- Be ready for showing your solution during the online Zoom class.


-

If XML is new to you, then

- apply any existing XML checker on all 18 XML-incorrect files from the above-mentioned collection and see how the checker reports particular types of errors. You can use e.g.:

- a bash command line tool such as xmllint

- an online XML checker such as https://www.xmlvalidation.com/ or https://codebeautify.org/xmlvalidator

- In the remaining time, think about how you would recognize similar errors using Python, if you were not allowed to use any existing library specialized in XML. Be ready to explain your thoughts during the online Zoom class.


-

-

Motivation for XML, basics of XML syntax, examples, well-formedness/validity, dtd, xmllint
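Well-formedness can also be checked from Python with the standard library parser, which raises an exception on the first syntax violation. The sketch below covers well-formedness only; validity against a DTD is not checked by xml.etree.ElementTree, so a tool such as xmllint is still needed for that. The helper name and the sample strings are made up for this example.

```python
import xml.etree.ElementTree as ET


def is_well_formed(xml_string):
    """Return True if the string parses as XML, False otherwise."""
    try:
        ET.fromstring(xml_string)
        return True
    except ET.ParseError:
        return False


good = "<sentence id='s1'><word pos='N'>bus</word></sentence>"
crossing = "<a><b></a></b>"       # crossing elements
two_roots = "<a></a><b></b>"      # more than one root element

for sample in (good, crossing, two_roots):
    print(is_well_formed(sample), sample)
```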

-

XML exercise (to be started in class and finished as homework):

- Create an XML file representing some data structures (ideally NLP-related) manually in a text editor, or by a Python script.

- The file should contain at least 7 different elements, some of them should have attributes.

- Create a DTD file and make sure that the XML file is valid w.r.t. the DTD file.

- Create a Makefile that has targets wellformed and valid and uses xmllint to verify the file's well-formedness and its validity with respect to the DTD file.

-

HTML vs. XML exercise:

- modify an HTML file (such as the simple example given here) so that it becomes a well-formed XML file

9. XML & JSON

Dec 02, 2020

-

Individual preparation before the class (45 minutes at most)

- Download the HTML code of this course's web page and check whether it conforms to all rules of the XML syntax:

wget http://ufal.mff.cuni.cz/courses/npfl092

xmllint --noout npfl092

-

Try to fix as many violations of the rules in the HTML file as you can in the given time, by any means (either manually or e.g. by Python regular expressions). Can you turn the HTML file to a completely well-formed XML file?

-

In order to avoid any confusion: this is just an exercise, XML and HTML are only cousins, and HTML files are usually not required nor expected to be well-formed XML files; HTML validity can be checked by some other tools such as by the W3C Markup Validation Service. You can check the HTML-validity of the course web page too if time remains.

We'll briefly discuss Markdown, which is a markup language too. You can play with an online Markdown-to-HTML converter.

-

A very quick overview of some XML-related standards (namespaces, XPath, XSL, SAX, DOM)

-

Let's apply XPath queries on a sample file such as books.xml using an online XPath evaluator

- Intro to XML and JSON processing in Python

- For the Python exercises, we'll use the Google Colab Notebook again.
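As a small taste of XML and JSON processing in Python, the sketch below parses an XML string with the standard library, runs a simple XPath-like query, and converts the data to JSON. The tiny bookstore document is invented for this illustration and only loosely modelled on the books.xml sample.

```python
import json
import xml.etree.ElementTree as ET

# A tiny document invented for this illustration.
xml_text = """<bookstore>
  <book category="cooking"><title>Everyday Italian</title><price>30.00</price></book>
  <book category="web"><title>Learning XML</title><price>39.95</price></book>
</bookstore>"""

root = ET.fromstring(xml_text)

# ElementTree supports a limited subset of XPath in find()/findall().
web_titles = [t.text for t in root.findall("./book[@category='web']/title")]

# Convert the XML tree into plain Python data and serialize it as JSON.
books = [
    {
        "category": book.get("category"),
        "title": book.findtext("title"),
        "price": float(book.findtext("price")),
    }
    for book in root.findall("book")
]
json_text = json.dumps(books, indent=2)

print(web_titles)
print(json_text)
```

Full XPath support is not part of the standard library; for more complex queries, a third-party library would be needed.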

Optional reading:

10. Spacy, NLTK and other NLP frameworks

Dec 09, 2020

Before the class

- Install Spacy -- in Bash:

pip3 install --user spacy

- Install Spacy English model -- in Bash:

python3 -m spacy download --user en_core_web_sm
# or: pip3 install --user en_core_web_sm

- Optionally, also install Spacy models for some other language(s) of your interest. 15 languages are directly available within Spacy: https://spacy.io/usage/models. Sometimes there are multiple models of multiple sizes.

- Install NLTK -- in Bash:

pip3 install --user nltk

- Install NLTK data and models -- in Python:

import nltk
nltk.download()
# usually, you should choose to download "all" (but it may get stuck)

I have not tested everything on Google Colab. Spacy seems to be installed including at least some of the models. NLTK seems to be installed without models and data, so these have to be downloaded. Nevertheless, please also try to install everything on your machine if possible; and definitely on the remote lab machine so that you can test stuff there.

Why use an NLP framework?

How is it better than other options, i.e. manual implementation or using existing standalone tools? (Note: the benefits of using a framework listed below are not necessarily true for all frameworks.)

- You can read in data in various formats, convert to unified representation, no need for further conversions to use with the tools, unified structured API to access the annotated data

- You get a number of tools in one batch, ready to use, with unified APIs

- You can often do everything from one or more Python scripts and run the whole pipeline at once, while standalone tools typically have to be run and their inputs and outputs manipulated from terminal/bash script/Makefile

- Built in visualisation

- Apply but also train the tools (for machine learning you can go to: NPFL129 Machine Learning for Greenhorns, NPFL114 Deep Learning, NPFL054 Introduction to machine learning, NAIL029 Machine Learning, NPFL104 Machine Learning Exercises)

Spacy tutorial

In Bash (install Spacy and English model):

pip3 install --user spacy

python3 -m spacy download --user en_core_web_sm

# or: pip3 install --user en_core_web_sm

All officially available models: https://spacy.io/usage/models Sometimes there are multiple models of multiple sizes. For other languages, you have to find or create a model.

In Python (import spacy and load the English model):

import spacy

nlp = spacy.load("en_core_web_sm")

Create a new document:

doc = nlp("The duck-billed platypus (Ornithorhynchus anatinus) is a small mammal of the order Monotremata found in eastern Australia. It lives in rivers and on river banks. It is one of only two families of mammals which lay eggs.")

The document is automatically processed (tokenized, tagged, parsed...)

list(doc)

for token in doc:
    print(token.text)
    # or simply: print(token)

for token in doc:
    print(token.text, token.lemma_, token.pos_, token.tag_, token.dep_, token.shape_, token.is_alpha, token.is_sent_start, token.is_stop, sep='\t')

for sentence in doc.sents:
    print(sentence, sentence.root)

for ent in doc.ents:
    print(ent.text, ent.start_char, ent.end_char, ent.label_)

list(doc.noun_chunks)

Spacy can also do visualisations:

from spacy import displacy

displacy.serve(doc, style="dep")

displacy.serve(doc, style="ent")

Larger models also contain word embeddings and can do word similarity: https://spacy.io/usage/spacy-101#vectors-similarity

Exercise

- process some text in Spacy

- for each word, print out the word and its part-of-speech tag

- print out the output as TSV, one token per line, wordform POStag separated by a tab, with an empty line separating sentences

NLTK tutorial

Installation:

# in terminal

pip3 install --user nltk

ipython3

import nltk

# optionally:

# nltk.download()

# usually, you should choose to download "all" (but it may get stuck)

A very similar tutorial to what we do in the class is available online at Dive Into NLTK; we mostly cover the contents of the parts I, II, III and IV.

Using existing tools in NLTK

Sentence segmentation, word tokenization, part-of-speech tagging, named entity recognition. Use genesis or any other text.

text = """The duck-billed platypus (Ornithorhynchus anatinus) is a small
mammal of the order Monotremata found in eastern Australia. It lives in
rivers and on river banks. It is one of only two families of mammals which
lay eggs."""

# or use e.g. Genesis again
# with open("genesis.txt", "r") as f:
#     text = f.read()

sentences = nltk.sent_tokenize(text)

# just the first sentence
tokens_0 = nltk.word_tokenize(sentences[0])
tagged_0 = nltk.pos_tag(tokens_0)

# all sentences
tokenized_sentences = [nltk.word_tokenize(sent) for sent in sentences]
tagged_sentences = nltk.pos_tag_sents(tokenized_sentences)

ne = nltk.ne_chunk(tagged_0)
print(ne)
ne.draw()

Exercise

- process some text in NLTK

- for each word, print out the word and its part-of-speech tag

- print out the output as TSV, one token per line, wordform POStag separated by a tab, with an empty line separating sentences
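The output format itself is independent of the framework. A minimal sketch, assuming the tagged text is already available as a list of sentences, each a list of (wordform, tag) pairs, which is the shape nltk.pos_tag_sents() returns; the function name and the hand-written sample are illustrative, not actual tagger output.

```python
def to_tsv(tagged_sentences):
    """Format sentences of (wordform, tag) pairs as TSV text:
    one token per line, an empty line between sentences."""
    blocks = []
    for sentence in tagged_sentences:
        blocks.append('\n'.join(f'{form}\t{tag}' for form, tag in sentence))
    return '\n\n'.join(blocks) + '\n'


# Hand-written sample; the tags are illustrative.
sample = [
    [('It', 'PRP'), ('lives', 'VBZ'), ('.', '.')],
    [('It', 'PRP'), ('sleeps', 'VBZ'), ('.', '.')],
]
print(to_tsv(sample), end='')
```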

Trees in NLTK

Let's create a simple constituency tree for the sentence A red bus stopped suddenly:

# what we want to create:
#
#             S
#           /   \
#         NP     VP
#       / | \    /  \
#      A red bus stopped suddenly
#

from nltk import Tree

# Tree(root, [children])
np = Tree('NP', ['A', 'red', 'bus'])
vp = Tree('VP', ['stopped', 'suddenly'])

# children can be strings or Trees
s = Tree('S', [np, vp])

# print out the tree
print(s)

# draw the tree (opens a small graphical window)
s.draw()

And a dependency tree for the same sentence:

# what we want to create:
#
#        stopped
#        /     \
#      bus   suddenly
#     /  |
#    A  red

# can either use string leaf nodes:
t1 = Tree('stopped', [Tree('bus', ['A', 'red']), 'suddenly'])
t1.draw()

# or represent each leaf node as a Tree without children:
t2 = Tree('stopped', [Tree('bus', [Tree('A', []), Tree('red', [])]), Tree('suddenly', [])])
t2.draw()

Overview of NLP frameworks

Note: some of the frameworks/toolkits are in (very) active development; therefore, the information listed here may easily fall out of date.

- NLTK Natural Language Toolkit (http://www.nltk.org/, reasonable tutorial https://textminingonline.com/dive-into-nltk-part-i-getting-started-with-nltk, NLTK book http://www.nltk.org/book/) — good for English, usable for other langs, not much support for e.g. Czech (you have to manually read in Czech corpora, process them into required format and train the tools you need); reasonably easy integration of existing standalone NLP tools (API to run e.g. the Stanford tools — you have to install them independently and set up some system variables correctly so that NLTK finds them, but then you can invoke them directly from NLTK)

- Treex (http://ufal.mff.cuni.cz/treex) — ÚFAL NLP toolkit, best for Czech, good for English, built-in support for several other langs (nl, de, pt, es…), support for ud; only in Perl; attempt to port API to Python: PyTreex (https://github.com/ufal/pytreex); web interface: TreexWeb (https://lindat.mff.cuni.cz/services/treex-web/)

- Stanford CoreNLP (http://stanfordnlp.github.io/CoreNLP/) — EN, ZH, ES, AR, FR, DE; comprehensive framework, good performance; Java, command-line interface, web service, APIs in ~15 langs (incl. Python, PHP, JavaScript…), also some integration with NLTK

- OpenNLP (https://opennlp.apache.org/) — comprehensive framework; Java, command-line interface

- Gate — good for abstracting over complex pipelines

- Spacy — very easy to use, already quite big and powerful and continually growing

- CogComp-NLP — simple online interface; supports only English

- UDPipe (https://ufal.mff.cuni.cz/udpipe) -- trainable pipeline for tokenizing, tagging, lemmatizing and parsing Universal Treebanks and other CoNLL-U files (use from the command line, or through bindings for C++, Python, Perl, C#, Java) -- useful if you don't want to do anything sophisticated within the framework but just want to get the analyses (and either do the processing yourself manually or within another framework, or no processing is needed...)

- Udapi (http://udapi.github.io/) — lightweight toolkit for working with Universal Dependencies — currently can really only read in and write out data, but when it reads them in, you can access them through a rather nice API; Python, Perl, Java (go to NPFL070 Language Data Resources)

- search in corpora: PMLTQ (https://lindat.mff.cuni.cz/services/pmltq/) — go to NPFL075 Prague Dependency Treebank

- deep learning: TensorFlow (https://www.tensorflow.org/) — go to NPFL114 Deep Learning

- information retrieval: Retriever, Lucene — go to NPFL103 Information Retrieval

- dialogue systems: Alex (https://github.com/UFAL-DSG/alex) — go to NPFL099 Statistical dialogue systems

11. REST API

Dec 16, 2020

Quick start

A peek into requests library and REST APIs

- getting resources from the internet

  - static resources (using Python instead of wget)

import requests
url = 'http://p.nikde.eu'
response = requests.get(url)
response.encoding = 'utf8'
print(response.text)

  - dynamic resources provided through a REST API; for a given REST API you want to use, look for its documentation on its website

# you need to find out what the URL of the endpoint is
url = 'http://lindat.mff.cuni.cz/services/translation/api/v2/models/en-cs'
# you need to find out what parameters the API expects
data = {"input_text": "I want to go for a beer today."}
# sometimes, you may need to specify some headers (often not necessary)
headers = {"accept": "text/plain"}
# some APIs support `get`, some support `post`, some support both
response = requests.post(url, data=data, headers=headers)

REST

- client-server communication

- simple, lightweight, text-based

- using HTTP

- stateless

- to use a RESTful resource, you need to know:

- its URL (also called identifier, endpoint address...)

- parameters to specify (optional)

- the method to use (typically GET or POST)

- GET has practical length limits (URL + parameters are often capped at around 2048 characters in total, depending on the client and server)

- the response is often JSON, but can be in any other text-based format

Curl and UDPipe REST API

- http://lindat.mff.cuni.cz/services/udpipe/api-reference.php

- to tokenize, tag and parse a short English text, you can run curl directly in the terminal (--data specifies data to send via the POST method; to use GET, you would put the parameters directly into the URL):

curl --data 'model=english&tokenizer=&tagger=&parser=&data=Christmas is coming! Are you ready for it?' http://lindat.mff.cuni.cz/services/udpipe/api/process

- to print out the result as plaintext, you can pipe it to:

python -c "import sys,json; print(json.load(sys.stdin)['result'])"

- to perform only sentence segmentation and tokenization, use only the tokenizer= processor (no tagger and parser), and set output=horizontal

- so you can use REST APIs directly from the terminal; but it is probably more comfortable from Python

-

Calling a REST API from Python

- use the requests module, which has a get() function (as well as a post() function); provide the URL of the API, and the parameters (if any) as a dictionary:

import requests
url = 'http://lindat.mff.cuni.cz/services/udpipe/api/process'
params = dict()
params["model"] = "english"
params["tokenizer"] = ""
params["tagger"] = ""
params["parser"] = ""
params["data"] = "Christmas is coming! Are you ready for it?"
response = requests.get(url, params)

- the response contains a lot of fields, the most important being text, which contains the content of the response; often (but not always) it is in JSON, so you might want to load it using json.loads(), but you can also get it directly using .json():

import json
# the "raw" response
print(response.text)
# if the response is in JSON:
print(json.loads(response.text))
# or:
print(response.json())
# if the JSON contains the 'result' field (for UDPipe it does):
print(response.json()['result'])

- The requests module makes an educated guess as to the encoding of the response. If it guesses wrong, you can set the encoding manually, e.g.:

response.encoding='utf8'
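To see what happens to the parameters in a GET request, you can build the URL by hand with the standard library; this is roughly what the requests module does for you. The sketch below works offline: it sends nothing, and the response body is a made-up string that only imitates the shape of a UDPipe answer.

```python
import json
from urllib.parse import urlencode, parse_qs, urlsplit

url = 'http://lindat.mff.cuni.cz/services/udpipe/api/process'
params = {
    'model': 'english',
    'tokenizer': '',
    'data': 'Christmas is coming!',
}

# For GET, the parameters are percent-encoded and appended to the URL.
full_url = url + '?' + urlencode(params)
print(full_url)

# The server can recover the original values by decoding the query string.
decoded = parse_qs(urlsplit(full_url).query, keep_blank_values=True)

# A made-up response body for illustration; a real response has more content.
response_text = '{"model": "english", "result": "Christmas\\nis\\ncoming\\n!\\n"}'
result = json.loads(response_text)['result']
print(result, end='')
```

Spaces and punctuation in the data are escaped in the URL, which is one reason why longer texts are more conveniently sent with POST.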

Try using some other RESTful web services

-

Some NLP tools with REST APIs available at ÚFAL:

-

-

links e.g. to Cat facts :-)

curl 'https://cat-fact.herokuapp.com/facts/random?animal_type=dog'

-

or to Random cats

import requests
from io import BytesIO
from PIL import Image
rcat = requests.get('https://aws.random.cat/meow')
img_url = rcat.json()['file']
rimg = requests.get(img_url)
img = Image.open(BytesIO(rimg.content))
img.show()

-

-

joining multiple things together:

# assumes `import requests` and a Spacy model loaded into `nlp`
def randomfact(animal='cat'):
    url = 'https://cat-fact.herokuapp.com/facts/random?animal_type=' + animal
    response = requests.get(url)
    j = response.json()
    print(j['text'])
    d = nlp(j['text'])
    for entity in d.ents:
        print(entity, entity.label_)

hw_rest Building REST APIs Questions

12. Final test

Jan 06, 2021 Questions

Assignments

Notes

- Submit assignments via Git (except for the first two assignments). Use the assignment names as directory names.

- We will only look at the last version submitted before the deadline.

- The estimated durations are only approximate. If possible, please let us know how much time you spent with each assignment, so that we can improve the estimates for future students.

1. hw_ssh

Duration: 10-30min 100 points Deadline: Oct 11 23:59, 2020

In this homework, you will practice working through SSH.

- Connect remotely from your home computer to the MS lab

  - Linux: ssh in terminal

  - Windows 10: ssh in commandline -- open the command prompt (Windows+R), type cmd, press Enter, and you are in the commandline

  - Windows: Putty

  - Chrome browser: Secure Shell extension

  - Android: JuiceSSH

- Check that you can see there the data from the class (or use wget and unzip to get the UDHR data to the computer from https://ufal.mff.cuni.cz/~rosa/courses/npfl092/data/udhr.zip)

- Try practising some of the commands from the class: try renaming files, copying files, changing file permissions, etc.

- Try to create a shell script that prints some text, make it executable, and run it, e.g.:

echo 'echo Hello World' > hello.sh
chmod u+x hello.sh
./hello.sh

- List your "friends":

  - Create an executable script called friends.sh that lists all users which have the same first character of their username as you do.

  - Hint: in our lab, all users whose username starts with "r" have their home directories in /afs/ms/u/r/.

  - So you just need to list the contents of such a directory.

- Put your scripts into a shared directory:

  - Go to /afs/ms/doc/vyuka/INCOMING/TechnoNLP/.

  - Create a new directory there for yourself.

  - Use your last name as the name for the directory.

  - In this new directory, create another directory called hw_ssh.

  - Copy your two scripts into the hw_ssh directory.

-

You can also try connecting to the MS lab from your smartphone and running a few commands -- this will let you experience the power of being able to work remotely in Bash from anywhere...

You should be absolutely confident in doing these tasks. If you are not, take some more time to practice.

And, as always, contact us per e-mail if you run into any problems!

2. hw_makefile

Duration: 1-3h 100 points Deadline: Oct 26 23:59 CEST 2020

Create a Makefile with targets t1-t18, performing the tasks 1-18 listed below.

Put your Makefile into a new directory called hw_makefile/ and submit using Git to the gitlab server (this will be practiced during the online practicals on October 21).

-

print the text

Hello world -

using wget, download skakalpes-il2.txt

-

view the file using cat and less

-

using iconv, convert the file from iso-8859-2 to utf-8 and store it into skakalpes-utf8.txt

-

view the new file

-

count the number of lines in the file using wc

-

using head and tail, view the first 15 lines, the last 15 lines, and lines 10-20 (careful!)

-

using cut, print the first two words on each line

-

using grep, print all lines containing a digit

-

using sed, substitute spaces and punctuation marks with the new line symbol (\n), so that there is at most one word per line

-

using grep, avoid empty lines

-

using sort, sort the words alphabetically

-

using wc, count the number of words in the text

-

using sort|uniq, count the number of distinct words in the text

-

using sort|uniq -c|sort -nr, create a frequency list of words

-

create a frequency list of letters

-

using paste, create the frequency list of word bigrams (create another file with lines shifted upwards by one, merge it by paste with the original file and make a frequency list of the lines)

-

Longer exercise: write a shell script that downloads the main web page of some news server and finds all word bigrams in it in which both words are capitalized. Make a frequency list of HTML tags used in the document.

3. hw_git

Duration: 10min-1h 100 points Deadline: Nov 02 23:59

Go again through the instructions for using Git and GitLab, and make sure everything works both on the lab computers (connect through SSH to check this) and on your home computer. Then proceed with the following "toy" homework assignment:

-

On your home computer, clone your repository from the remote repository (i.e. GitLab) and go into it.

-

In your Git repository, create a directory called hw_git and add it into Git (git add hw_git).

-

In this directory, create a text file that contains at least 10 lines of text, e.g. copied from a news website (and add it into Git).

-

Commit the changes locally (e.g. git commit -m'adding text file').

-

Create a new Bash script called sample.sh in the directory. When you run the Bash script (./sample.sh), it should write out the first 5 lines from the text file.

-

Commit the changes locally.

-

Push the changes to the remote repository (i.e. GitLab).

-

Connect to a lab computer through SSH, clone the repository from the remote repository (i.e. GitLab), try to find your script and run it to see that everything works fine. (If it does not, fix it.)

-

Still through SSH, change the script to only print the first 2 lines from the file.

-

Commit and push the changes. (Even though the script file is already part of the Git repository, i.e. it is "versioned", the new changes are not, so you still need to either add the current version of the script again (git add sample.sh), or use commit with the -a switch which automatically adds all changes to versioned files.)

-

Go back to your local clone of the repository on your home computer, pull the changes, and check that everything works correctly, i.e. that the script prints the first 2 lines from the file. (If it does not, fix it.)

-

In the local clone, change the script once more, so that it now prints the last 5 lines from the text file. Commit and push.

-

Go again into the repository clone stored in the lab, pull the changes, and check that the script works correctly. (If it does not, fix it.)

-

Copy your solutions for hw_ssh and hw_makefile into the Git repository. Again, make sure to add them, commit them, push them, and check that they work.

-

If you run into problems which you are unable to solve, ask for help!

You will submit all of the following assignments in this way, i.e. through Git, in a directory named identically to the assignment. Once you finish an assignment, always use SSH to connect to the lab, pull the assignment, and check that it works correctly.

4. hw_python

Duration: 1-4h 100 points Deadline: Nov 16 23:59

Create a Python script that does the following things.

For each item in this list, first print out a string saying what you are doing (e.g. NOW DOING TASK: Tokens 3) and then do it.

Put the solution into an executable Python script with a correct shebang, put the script into a directory called hw_python, and push it to your GitLab git repository.

Tokens

-

Create a string containing the first chapter of genesis.

-

Print out the number of characters in it.

-

Split it into "tokens" using the default split() method. We will assume here that these tokens are words (even though they are not, as they may contain punctuation inside).

-

Print out the number of tokens.

-

Print out the first three characters from each token; ideally, use just one line of code.

-

Print out the last two characters from the first 20 tokens.

-

Compute and print out the average word length.

-

Create a frequency list of words and print out the most frequent word (we did exactly this in the class).

-

Create a frequency list of characters and print out the most frequent character.

Sentences

-

Split the text of genesis into sentences. You can assume each sentence ends with . (it does in this case). Beware that you will also get an empty string as the last item, which you probably don't want, so do something about it :-)

-

Print out the number of sentences.

-

Print out the number of characters in each sentence.

-

Split each sentence into tokens; create a list of lists, which is a list of sentences where each sentence is a list of its tokens.

-

Print out the number of words in each sentence.

-

Compute and print out the average sentence length in terms of both words and characters.

-

Print out the first two words from each sentence.

-

Print out the first character from the second word in the third sentence.
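A minimal sketch of the sentence-level steps, again on a short stand-in text rather than the full chapter. Dropping the empty last item with a filter is just one possible way of handling it.

```python
# Stand-in text; the assignment uses the whole first chapter of Genesis.
text = ("In the beginning God created the heaven and the earth. "
        "And the earth was without form, and void. "
        "And God said, Let there be light.")

# split(".") leaves an empty string after the final period; drop empty pieces.
# strip() also removes the space that follows each period.
sentences = [s.strip() for s in text.split(".") if s.strip()]
print(len(sentences))
print([len(s) for s in sentences])               # characters per sentence (period excluded)

tokenized = [s.split() for s in sentences]       # list of sentences, each a list of tokens
print([len(toks) for toks in tokenized])         # words per sentence

avg_words = sum(len(toks) for toks in tokenized) / len(tokenized)
avg_chars = sum(len(s) for s in sentences) / len(sentences)
print(avg_words, avg_chars)

print([toks[:2] for toks in tokenized])          # first two words of each sentence
print(tokenized[2][1][0])                        # first character, second word, third sentence
```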

Dictionaries

- Create a dictionary where each key is a character, and each value is a list of words starting with the given character (still using the Genesis dataset; please lowercase it for this task). So e.g. under the key "w", you would have a list containing ["was", "without", "waters", "was", ...] (in the order in which the words appear in the text, with repeated words included each time they occur).

- Print out the number of words starting with each of the characters.

- Do a similar thing, but now the value is a frequency list represented by a dictionary (plain dictionary or a Counter). So e.g. under the key "w", you would have a dict containing {"was": 17, "without": 1, "waters": 11, ...}

- Print out the most common word starting with each of the characters.
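Both dictionary shapes can be sketched with collections.defaultdict and collections.Counter. This is only one way of doing it, shown on a stand-in sentence; a plain dict with setdefault() would work just as well.

```python
from collections import Counter, defaultdict

# Stand-in text; the assignment uses the whole (lowercased) first chapter of Genesis.
text = "And the earth was without form, and void; and darkness was upon the face of the deep."
words = text.lower().split()

# character -> list of words starting with it, in text order, repeats kept
by_initial = defaultdict(list)
for word in words:
    by_initial[word[0]].append(word)
print({ch: len(ws) for ch, ws in by_initial.items()})

# character -> frequency list (Counter) of the words starting with it
freq_by_initial = defaultdict(Counter)
for word in words:
    freq_by_initial[word[0]][word] += 1
for ch, counts in sorted(freq_by_initial.items()):
    print(ch, counts.most_common(1)[0][0])       # most common word for each character
```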

5. hw_string

Duration: 2-6h 100 points Deadline: Nov 23 23:59

- Use Python for all of the tasks. Your Python script or scripts should process standard input (e.g. import sys; for line in sys.stdin: ...) and write to standard output (print()); i.e. do not work directly with files.

- For each task, decide yourself whether to use basic string operations or regular expressions (or a combination thereof). Feel free to add a comment explaining your decision whenever you do not find the choice straightforward.

- Include a Makefile that has three targets, a, b and c. Select three languages from the ones included in the UDHR dataset udhr.zip. Each of the targets should run all the tasks on the UDHR file for one of your selected languages (i.e. the tasks are the same for each target but the input file is different).

- Use the same Python script for all languages. If some of the tasks do not work well for some of the languages that you selected, explain that in a comment.

- Always first print out the task you are doing (e.g. === NOW DOING TASK 3 ===) and then print out the result of the task.

- Make sure that the UDHR files are available for the script -- either put them into the repo (just the three, not all), or download them in the Makefile (make sure they are downloaded before they are given to the script).
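One possible skeleton for such a script is sketched below: it reads the input stream once, then prints a header and the result for each task. The function names are invented for this illustration, and the two sample tasks mirror the first two items of the task list; the demo uses an in-memory stream so that it runs without any input file.

```python
import io
import sys


def task_underscores(lines):
    """First 20 lines with spaces replaced by underscores."""
    return [line.replace(" ", "_") for line in lines[:20]]


def task_uppercase(lines):
    """Lines on which all letters are uppercase.

    str.isupper() ignores uncased characters such as digits and spaces,
    and returns False for lines without any letters.
    """
    return [line for line in lines if line.isupper()]


def run_tasks(stream, out=sys.stdout):
    """Read the input once, then print a header and the result for each task."""
    lines = [line.rstrip("\n") for line in stream]
    for number, task in enumerate([task_underscores, task_uppercase], start=1):
        print(f"=== NOW DOING TASK {number} ===", file=out)
        for result_line in task(lines):
            print(result_line, file=out)


# Demo on an in-memory stream; a real script would call run_tasks(sys.stdin).
demo_out = io.StringIO()
run_tasks(io.StringIO("PREAMBLE\nAll human beings are born free\n"), demo_out)
print(demo_out.getvalue(), end="")
```

Reading standard input into a list first matters because sys.stdin can only be iterated once, while every task needs to see the whole text.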

The tasks:

- Print out the first 20 lines of the text with spaces substituted by underscores.

- Find and print only lines on which all letters are uppercase.

- Split the text into words. This time, punctuation should not be contained in the words. Print out the last 42 words, one word per line.

- Print words containing at least two consecutive vowels.

- Remove "stop words" from the text. Approximate the list of stop words by the list of words that have at least 10 occurrences in the text. Print either the last 10 lines or the last 200 words from the text.

- Replace numbers by their Roman equivalents. (You can assume that the only number higher than 30 is 1948; its Roman equivalent is MCMXLVIII.) You will get bonus points for nice code, but any solution is OK. Print the whole text.

- Join the input text into one line and reformat it so that each line is wrapped at the nearest end of a word after the 40th character (i.e. after the 40th character on a line, replace the nearest space with a newline; or in other words, each line is at least 40 characters long but after the 40th character only the current word finishes and then there is a line break). Print the whole text.
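The last two tasks are the trickiest ones, so here is a hedged sketch of one way to approach them with regular expressions. The function names are illustrative, and the wrapping function reads "after the 40th character" as "at the first whitespace following the first 40 characters of the line", which is one interpretation of the task.

```python
import re

# Value/symbol pairs in descending order, including the subtractive forms.
ROMAN = [(1000, "M"), (900, "CM"), (500, "D"), (400, "CD"), (100, "C"), (90, "XC"),
         (50, "L"), (40, "XL"), (10, "X"), (9, "IX"), (5, "V"), (4, "IV"), (1, "I")]


def to_roman(n):
    """Convert a positive integer to a Roman numeral by greedy subtraction."""
    parts = []
    for value, symbol in ROMAN:
        count, n = divmod(n, value)
        parts.append(symbol * count)
    return "".join(parts)


def romanize(text):
    """Replace every run of digits in the text with its Roman equivalent."""
    return re.sub(r"\d+", lambda m: to_roman(int(m.group())), text)


def wrap_after_40(text):
    """Join the text into one line, then break after the word that crosses 40 characters."""
    one_line = " ".join(text.split())
    # 40 characters, then the rest of the current word, then whitespace -> newline.
    return re.sub(r"(.{40}\S*)\s+", r"\1\n", one_line)


print(romanize("Article 4 was adopted in 1948."))
print(wrap_after_40("All human beings are born free and equal in dignity and rights. "
                    "They are endowed with reason and conscience."))
```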

6. hw_tagger

Duration: 1-4h 100 points Deadline: Nov 30 23:59

Implement a simple POS tagger using object-oriented programming. Do not forget to also include the Makefile!

- turn your solution to the in-class tagger exercise into an object-oriented solution:

  - implement a class Tagger

  - the tagger class has a method tagger.see(word, pos) which gets a word-pos instance from the training data (and probably stores it into a dictionary or something)

  - the tagger class has a method tagger.save(filename) that saves the tagging model to a file (it is recommended to use pickle; see the overview of pickle in the class on Python classes)

  - the tagger class has a method tagger.load(filename) that loads the tagging model from a file

  - the tagger class has a method tagger.predict(word) that predicts a POS tag for a word given the tagging model

  - you can add any other methods as you see fit

- the tagger should have a reasonable accuracy

  - the simple solution I showed in the class had 75.3%

  - so you should have at least 75.4% :-)

  - I will award bonus points for nice accuracies

  - but do not spend too much time on that; it is sufficient to add a few simple tricks, you do not have to do anything too huge and complex

- the tagger should be usable as a Python module:

  - e.g. if your Tagger class resides in my_tagger_class.py, you should be able to use it in another script (e.g. calling_my_tagger.py) by importing it (from my_tagger_class import Tagger); note that the module file name must not contain hyphens, otherwise the import statement is a syntax error

  - one option of achieving this is by having just the Tagger class in the script, with no code outside of the class (you then need another script to use your tagger)

  - another option is to wrap any code which is outside the class into the if __name__ == "__main__" block, which is executed only if the script is run directly, not when it is imported into another script:

        # This is the Tagger class, which will be imported
        # when you run "from my_tagger_class import Tagger"
        class Tagger:
            def __init__(self):
                self.model = dict()

            def see(self, word, pos):
                self.model[word] = pos

        # This code is only executed when you run the script directly,
        # e.g. "python3 my_tagger_class.py"
        if __name__ == "__main__":
            tagger = Tagger()
            tagger.see("big", "A")

- wrap your solution into a Makefile with the following targets:

  - download - downloads the data

  - train - trains a tagging model given the training file and stores it into a file

  - predict - appends the column with predicted POS to the test file contents and saves the result into a new file

  - eval - prints the accuracy
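To make the required interface more concrete, here is a minimal sketch of a Tagger with the four methods. It is not the in-class solution: the most-frequent-tag strategy, the fallback for unseen words, and the attribute names are assumptions made for this illustration.

```python
import os
import pickle
import tempfile
from collections import Counter, defaultdict


class Tagger:
    """Most-frequent-tag baseline: remembers how often each word had each tag."""

    def __init__(self):
        self.model = defaultdict(Counter)    # word -> Counter of POS tags
        self.tag_counts = Counter()          # overall tag frequencies, for unseen words

    def see(self, word, pos):
        """Record one (word, pos) training instance."""
        self.model[word][pos] += 1
        self.tag_counts[pos] += 1

    def predict(self, word):
        """Return the most frequent tag of the word, or the overall most frequent tag."""
        if word in self.model:
            return self.model[word].most_common(1)[0][0]
        return self.tag_counts.most_common(1)[0][0] if self.tag_counts else None

    def save(self, filename):
        """Pickle the model into a file."""
        with open(filename, "wb") as f:
            pickle.dump((dict(self.model), self.tag_counts), f)

    def load(self, filename):
        """Load a model previously stored by save()."""
        with open(filename, "rb") as f:
            model, self.tag_counts = pickle.load(f)
        self.model = defaultdict(Counter, model)


if __name__ == "__main__":
    tagger = Tagger()
    for word, pos in [("big", "A"), ("dog", "N"), ("dog", "N"), ("dog", "V")]:
        tagger.see(word, pos)
    path = os.path.join(tempfile.mkdtemp(), "model.pickle")
    tagger.save(path)
    restored = Tagger()
    restored.load(path)
    print(restored.predict("dog"), restored.predict("cat"))
```

Storing counts per word rather than just the last seen tag is one of the "simple tricks" that tends to help accuracy, since an ambiguous word is then tagged with its most frequent tag rather than whichever tag happened to come last in the training data.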

7. hw_xml

Duration: 1-2h 100 points Deadline: Dec 7 23:59

Finish the XML+DTD exercise from the class. Do not forget to also include the Makefile!

- XML exercise (to be started in class and finished as homework):

- Create an XML file representing some data structures (ideally NLP-related) manually in a text editor, or by a Python script.

- The file should contain at least 7 different elements, some of them should have attributes.

- Create a DTD file and make sure that the XML file is valid w.r.t. the DTD file.

- Create a Makefile that has targets wellformed and valid and uses xmllint to verify the file's well-formedness and its validity with respect to the DTD file.
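If you decide to generate the XML file by a Python script, a sketch along the following lines may help. The element and attribute names (corpus, sentence, token, pos, ...) are hypothetical, and the fragment has fewer than the required 7 element types. Parsing the result back only confirms well-formedness; validity against the DTD still has to be checked with xmllint.

```python
import xml.etree.ElementTree as ET

# Hypothetical element and attribute names for a tiny tagged corpus.
corpus = ET.Element("corpus", lang="en")
sentence = ET.SubElement(corpus, "sentence", id="s1")
for form, pos in [("Dogs", "NOUN"), ("bark", "VERB"), (".", "PUNCT")]:
    token = ET.SubElement(sentence, "token", pos=pos)
    token.text = form

xml_text = ET.tostring(corpus, encoding="unicode")
print(xml_text)

# Parsing succeeds only if the text is well-formed XML.
reparsed = ET.fromstring(xml_text)
print([(t.text, t.get("pos")) for t in reparsed.iter("token")])
```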

8. hw_xml2json

Duration: 2-6h 100 points Deadline: Dec 14 23:59

Implement conversions between TSV, XML and JSON.

- download a simplified file with Universal Dependencies trees dependency_trees_from_ud.tsv (note: simplification = some columns removed from the standard conllu format)

- write a Python script that converts this data into a reasonably structured XML file

- write a Python script that reads the XML file and converts it into a JSON file

- write a Python script that reads the JSON file and converts it back to the TSV file

- check that the final output file is identical with the original input file

- organize it all in a Makefile with targets download, tsv2xml, xml2json, json2tsv, and check for the individual steps, and a target all that runs them all

- alternatively, you may decide to do the round trip in the opposite direction (i.e. with Makefile targets download, tsv2json, json2xml, xml2tsv, check, and all)

- you can use any existing Python modules (sometimes it may take longer to learn how to use the module than to write the code yourself, but that's also good practice)
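The round trip can be sketched in memory as follows. Be aware that the column names and the layout (one token per line, sentences separated by a blank line) are assumptions made for this illustration; check the actual file before relying on them. The real scripts would read and write files instead of strings.

```python
import json
import xml.etree.ElementTree as ET

# Assumed column names; the real file may have different or more columns.
COLUMNS = ["id", "form", "head", "deprel"]


def tsv_to_xml(tsv):
    """One <sentence> per blank-line-separated block, one <token> per line."""
    root = ET.Element("treebank")
    for block in tsv.strip("\n").split("\n\n"):
        sentence = ET.SubElement(root, "sentence")
        for line in block.split("\n"):
            ET.SubElement(sentence, "token", dict(zip(COLUMNS, line.split("\t"))))
    return ET.tostring(root, encoding="unicode")


def xml_to_json(xml_text):
    """List of sentences, each a list of token dictionaries."""
    root = ET.fromstring(xml_text)
    sentences = [[dict(token.attrib) for token in sentence] for sentence in root]
    return json.dumps(sentences, ensure_ascii=False)


def json_to_tsv(json_text):
    """Write the columns back in their original order."""
    blocks = ["\n".join("\t".join(token[c] for c in COLUMNS) for token in sentence)
              for sentence in json.loads(json_text)]
    return "\n\n".join(blocks) + "\n"


sample = "1\tDogs\t2\tnsubj\n2\tbark\t0\troot\n\n1\tHello\t0\troot\n"
round_trip = json_to_tsv(xml_to_json(tsv_to_xml(sample)))
print(round_trip == sample)   # the check step: output must equal the input
```

In the Makefile, the check target can do the same comparison on the files themselves, e.g. with diff or cmp.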

9. hw_frameworks

Duration: 1-6h 100 points Deadline: Dec 21 23:59

Train a model for an NLP framework.

- train, use and evaluate an NLP model in spaCy or NLTK, for Czech or another language

- it can be a part-of-speech tagger, or another tool

- achieve some non-trivial accuracy (if your accuracy is e.g. 20%, then something is probably wrong)

- wrap your solution into a Makefile, with the targets readme, download, train, eval, show:

  - readme prints out a short text saying what you did and how it went

    - e.g. "I used this and this framework, this and this data for this and this language, I trained this and this model, and its accuracy is XY%, which seems good/bad to me... I noticed this and this behaviour, it does this and this well, it makes these and these errors..."

  - download downloads the linguistic data needed for training and testing the model

    - do not commit any large files into Git

  - train trains the model and stores it into a file or files

  - eval evaluates the trained model

    - and prints out its accuracy or accuracies

  - show applies the model to a few sample sentences

    - and prints out the analysis provided by the model in a meaningful and easy-to-read way

    - e.g. a list of words and their predicted part-of-speech labels

- below are some hints and suggestions how to solve the task, but feel free to go your own way

- as training (and evaluation) data, you can e.g. use:

- an excerpt of Czech Universal Dependencies version of the PDT Treebank:

- or use any treebank for any language

- all UD 2.7 treebanks can be downloaded at http://hdl.handle.net/11234/1-3424

- or use the Universal Dependencies website to navigate to the git repository of a treebank of your choice and get the data from there

- we would actually prefer that so that the downloading does not take ages

- you can use head to cut off just a part of the treebank so that the training does not take ages...