NameTag User's Manual

In a natural language text, the task of named entity recognition (NER) is to identify proper names such as names of persons, organizations and locations. NameTag recognizes named entities in an unprocessed text using MorphoDiTa: the MorphoDiTa library tokenizes the text and performs morphological analysis and tagging, and NameTag identifies and classifies named entities using the algorithm described in Straková et al. 2013. NameTag can also perform NER in custom tokenized and morphologically analyzed and tagged texts.

Like any supervised machine learning tool, NameTag needs a trained linguistic model. This section describes the available language models and the command-line tools. The C++ library is described in the NameTag API Reference.

1. Czech NameTag Models

Czech models are distributed under the CC BY-NC-SA licence. They are trained on Czech Named Entity Corpus 1.1 and 2.0 and internally use MorphoDiTa as a tagger and lemmatizer. Czech models work in NameTag version 1.0 or later.

Czech models are versioned according to their release date. The version format is YYMMDD, where YY, MM and DD are two-digit representations of year, month and day, respectively. The latest version is 140304.
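The YYMMDD scheme can be decoded mechanically. The following Python sketch is only an illustration: the function name is hypothetical, and it assumes that two-digit years refer to the 2000s, which the manual does not state explicitly.

```python
from datetime import date


def parse_model_version(version: str) -> date:
    """Parse a YYMMDD model version such as '140304' into a date.

    Assumption (not stated in the manual): YY means the year 20YY.
    """
    if len(version) != 6 or not (version.isascii() and version.isdigit()):
        raise ValueError(f"expected six digits (YYMMDD), got {version!r}")
    year = 2000 + int(version[:2])
    month = int(version[2:4])
    day = int(version[4:])
    # date() itself rejects impossible months and days.
    return date(year, month, day)
```

Because the fields are ordered from year to day, plain string comparison of two versions also orders them chronologically.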

1.1. Download

The latest version 140304 of the Czech NameTag models can be downloaded from the LINDAT/CLARIN repository.

1.2. Acknowledgements

This work has been using language resources developed and/or stored and/or distributed by the LINDAT/CLARIN project of the Ministry of Education of the Czech Republic (project LM2010013).

Czech models are trained on Czech Named Entity Corpus, which was created by Magda Ševčíková, Zdeněk Žabokrtský, Jana Straková and Milan Straka.

The recognizer research was supported by the projects MSM0021620838 and LC536 of Ministry of Education, Youth and Sports of the Czech Republic, 1ET101120503 of Academy of Sciences of the Czech Republic, LINDAT/CLARIN project of the Ministry of Education of the Czech Republic (project LM2010013), and partially by SVV project number 267 314. The research was performed by Jana Straková, Zdeněk Žabokrtský and Milan Straka.

Czech models use MorphoDiTa as a tagger and lemmatizer, therefore MorphoDiTa Acknowledgements and Czech MorphoDiTa Model Acknowledgements apply.

1.2.1. Publications

- Ševčíková Magda, Žabokrtský Zdeněk, Krůza Ondřej: Named Entities in Czech: Annotating Data and Developing NE Tagger. In: Matoušek, V., Mautner, P. (eds.) TSD 2007. LNCS (LNAI), vol. 4629, pp. 188–195. Springer, Heidelberg (2007).

- Kravalová Jana, Žabokrtský Zdeněk: Czech Named Entity Corpus and SVM-based Recognizer. In: Proceedings of the 2009 Named Entities Workshop: Shared Task on Transliteration. NEWS 2009, pp. 194–201. Association for Computational Linguistics (2009).

- Straková Jana, Straka Milan, Hajič Jan: A New State-of-The-Art Czech Named Entity Recognizer. In: Lecture Notes in Computer Science, Vol. 8082, Text, Speech and Dialogue: 16th International Conference, TSD 2013. Proceedings, Copyright © Springer Verlag, Berlin / Heidelberg, ISBN 978-3-642-40584-6, ISSN 0302-9743, pp. 68-75, 2013

- Straková Jana, Straka Milan and Hajič Jan. Open-Source Tools for Morphology, Lemmatization, POS Tagging and Named Entity Recognition. In Proceedings of 52nd Annual Meeting of the Association for Computational Linguistics: System Demonstrations, pages 13-18, Baltimore, Maryland, June 2014. Association for Computational Linguistics.

1.3. Czech Named Entity Corpus 2.0 Model

The model is trained on the training portion of the Czech Named Entity Corpus 2.0. The corpus uses a detailed two-level named entity hierarchy, whose detailed description is available in the documentation of the Czech Named Entity Corpus 2.0. This hierarchy is an updated version of CNEC 1.1 hierarchy and is more suitable for automatic named entity recognition.

- czech-cnec2.0-<version>.ner: Czech named entity recognizer trained on the training portion of CNEC 2.0. The latest version, czech-cnec2.0-140304.ner, reaches 75.38% F1-measure on the two-level hierarchy and 79.16% F1-measure on the top-level hierarchy of CNEC 2.0 etest data. Model speed: ~40k words/s, model size: ~8MB.

- czech-cnec2.0-<version>-no_numbers.ner: Czech named entity recognizer trained on the training portion of CNEC 2.0, except for the supertype n (Number expressions), which is not included. The latest version, czech-cnec2.0-140304-no_numbers.ner, reaches 77.35% F1-measure on the two-level hierarchy and 80.59% F1-measure on the top-level hierarchy of CNEC 2.0 etest data without the n (Number expressions) supertype. Model speed: ~45k words/s, model size: ~8MB.

1.4. Czech Named Entity Corpus 1.1 Model

The model is trained on the training portion of the Czech Named Entity Corpus 1.1. The corpus uses a detailed two-level named entity hierarchy, whose detailed description is available in the documentation of the Czech Named Entity Corpus 1.1.

- czech-cnec1.1-<version>.ner: Czech named entity recognizer trained on the training portion of CNEC 1.1. The latest version, czech-cnec1.1-140304.ner, reaches 75.47% F1-measure on the two-level hierarchy and 78.51% F1-measure on the top-level hierarchy of CNEC 1.1 etest data. Model speed: ~35k words/s, model size: ~7MB.

- czech-cnec1.1-<version>-no_numbers.ner: Czech named entity recognizer trained on the training portion of CNEC 1.1, except for the supertypes c (Bibliographic items), n (Number expressions) and q (Quantitative expressions), which are not included. The latest version, czech-cnec1.1-140304-no_numbers.ner, reaches 77.48% F1-measure on the two-level hierarchy and 80.72% F1-measure on the top-level hierarchy of CNEC 1.1 etest data without the c (Bibliographic items), n (Number expressions) and q (Quantitative expressions) supertypes. Model speed: ~40k words/s, model size: ~7MB.

2. English NameTag Models

English models are distributed under the CC BY-NC-SA licence. They are trained on CoNLL-2003 NER annotations (Sang and De Meulder, 2003) of part of Reuters Corpus and internally use MorphoDiTa (http://ufal.mff.cuni.cz/morphodita/) as a tagger and lemmatizer. English models work in NameTag version 1.0 or later.

English models are versioned according to their release date. The version format is YYMMDD, where YY, MM and DD are two-digit representations of year, month and day, respectively. The latest version is 140408.

2.1. Acknowledgements

This work has been using language resources developed and/or stored and/or distributed by the LINDAT/CLARIN project of the Ministry of Education of the Czech Republic (project LM2010013).

The recognizer research was supported by the projects MSM0021620838 and LC536 of Ministry of Education, Youth and Sports of the Czech Republic, 1ET101120503 of Academy of Sciences of the Czech Republic, LINDAT/CLARIN project of the Ministry of Education of the Czech Republic (project LM2010013), and partially by SVV project number 267 314. The research was performed by Jana Straková, Zdeněk Žabokrtský and Milan Straka.

English models use MorphoDiTa as a tagger and lemmatizer, therefore MorphoDiTa Acknowledgements and English MorphoDiTa Model Acknowledgements apply.

2.1.1. Publications

- Straková Jana, Straka Milan, Hajič Jan: A New State-of-The-Art Czech Named Entity Recognizer. In: Lecture Notes in Computer Science, Vol. 8082, Text, Speech and Dialogue: 16th International Conference, TSD 2013. Proceedings, Copyright © Springer Verlag, Berlin / Heidelberg, ISBN 978-3-642-40584-6, ISSN 0302-9743, pp. 68-75, 2013

- Straková Jana, Straka Milan and Hajič Jan. Open-Source Tools for Morphology, Lemmatization, POS Tagging and Named Entity Recognition. In Proceedings of 52nd Annual Meeting of the Association for Computational Linguistics: System Demonstrations, pages 13-18, Baltimore, Maryland, June 2014. Association for Computational Linguistics.

2.2. English Model

The English model is trained on CoNLL-2003 NER annotations (Sang and De Meulder, 2003) of part of Reuters Corpus. The corpus uses a coarse classification with four classes: PER, ORG, LOC and MISC.

The latest version 140408 of the English-CoNLL NameTag models can be downloaded from LINDAT/CLARIN repository.

The model english-conll-140408.ner reaches 84.73% F1-measure on CoNLL2003 etest data. Model speed: ~20k words/s, model size: ~10MB.

3. Running the Recognizer

The NameTag Recognizer can be executed using the following command:

run_ner recognizer_model

The input is assumed to be in UTF-8 encoding and can be either already tokenized and segmented, or it can be a plain text which is tokenized and segmented automatically.

Any number of files can be specified after the recognizer_model. If an argument input_file:output_file is used, the given input_file is processed and the result is saved to output_file. If only input_file is used, the result is written to standard output. If no argument is given, input is read from standard input and written to standard output.

The full command syntax of run_ner is

Usage: run_ner [options] recognizer_model [file[:output_file]]...

Options: --input=untokenized|vertical

--output=conll|vertical|xml
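When run_ner is driven from a script, the argument list can be assembled programmatically. The Python sketch below only builds the argument vector following the usage line above; the helper name and the example file names are hypothetical, and nothing is executed.

```python
from typing import List, Optional, Sequence, Tuple, Union

# A file argument is either "input_file" or an (input_file, output_file) pair.
FileArg = Union[str, Tuple[str, str]]


def build_run_ner_command(
    model: str,
    files: Sequence[FileArg] = (),
    input_format: Optional[str] = None,
    output_format: Optional[str] = None,
    binary: str = "run_ner",
) -> List[str]:
    """Build an argv list for run_ner using only the documented options."""
    if input_format not in (None, "untokenized", "vertical"):
        raise ValueError(f"unsupported input format: {input_format!r}")
    if output_format not in (None, "conll", "vertical", "xml"):
        raise ValueError(f"unsupported output format: {output_format!r}")

    command = [binary]
    if input_format is not None:
        command.append(f"--input={input_format}")
    if output_format is not None:
        command.append(f"--output={output_format}")
    command.append(model)
    for item in files:
        if isinstance(item, tuple):
            # input_file:output_file saves the result to output_file.
            command.append(f"{item[0]}:{item[1]}")
        else:
            # A bare input_file sends the result to standard output.
            command.append(item)
    return command
```

The resulting list can be passed to subprocess.run. With no file arguments, run_ner reads standard input and writes standard output.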

3.1. Input Formats

The input format is specified using the --input option. Currently supported input formats are:

- untokenized (default): the input is tokenized and segmented using a tokenizer defined by the model,

- vertical: the input is in vertical format, every line is considered a word, with an empty line denoting the end of a sentence.

3.2. Output Formats

The output format is specified using the --output option. Currently supported output formats are:

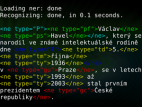

- xml (default): Simple XML format without a root element, using the <sentence> element to mark sentences and the <token> element to mark tokens. The recognized named entities are encoded using the <ne type="..."> element.

  Example input:

  Václav Havel byl český dramatik, esejista, kritik komunistického režimu a později politik.

  NameTag identifies a first name (pf), a surname (ps) and a person name container (P) in the input (line breaks added):

  <sentence><ne type="P"><ne type="pf"><token>Václav</token></ne> <ne type="ps"><token>Havel</token></ne></ne>
  <token>byl</token> <token>český</token> <token>dramatik</token><token>,</token> <token>esejista</token><token>,</token>
  <token>kritik</token> <token>komunistického</token> <token>režimu</token> <token>a</token> <token>později</token>
  <token>politik</token><token>.</token></sentence>

- vertical: Every found named entity is on a separate line. Each line contains three tab-separated fields: entity_range, entity_type and entity_text. The entity_range is composed of token identifiers (counting from 1 and including end-of-sentence; if the input is also vertical, token identifiers correspond exactly to line numbers) of the tokens forming the named entity, and entity_type represents its type. The entity_text is not strictly necessary and contains the space-separated words of this named entity.

  Example input:

  Václav Havel byl český dramatik, esejista, kritik komunistického režimu a později politik.

  Example output:

  1,2	P	Václav Havel
  1	pf	Václav
  2	ps	Havel

- conll: A CoNLL-like vertical format. Every word is on a line, followed by a tab and the recognized entity label. An empty line denotes the end of a sentence. The entity labels are:

  - O: no entity

  - B-type: the word is the first in the entity of type type

  - I-type: the word is a non-initial word in the entity of type type

  Example input:

  Václav Havel byl český dramatik, esejista, kritik komunistického režimu a později politik.

  Example output:

  Václav	B-P
  Havel	I-P
  byl	O
  český	O
  ...
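The vertical and conll outputs are both easy to post-process. The following Python sketch parses them under the field layouts described above; the function and class names are hypothetical, and the comma-separated form of entity_range is inferred from the example output rather than from a formal specification.

```python
from typing import Iterable, List, NamedTuple, Tuple


class Entity(NamedTuple):
    token_ids: List[int]  # 1-based token identifiers from entity_range
    entity_type: str
    text: str


def parse_vertical_output(output: str) -> List[Entity]:
    """Parse --output=vertical lines: entity_range<TAB>entity_type<TAB>entity_text."""
    entities = []
    for line in output.split("\n"):
        if not line.strip():
            continue
        entity_range, entity_type, text = line.split("\t", 2)
        token_ids = [int(token_id) for token_id in entity_range.split(",")]
        entities.append(Entity(token_ids, entity_type, text))
    return entities


def conll_labels_to_spans(labels: Iterable[str]) -> List[Tuple[int, int, str]]:
    """Group the O / B-type / I-type labels of one sentence into entity spans.

    Returns (start, end, type) with 0-based start and exclusive end.
    """
    spans = []
    start, current = 0, None
    # The trailing "O" is a sentinel that closes an entity ending the sentence.
    for index, label in enumerate(list(labels) + ["O"]):
        prefix, _, entity_type = label.partition("-")
        continues = prefix == "I" and entity_type == current
        if current is not None and not continues:
            spans.append((start, index, current))
            current = None
        if label != "O" and not continues:
            start, current = index, entity_type
    return spans
```

For the example sentence, the vertical parser yields three entities (P over tokens 1 and 2, pf over token 1, ps over token 2), while the conll labels collapse to a single P span because that format carries one label per word.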

4. Running the Tokenizer

Using the run_tokenizer executable, it is possible to perform only the tokenization and segmentation used in a specified model.

The input is UTF-8 encoded plain text and the input files are specified in the same way as with the run_ner command.

The full command syntax of run_tokenizer is

run_tokenizer [options] recognizer_model [file[:output_file]]...

Options: --output=vertical|xml

4.1. Output Formats

The output format is specified using the --output option. Currently supported output formats are:

- xml (default): Simple XML format without a root element, using the <sentence> element to mark sentences and the <token> element to mark tokens. Example output for input Děti pojedou k babičce. Už se těší. (line breaks added):

  <sentence><token>Děti</token> <token>pojedou</token> <token>k</token> <token>babičce</token><token>.</token></sentence>
  <sentence><token>Už</token> <token>se</token> <token>těší</token><token>.</token></sentence>

- vertical: Each token is on a separate line, every sentence is ended by a blank line. Example output for input Děti pojedou k babičce. Už se těší.:

  Děti
  pojedou
  k
  babičce
  .

  Už
  se
  těší
  .

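The vertical tokenizer output can be read back into sentences with a few lines of code. This is a minimal Python sketch with a hypothetical function name; it assumes one token per line and a blank line after each sentence, as described above.

```python
from typing import List


def read_vertical_sentences(text: str) -> List[List[str]]:
    """Split vertical output into sentences, each a list of tokens."""
    sentences: List[List[str]] = []
    current: List[str] = []
    for line in text.split("\n"):
        token = line.strip()
        if token:
            current.append(token)
        elif current:
            # A blank line ends the current sentence.
            sentences.append(current)
            current = []
    if current:
        # Tolerate a missing blank line after the last sentence.
        sentences.append(current)
    return sentences
```

The same layout is accepted by run_ner with --input=vertical, so the tokens can be filtered or corrected between the two steps.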

5. Running REST Server

NameTag also provides a REST server binary, nametag_server. The binary uses MicroRestD as a REST server implementation and provides the NameTag REST API.

The full command syntax of nametag_server is

nametag_server [options] port (model_name model_file acknowledgements)*

Options: --connection_timeout=maximum connection timeout [s] (default 60)

--daemon (daemonize after start, supported on Linux only)

--log_file=file path (no logging if empty, default nametag_server.log)

--log_request_max_size=max req log size [kB] (0 unlimited, default 64)

--max_connections=maximum network connections (default 256)

--max_request_size=maximum request size [kB] (default 1024)

--threads=threads to use (default 0 means unlimitted)

The nametag_server can run either in the foreground or in the background (when --daemon is used). The specified model files are loaded during start and kept in memory all the time. This behaviour might change in the future to load the models on demand.

6. Training of Custom Models

Training of custom models is possible using the train_ner binary.

6.1. Training data

To train a named entity recognizer model, training data is needed. The training data must be tokenized and contain annotated named entities. The named entities are non-overlapping, consist of a sequence of words and have a specified type.

The training data must be encoded in UTF-8. The lines correspond to individual words and an empty line denotes the end of a sentence. Each non-empty line contains exactly two tab-separated columns: the first is the word form and the second is the annotation. The format of the annotation is taken from CoNLL-2003: the annotation O (or _) denotes no named entity, and the annotations I-type and B-type denote a named entity of the specified type. The I-type and B-type annotations are equivalent except for one case: if the previous word is also a named entity of the same type, then

- if the current word is annotated as I-type, it is part of the same named entity as the previous word,

- if the current word is annotated as B-type, it is in a different named entity than the previous word (albeit with the same type).
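Training data in this format can be produced from span annotations. The Python sketch below is one possible encoding, not the only valid one: it always marks the first word of an entity with B-type and the rest with I-type, which is unambiguous under the rule above. The function name and the span representation are hypothetical.

```python
from typing import Iterable, List, Sequence, Tuple

# An entity span is (start, end, type) with 0-based start and exclusive end.
Span = Tuple[int, int, str]


def to_training_data(sentences: Iterable[Tuple[Sequence[str], Sequence[Span]]]) -> str:
    """Serialize sentences as word<TAB>annotation lines, blank line after each sentence."""
    lines: List[str] = []
    for words, entities in sentences:
        labels = ["O"] * len(words)
        for start, end, entity_type in entities:
            if not 0 <= start < end <= len(words):
                raise ValueError(f"span out of range: {(start, end)}")
            if any(label != "O" for label in labels[start:end]):
                raise ValueError("named entities must not overlap")
            labels[start] = f"B-{entity_type}"
            for index in range(start + 1, end):
                labels[index] = f"I-{entity_type}"
        lines.extend(f"{word}\t{label}" for word, label in zip(words, labels))
        lines.append("")  # empty line denotes the end of the sentence
    return "\n".join(lines) + "\n" if lines else ""
```

Using B-type for every entity start also keeps two adjacent entities of the same type separate, which is exactly the case where I-type and B-type differ.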

6.2. Tagger

Most named entity recognizer models utilize part of speech tags and lemmas. NameTag can utilize several taggers to obtain the tags and lemmas:

- trivial: Do not use any tagger. The lemma is the same as the given form and there is no part of speech tag.

- external: Use some external tagger. The input "forms" can contain multiple space-separated values, the first being the form, the second the lemma and the rest the part of speech tag. The part of speech tag is optional. The lemma is also optional and if missing, the form itself is used as a lemma.

- morphodita:model: Use MorphoDiTa as a tagger with the specified model. This tagger model is embedded in the resulting named entity recognizer model. The lemmatizer model of MorphoDiTa is recommended, because it is very fast and small, and detailed part of speech tags do not improve the performance of the named entity recognizer significantly.
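The layout of a "form" field for the external tagger can be illustrated with a small parser. This Python sketch follows the description above (form, optional lemma, optional tag); the function name and the example tag value are hypothetical, and NameTag's own handling of unusual input may differ.

```python
from typing import Tuple


def split_external_form(field: str) -> Tuple[str, str, str]:
    """Split 'form [lemma [tag]]' into (form, lemma, tag).

    A missing lemma defaults to the form; a missing tag is the empty string.
    Everything after the second space is treated as the tag.
    """
    parts = field.split(" ", 2)
    form = parts[0]
    lemma = parts[1] if len(parts) > 1 else form
    tag = parts[2] if len(parts) > 2 else ""
    return form, lemma, tag
```

For example, split_external_form("Prahy Praha NNFS2") separates the three values, while split_external_form("Prahy") reuses the form as the lemma.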

6.2.1. Lemma Structure

The lemmas used by the recognizer can be structured and consist of three parts:

- raw lemma is the textual form of the lemma, possibly ambiguous

- lemma id is the unique lemma identification (for example a raw lemma plus a numeric identifier)

- lemma comment is additional information about a lemma occurrence, not used to identify the lemma. If used, it usually contains information which is not possible to encode in part of speech tags.

Currently, all these parts are filled only when the morphodita tagger is used. If the external tagger is used, raw lemma and lemma id are the same and lemma comment is empty.

6.3. Feature Templates

The recognizer utilizes feature templates to generate features which are used as the input to the named entity classifier. The feature templates are specified in a file, one feature template per line. Empty lines and lines starting with # are ignored.

The first space-separated column on a line is the name of the feature template, optionally followed by a slash and a window size. The window size specifies how many adjacent words can observe the feature template value of a given word, with default value of 0 denoting only the word in question.
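The line format can be made concrete with a small reader. The following Python sketch is not NameTag's parser; it only mirrors the rules stated above (comments, empty lines, optional /window suffix with default 0), and the function name is hypothetical.

```python
from typing import List, Tuple


def parse_feature_templates(text: str) -> List[Tuple[str, int, List[str]]]:
    """Return (template_name, window_size, arguments) for each template line."""
    templates = []
    for line in text.split("\n"):
        if not line.strip() or line.startswith("#"):
            continue  # empty lines and comments are ignored
        head, *arguments = line.split()
        name, _, window = head.partition("/")
        # A missing window size means 0: only the word itself sees the value.
        templates.append((name, int(window) if window else 0, arguments))
    return templates
```

A line such as Form/2 is read as the template Form with window size 2, so the value computed for a word is also visible to the two words on either side.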

A list of commonly used feature templates follows. Note that it is probably not exhaustive (see the sources in the features directory).

- BrownClusters file [prefix_lengths] – use Brown clusters found in the specified file. An optional list of lengths of cluster prefixes to be used in addition to the full Brown cluster can be specified. Each line of the Brown clusters file must contain two tab-separated columns, the first of which is the Brown cluster label and the second is a raw lemma.

- CzechAddContainers – add CNEC containers (currently only P and T)

- CzechLemmaTerm – feature template specific for the Czech morphological system by Jan Hajič (Hajič 2004). The term information (personal name, geographic name, ...) specified in the lemma comment is used as features.

- Form – use forms as features

- FormCapitalization – use capitalization of form as features

- FormCaseNormalized – use case normalized (first character as-is, others lowercased) forms as features

- FormCaseNormalizedSuffix shortest longest – use suffixes of case normalized (first character as-is, others lowercased) forms of lengths between shortest and longest

- Gazetteers [files] – use given files as gazetteers. Each file is one gazetteers list independent of the others and must contain a set of lemma sequences, each on a line, represented as raw lemmas separated by spaces.

- GazetteersEnhanced (form|rawlemma|rawlemmas) (embed_in_model|out_of_model) file_base entity [file_base entity ...] – use gazetteers from given files. Each gazetteer contains (possibly multiword) named entities, one per line. Matching of the named entities can be performed using either form, the disambiguated rawlemma, or any of the rawlemmas proposed by the morphological analyzer. The gazetteers might be embedded in the model file or not; in either case, additional gazetteers are loaded during each startup.

  For each file_base specified in GazetteersEnhanced templates, three files are tried:

  - file_base.txt: gazetteers used as features, representing each file_base with a unique feature

  - file_base.hard_pre.txt: matched named entities (finding non-overlapping entities, preferring the ones starting earlier and longer ones in case of ties) are forced to the specified entity type even before the NER model is executed

  - file_base.hard_post.txt: after running the NER model, tokens not recognized as entities are matched against the gazetteers (again finding non-overlapping entities, preferring the ones starting earlier and longer ones in case of ties) and marked as entity type if found

- Lemma – use lemma ids as a feature

- NumericTimeValue – recognize numbers which could represent hours, minutes, hour:minute time, days, months or years

- PreviousStage – use named entities predicted by the previous stage as features

- RawLemma – use raw lemmas as features

- RawLemmaCapitalization – use capitalization of raw lemma as features

- RawLemmaCaseNormalized – use case normalized (first character as-is, others lowercased) raw lemmas as features

- RawLemmaCaseNormalizedSuffix shortest longest – use suffixes of case normalized (first character as-is, others lowercased) raw lemmas of lengths between shortest and longest

- Tag – use tags as features

- URLEmailDetector url_type email_type – detect URLs and emails. If a URL or an email is detected, it is immediately marked with the specified named entity type and not used in further processing.
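The matching preference described for the hard_pre and hard_post gazetteers (non-overlapping entities, earlier start first, longer match on ties) corresponds to a leftmost-longest scan. The Python sketch below illustrates that strategy only; it is an assumption about the behaviour, not NameTag's implementation, and the function name and data representation are hypothetical.

```python
from typing import List, Sequence, Set, Tuple


def match_gazetteer(tokens: Sequence[str], gazetteer: Set[Tuple[str, ...]]) -> List[Tuple[int, int]]:
    """Find non-overlapping gazetteer matches, leftmost first, longest on ties.

    Each gazetteer entry is a tuple of tokens. Returned spans are
    (start, end) with 0-based start and exclusive end.
    """
    longest_entry = max((len(entry) for entry in gazetteer), default=0)
    spans = []
    position = 0
    while position < len(tokens):
        limit = min(longest_entry, len(tokens) - position)
        # Try the longest candidate first so that longer entries win ties.
        for length in range(limit, 0, -1):
            if tuple(tokens[position:position + length]) in gazetteer:
                spans.append((position, position + length))
                position += length  # skip past the match: no overlaps
                break
        else:
            position += 1
    return spans
```

With the entries ("New", "York") and ("New", "York", "City"), the tokens New York City are matched as one three-token entity rather than as the shorter entry.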

For inspiration, we present the feature file used for the Czech NER model. This feature file is a simplified version of the feature templates described in the paper Straková et al. 2013: Straková Jana, Straka Milan, Hajič Jan, A New State-of-The-Art Czech Named Entity Recognizer. In: Lecture Notes in Computer Science, Vol. 8082, Text, Speech and Dialogue: 16th International Conference, TSD 2013. Proceedings, Copyright © Springer Verlag, Berlin / Heidelberg, ISBN 978-3-642-40584-6, ISSN 0302-9743, pp. 68-75, 2013.

# Sentence processors
Form/2
Lemma/2
RawLemma/2
RawLemmaCapitalization/2
Tag/2
NumericTimeValue/1
CzechLemmaTerm/2
BrownClusters/2 clusters/wiki-1000-3
Gazetteers/2 gazetteers/cities.txt gazetteers/clubs.txt gazetteers/countries.txt gazetteers/feasts.txt gazetteers/institutions.txt gazetteers/months.txt gazetteers/objects.txt gazetteers/psc.txt gazetteers/streets.txt
PreviousStage/5
# Detectors
URLEmailDetector mi me
# Entity processors
CzechAddContainers

6.4. Running train_ner

The train_ner binary has the following arguments (which have to be specified in this order):

- ner_identifier – identifier of the named entity recognizer type. This affects the tokenizer used in this model, and in theory any other aspect of the recognizer. Supported values:

  - czech

  - english

  - generic

- tagger – the tagger identifier as described in the Tagger section.

- feature_templates_file – file with feature templates as described in the Feature Templates section.

- stages – the number of stages performed during recognition. Common values are either 1 or 2. With more stages, the model is larger and recognition is slower, but more accurate.

- iterations – the number of iterations performed when training each stage of the recognizer. With more iterations, training takes longer (the recognition time is unaffected), but the model gets over-trained when too many iterations are used. Values from 10 to 30 or 50 are commonly used.

- missing_weight – default value of missing weights in the log-linear model. Common values are small negative real numbers like -0.2.

- initial_learning_rate – learning rate used in the first iteration of the SGD training method of the log-linear model. A common value is 0.1.

- final_learning_rate – learning rate used in the last iteration of the SGD training method of the log-linear model. Common values are in the range from 0.1 to 0.001, with 0.01 working reasonably well.

- gaussian – the value of Gaussian prior imposed on the weights. In other words, value of L2-norm regularizer. Common value is either 0 for no regularization, or small real number like 0.5.

- hidden_layer – experimental support for hidden layer in the artificial neural network classifier. To not use the hidden layer (recommended), use 0. Otherwise, specify the number of neurons in the hidden layer. Please note that non-zero values will create enormous models, slower recognition and are not guaranteed to create models with better accuracy.

- heldout_data – optional parameter with heldout data in the described format. If the heldout data is present, the accuracy of the heldout data classification is printed during training. The heldout data is not used in any other way.

The training data in the described format is read from the standard input and the trained model is written to the standard output if the training is successful.
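Because the arguments are positional, it is easy to get their order wrong. The Python sketch below assembles the argument vector in the documented order. The helper name and the example file name are hypothetical, and the default values are merely the "common values" quoted above, not defaults of train_ner itself (which requires the arguments to be given).

```python
from typing import List, Optional


def build_train_ner_command(
    ner_identifier: str,
    tagger: str,
    feature_templates_file: str,
    stages: int = 2,
    iterations: int = 30,
    missing_weight: float = -0.2,
    initial_learning_rate: float = 0.1,
    final_learning_rate: float = 0.01,
    gaussian: float = 0.5,
    hidden_layer: int = 0,
    heldout_data: Optional[str] = None,
    binary: str = "train_ner",
) -> List[str]:
    """Build an argv list for train_ner with arguments in the documented order."""
    if ner_identifier not in ("czech", "english", "generic"):
        raise ValueError(f"unsupported ner_identifier: {ner_identifier!r}")
    command = [
        binary,
        ner_identifier,
        tagger,
        feature_templates_file,
        str(stages),
        str(iterations),
        str(missing_weight),
        str(initial_learning_rate),
        str(final_learning_rate),
        str(gaussian),
        str(hidden_layer),
    ]
    if heldout_data is not None:
        command.append(heldout_data)  # optional last argument
    return command


# Usage sketch (not executed here): training data goes to standard input and
# the model is read from standard output, e.g. with
# subprocess.run(command, stdin=training_file, stdout=model_file, check=True).
```

Since the model is written to standard output, it should be redirected to a file opened in binary mode.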